Fabio Narducci

Language identification as improvement for lip-based biometric visual systems

Feb 27, 2023

Abstract: Language has always been one of humanity's defining characteristics. Visual Language Identification (VLI) is a relatively new and complex field of research that remains largely understudied. In this paper, we present a preliminary study in which we use linguistic information as a soft biometric trait to enhance the performance of a visual (audio-free) identification system based on lip movement. We report a significant improvement in the identification performance of the proposed visual system as a result of integrating these data through a score-based fusion strategy. Both deep learning and machine learning methods are considered and evaluated. For experimentation purposes, we created a dataset called laBial Articulation for the proBlem of the spokEn Language rEcognition (BABELE), which covers eight different languages. It includes a collection of different features, of which the spoken language is the most relevant; each sample is also manually labelled with the gender and age of the subject.
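The abstract does not specify the fusion rule, so the following is only a minimal sketch of one common score-level scheme, assuming a min-max-normalized weighted sum. The lip-based system's identity scores are combined with the probability that a VLI classifier assigns to each enrolled subject's language. The function names, the `weight` value of 0.8, and the example subjects are all hypothetical.

```python
"""Hypothetical sketch: score-level fusion of lip-based identity scores
with spoken language used as a soft biometric (not the paper's exact rule)."""


def min_max_normalize(scores):
    """Rescale identity -> raw score into [0, 1] so it is comparable to a probability."""
    lo, hi = min(scores.values()), max(scores.values())
    if hi == lo:
        return {k: 1.0 for k in scores}
    return {k: (v - lo) / (hi - lo) for k, v in scores.items()}


def fuse_scores(lip_scores, language_probs, gallery_language, weight=0.8):
    """Weighted-sum fusion (assumed scheme).

    lip_scores: identity -> raw similarity from the lip-based system (higher is better)
    language_probs: language -> probability predicted for the probe by a VLI classifier
    gallery_language: identity -> language the enrolled subject speaks
    weight: share of the fused score given to the lip-based system (assumed value)
    """
    norm = min_max_normalize(lip_scores)
    fused = {}
    for identity, s in norm.items():
        # Soft biometric term: how likely the probe speaks this subject's language.
        lang_score = language_probs.get(gallery_language[identity], 0.0)
        fused[identity] = weight * s + (1.0 - weight) * lang_score
    return fused


def identify(lip_scores, language_probs, gallery_language, weight=0.8):
    """Return the gallery identity with the highest fused score."""
    fused = fuse_scores(lip_scores, language_probs, gallery_language, weight)
    return max(fused, key=fused.get)


# Toy example: the lip scores alone slightly favour "alice",
# but the language evidence points to an English speaker.
lip_scores = {"alice": 0.62, "bob": 0.60, "chen": 0.20}
language_probs = {"Italian": 0.10, "English": 0.85, "Chinese": 0.05}
gallery_language = {"alice": "Italian", "bob": "English", "chen": "Chinese"}

print(identify(lip_scores, language_probs, gallery_language))              # bob
print(identify(lip_scores, language_probs, gallery_language, weight=1.0))  # alice
```

Setting `weight=1.0` disables the soft biometric and recovers the lip-only decision. This makes it easy to compare the two configurations on the same probes.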

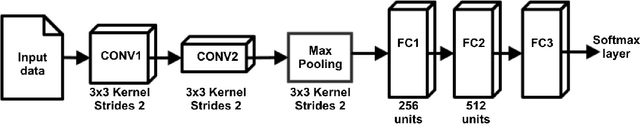

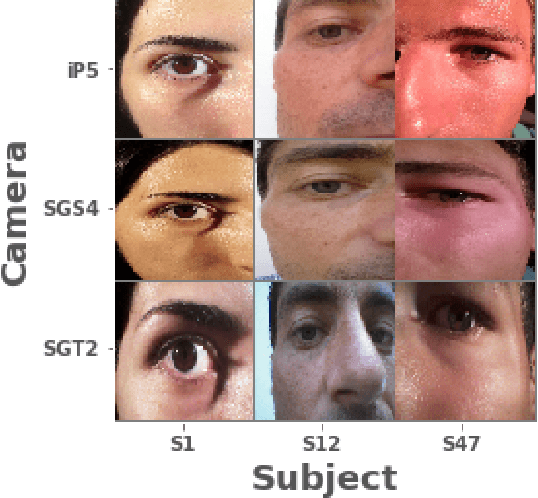

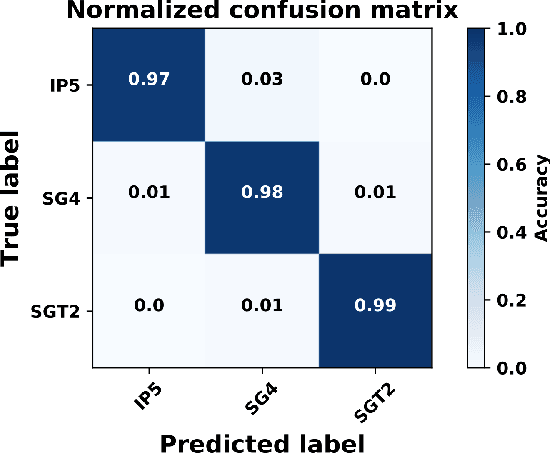

Deep learning for source camera identification on mobile devices

Oct 13, 2017

Abstract: In the present paper, we propose a source camera identification method for mobile devices based on deep learning. Recently, convolutional neural networks (CNNs) have shown remarkable performance on several tasks such as image recognition, video analysis, and natural language processing. A CNN consists of a set of layers, each composed of a set of high-pass filters that are applied across the entire input image. This convolution process enables the network to extract features automatically from data and to learn from those features. We describe a CNN architecture that is able to infer the noise pattern of mobile camera sensors (also known as the camera fingerprint), with the aim of identifying not only the mobile device used to capture an image (with 98% accuracy) but also which of its embedded cameras captured it. More specifically, we provide an extensive analysis of the proposed architecture under different configurations. The experiments were carried out on images captured by the cameras of different mobile devices (using the MICHE-I dataset), and the results demonstrate the robustness of the proposed method.
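To make the convolution step concrete, here is a minimal sketch of a single filter slid over a grayscale image. In a trained CNN the filter weights are learned. The fixed Laplacian-style kernel below is a hypothetical stand-in chosen because its weights sum to zero. Flat regions therefore produce no response, and only high-frequency content survives, which is where sensor noise patterns are usually sought. `conv2d_valid` and `HIGH_PASS` are illustrative names, not part of the paper.

```python
"""Illustrative sketch: one fixed high-pass filter applied across an image,
as a single CNN channel would (real CNN filters are learned, not fixed)."""

# Hypothetical 3x3 Laplacian-style kernel; weights sum to zero,
# so constant regions map to 0 and only local variations remain.
HIGH_PASS = [
    [0, -1, 0],
    [-1, 4, -1],
    [0, -1, 0],
]


def conv2d_valid(image, kernel):
    """2D 'valid' cross-correlation (no padding), the operation CNN layers compute."""
    kh, kw = len(kernel), len(kernel[0])
    out_h = len(image) - kh + 1
    out_w = len(image[0]) - kw + 1
    out = []
    for i in range(out_h):
        row = []
        for j in range(out_w):
            acc = 0
            # Weighted sum of the kh x kw window anchored at (i, j).
            for u in range(kh):
                for v in range(kw):
                    acc += image[i + u][j + v] * kernel[u][v]
            row.append(acc)
        out.append(row)
    return out


# A flat patch carries no high-frequency content...
flat = [[10] * 5 for _ in range(5)]
print(conv2d_valid(flat, HIGH_PASS))  # [[0, 0, 0], [0, 0, 0], [0, 0, 0]]

# ...while an isolated bright pixel (a crude stand-in for a noise spike) does.
spike = [[0] * 5 for _ in range(5)]
spike[2][2] = 1
print(conv2d_valid(spike, HIGH_PASS))  # [[0, -1, 0], [-1, 4, -1], [0, -1, 0]]
```

A CNN stacks many such filters per layer and learns their weights during training. That lets it learn noise-sensitive filters from data rather than relying on a hand-designed kernel like this one.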
