Eya Ben Amar

Stochastic differential equations for performance analysis of wireless communication systems

Feb 08, 2024

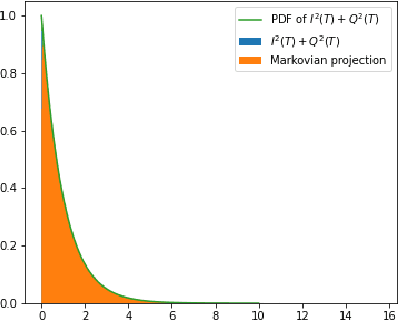

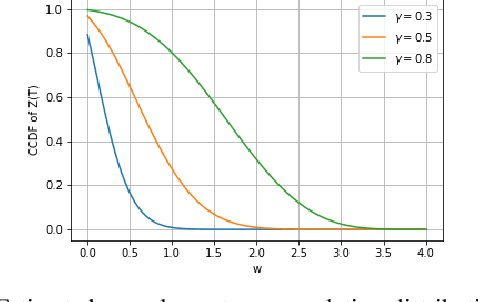

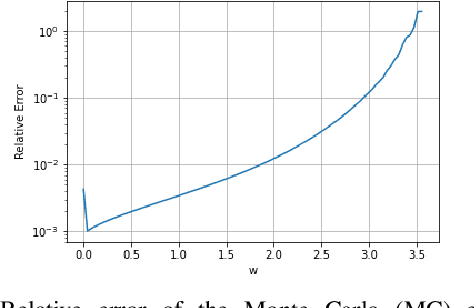

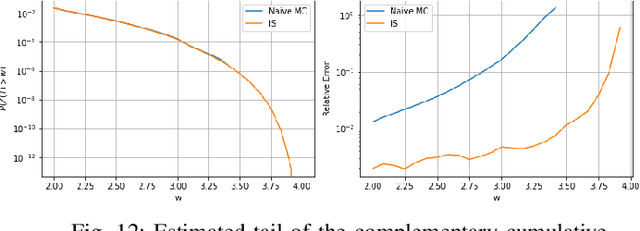

Abstract: This paper addresses the difficulty of characterizing the time-varying nature of fading channels. Current time-invariant models often fail to capture and track these dynamic characteristics. To overcome this limitation, we explore the use of stochastic differential equations (SDEs) and Markovian projection to model signal envelope variations, considering scenarios involving Rayleigh, Rice, and Hoyt distributions. It is also of practical interest to study the performance of channels modeled by SDEs. In this work, we investigate the fade duration metric, defined as the time during which the signal remains below a specified threshold within a fixed time interval. We estimate the complementary cumulative distribution function (CCDF) of the fade duration using Monte Carlo simulations and analyze the influence of system parameters on its behavior. Finally, we leverage importance sampling, a well-known variance-reduction technique, to estimate the tail of the CCDF efficiently.
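To make the fade duration metric concrete, the following is a minimal sketch of a crude Monte Carlo estimate of its CCDF. It is not the paper's model: it assumes, purely for illustration, that the in-phase and quadrature components are independent Ornstein-Uhlenbeck processes (so the stationary envelope is Rayleigh), discretized with the Euler-Maruyama scheme. The function names and the parameters `theta`, `sigma`, and `threshold` are hypothetical, and no importance sampling is applied.

```python
import numpy as np


def simulate_fade_durations(n_paths, T, dt, theta, sigma, threshold, rng):
    """Simulate the time each envelope path spends below `threshold` on [0, T].

    Assumption (not from the paper): in-phase/quadrature components follow
    dX = -theta * X dt + sigma dW, discretized with Euler-Maruyama.
    """
    n_steps = int(round(T / dt))
    # Start both components from the OU stationary law N(0, sigma^2 / (2 theta)).
    std0 = sigma / np.sqrt(2.0 * theta)
    x = rng.normal(0.0, std0, n_paths)
    y = rng.normal(0.0, std0, n_paths)
    fade = np.zeros(n_paths)
    sqrt_dt = np.sqrt(dt)
    for _ in range(n_steps):
        x = x - theta * x * dt + sigma * sqrt_dt * rng.standard_normal(n_paths)
        y = y - theta * y * dt + sigma * sqrt_dt * rng.standard_normal(n_paths)
        # Envelope R = sqrt(X^2 + Y^2); accumulate time spent below threshold.
        fade += dt * (np.hypot(x, y) < threshold)
    return fade


def empirical_ccdf(samples, taus):
    """Crude Monte Carlo estimate of P(fade duration > tau) for each tau."""
    samples = np.asarray(samples)
    return np.array([(samples > tau).mean() for tau in taus])


if __name__ == "__main__":
    rng = np.random.default_rng(0)
    fade = simulate_fade_durations(
        n_paths=2000, T=1.0, dt=0.01, theta=1.0, sigma=1.0,
        threshold=0.5, rng=rng,
    )
    taus = np.linspace(0.0, 1.0, 11)
    for tau, p in zip(taus, empirical_ccdf(fade, taus)):
        print(f"P(fade duration > {tau:.1f}) ~ {p:.4f}")
```

With a fixed number of paths, the estimate for large `tau` rests on very few samples, which is the regime where a variance-reduction technique such as importance sampling becomes useful.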
