Evan Hu

Can Transformers Learn Sequential Function Classes In Context?

Dec 21, 2023

Abstract: In-context learning (ICL) has revolutionized the capabilities of transformer models in NLP. In our project, we extend the understanding of the mechanisms underpinning ICL by exploring whether transformers can learn from sequential, non-textual function class data distributions. We introduce a novel sliding window sequential function class and employ toy-sized transformers with a GPT-2 architecture to conduct our experiments. Our analysis indicates that these models can indeed leverage ICL when trained on non-textual sequential function classes. Additionally, our experiments with randomized y-label sequences highlight that transformers retain some ICL capabilities even when the label associations are obfuscated. We provide evidence that transformers can reason with and understand sequentiality encoded within function classes, as reflected by the effective learning of our proposed tasks. Our results also show that performance deteriorates with increasing randomness in the labels, though not to the extent one might expect, implying a potential robustness of learned sequentiality against label noise. Future research may want to look into how previous explanations of transformers, such as induction heads and task vectors, relate to sequentiality in ICL in these toy examples. Our investigation lays the groundwork for further research into how transformers process and perceive sequential data.

Architectural Resilience to Foreground-and-Background Adversarial Noise

Mar 23, 2020

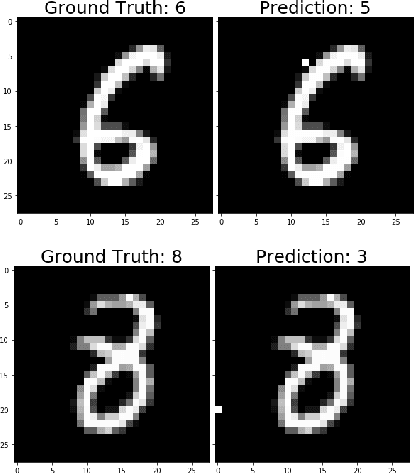

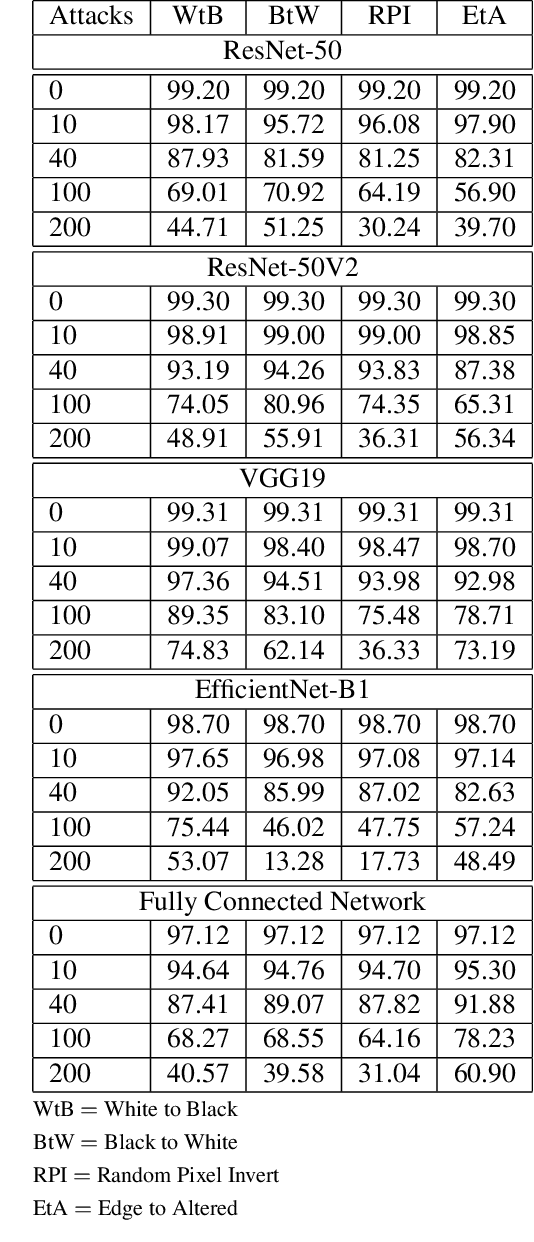

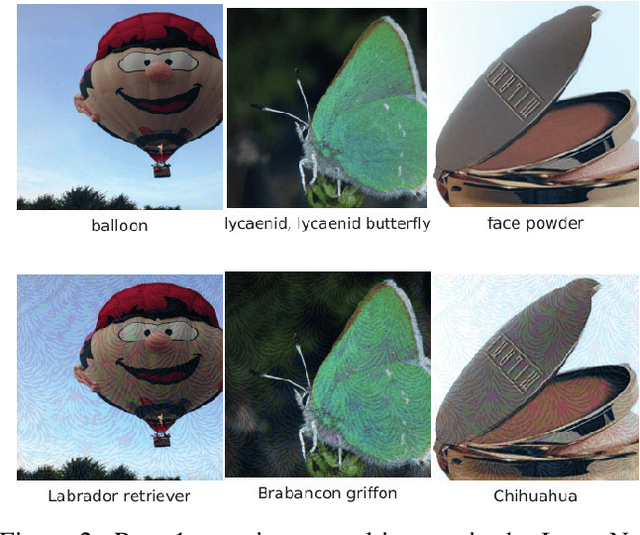

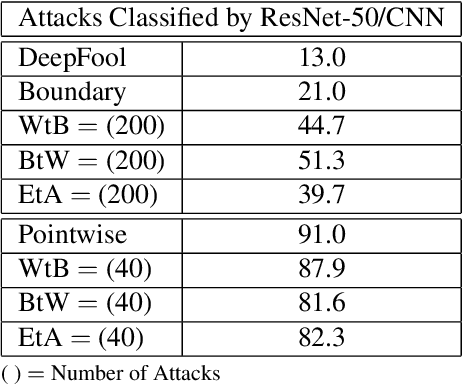

Abstract: Adversarial attacks in the form of imperceptible perturbations of normal images have been extensively studied, and for every new defense methodology created, multiple adversarial attacks are found to counteract it. In particular, a popular style of attack, exemplified in recent years by DeepFool and Carlini-Wagner, relies solely on white-box scenarios in which full access to the predictive model and its weights is required. In this work, we instead propose distinct model-agnostic benchmark perturbations of images in order to investigate the resilience and robustness of different network architectures. Our results show empirically that increasing depth within most types of Convolutional Neural Networks typically improves model resilience towards general attacks, with the improvement diminishing as the model becomes deeper. Additionally, we find that a notable difference in adversarial robustness exists between residual architectures with skip connections and non-residual architectures of similar complexity. Our findings provide direction for future understanding of the effects of residual connections and depth on network robustness.
