Eun-Seok Ryu

LTC-GIF: Attracting More Clicks on Feature-length Sports Videos

Jan 22, 2022

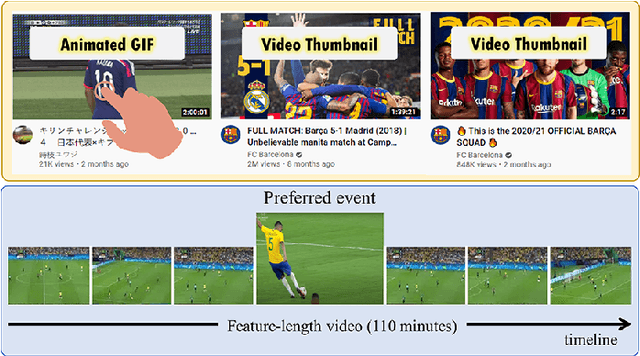

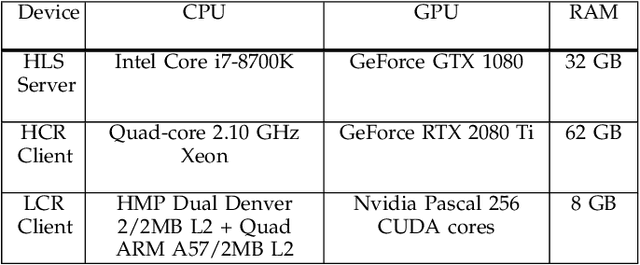

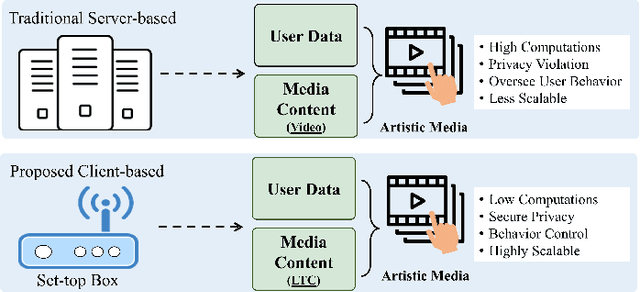

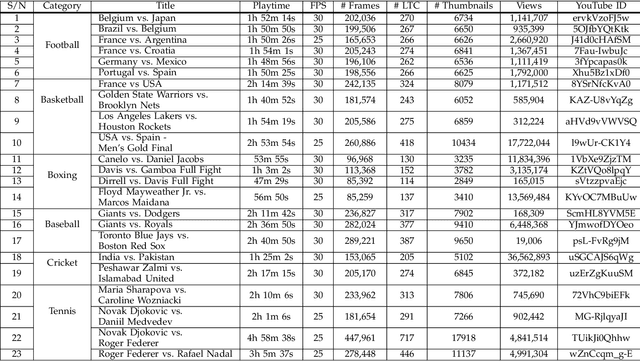

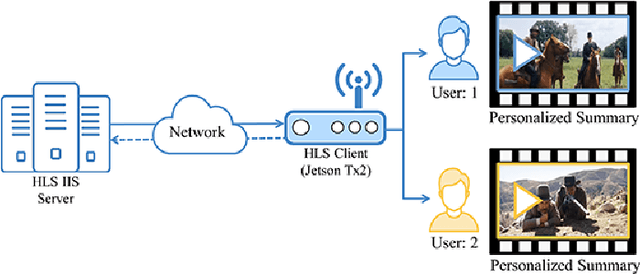

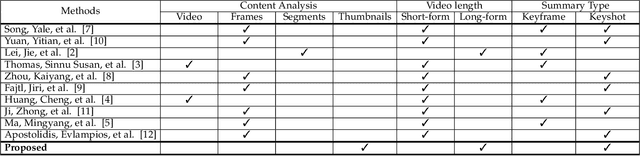

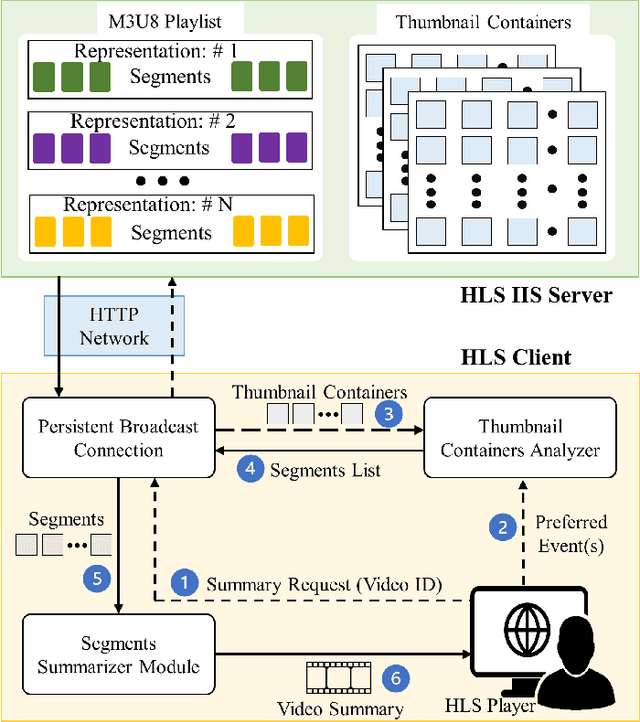

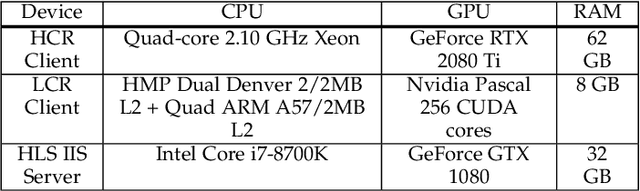

Abstract: This paper proposes a lightweight method to attract users and increase video views by presenting personalized artistic media, i.e., static thumbnails and animated GIFs. The method analyzes lightweight thumbnail containers (LTC) using the computational resources of the client device to recognize personalized events in full-length sports videos. In addition, instead of processing the entire video, it processes only small video segments to generate artistic media. This makes the proposed approach more computationally efficient than baseline approaches that create artistic media from the entire video. Because the method retrieves and uses only thumbnail containers and video segments, it reduces both the required transmission bandwidth and the amount of locally stored data used during artistic media generation. In extensive experiments conducted on the Nvidia Jetson TX2, the computational complexity of the proposed method was 3.57 times lower than that of the state-of-the-art (SoA) method. In the qualitative assessment, GIFs generated using the proposed method received overall ratings 1.02 higher than those of the SoA method. To the best of our knowledge, this is the first technique that uses LTC to generate artistic media while providing lightweight and high-performance services even on resource-constrained devices.

LTC-SUM: Lightweight Client-driven Personalized Video Summarization Framework Using 2D CNN

Jan 22, 2022

Abstract: This paper proposes a novel lightweight thumbnail container-based summarization (LTC-SUM) framework for full feature-length videos. The framework generates a personalized keyshot summary for concurrent users by using the computational resources of the end-user device. State-of-the-art methods that acquire and process the entire video to generate summaries are highly computationally intensive. In contrast, the proposed LTC-SUM method uses lightweight thumbnails to handle the complex process of detecting events. This significantly reduces computational complexity and improves communication and storage efficiency by resolving computational and privacy bottlenecks in resource-constrained end-user devices. These improvements were achieved by designing a lightweight 2D CNN model to extract features from thumbnails, which made it possible to select and retrieve only a handful of specific segments. Extensive quantitative experiments on a set of 18 full feature-length videos (approximately 32.9 h in duration) showed that the proposed method is significantly more computationally efficient than state-of-the-art methods on the same end-user device configurations. Joint qualitative assessments by 56 participants showed that participants gave higher ratings to the summaries generated using the proposed method. To the best of our knowledge, this is the first attempt at designing a fully client-driven personalized keyshot video summarization framework using thumbnail containers for feature-length videos.
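
The segment-selection idea described in this abstract can be illustrated with a minimal sketch. It is not the authors' implementation: the function name `select_segments`, the per-thumbnail scores, the thumbnail interval, the segment length, and the threshold are all hypothetical, and the scores stand in for whatever a thumbnail-level model such as the 2D CNN would produce. The sketch only shows how thumbnails flagged as interesting could be mapped to the few video segments a client would then request.

```python
from typing import List


def select_segments(scores: List[float], thumb_interval_s: float,
                    segment_len_s: float, threshold: float) -> List[int]:
    """Return indices of the video segments that contain at least one
    thumbnail whose (hypothetical) event score reaches the threshold."""
    wanted = set()
    for i, score in enumerate(scores):
        if score >= threshold:
            # Timestamp of the i-th thumbnail, assuming uniform sampling.
            timestamp = i * thumb_interval_s
            # Index of the fixed-length segment covering that timestamp.
            wanted.add(int(timestamp // segment_len_s))
    return sorted(wanted)


# Assumed example values: one thumbnail per second, 2-second segments.
scores = [0.1, 0.2, 0.9, 0.8, 0.1, 0.1, 0.1, 0.7]
segments = select_segments(scores, thumb_interval_s=1.0,
                           segment_len_s=2.0, threshold=0.5)
print(segments)  # only these segments would be fetched
```

Under these assumptions, only two of the four segments are requested, which is the kind of saving in transfer and processing that the abstract attributes to working from thumbnails rather than the entire video.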
