Eugene Kim

Pseudo-label Correction for Instance-dependent Noise Using Teacher-student Framework

Nov 24, 2023

Abstract: The high capacity of deep learning models to learn complex patterns poses a significant challenge when confronted with label noise. The inability to differentiate clean and noisy labels ultimately results in poor generalization. We approach this problem by reassigning the label for each image using a new teacher-student based framework termed P-LC (pseudo-label correction). Traditional teacher-student networks are composed of teacher and student classifiers for knowledge distillation. In our novel approach, we reconfigure the teacher network into a triple encoder, leveraging the triplet loss to establish a pseudo-label correction system. As the student generates pseudo labels for a set of given images, the teacher learns to choose between the initially assigned labels and the pseudo labels. Experiments on MNIST, Fashion-MNIST, and SVHN demonstrate P-LC's superior performance over existing state-of-the-art methods across all noise levels, most notably in high noise. In addition, we introduce a noise level estimation to help assess model performance and inform the need for additional data cleaning procedures.
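As a rough illustration of the two ideas the abstract names (a triplet loss, and a choice between the assigned label and the pseudo label), the sketch below uses plain Python. It is not the P-LC implementation: the class `prototypes`, the `choose_label` rule (pick whichever candidate label's prototype is closer in embedding space), and all numeric values are assumptions made only for illustration.

```python
import math


def euclidean(a, b):
    """Euclidean distance between two equal-length vectors."""
    return math.sqrt(sum((x - y) ** 2 for x, y in zip(a, b)))


def triplet_loss(anchor, positive, negative, margin=1.0):
    """Standard triplet margin loss: max(0, d(a, p) - d(a, n) + margin)."""
    return max(0.0, euclidean(anchor, positive) - euclidean(anchor, negative) + margin)


def choose_label(embedding, prototypes, assigned_label, pseudo_label):
    """Hypothetical selection rule (an assumption, not taken from the paper):
    keep whichever candidate label has the closer class prototype."""
    d_assigned = euclidean(embedding, prototypes[assigned_label])
    d_pseudo = euclidean(embedding, prototypes[pseudo_label])
    return assigned_label if d_assigned <= d_pseudo else pseudo_label


# Toy 2-D embeddings; the values are made up for the example.
prototypes = {0: [0.0, 0.0], 1: [3.0, 4.0]}
image_embedding = [2.5, 3.5]

# The positive is already much closer than the negative, so the loss is zero.
loss = triplet_loss([0.0, 0.0], [0.0, 1.0], [3.0, 4.0])

# The image was assigned label 0, the student proposed label 1.
corrected = choose_label(image_embedding, prototypes, assigned_label=0, pseudo_label=1)
print(loss, corrected)
```

In this toy case the embedding lies near the prototype of class 1, so the pseudo label replaces the assigned one. How the actual teacher encoder is trained and how it makes this choice is described in the paper, not here.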

Query-by-Example Keyword Spotting system using Multi-head Attention and Softtriple Loss

Feb 14, 2021

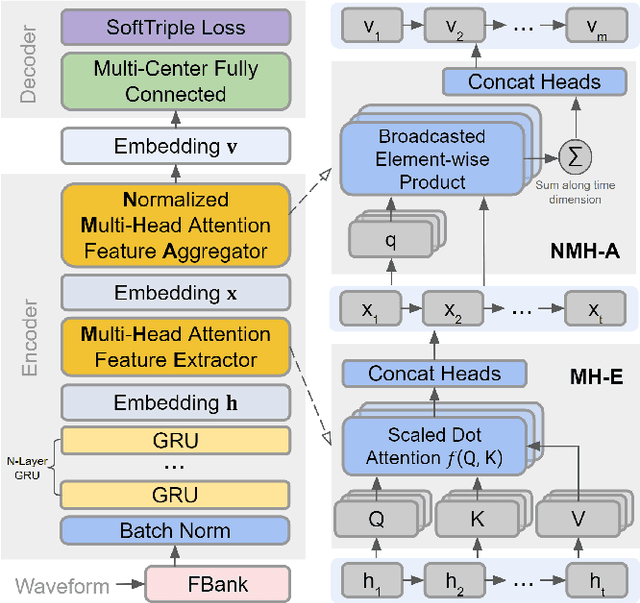

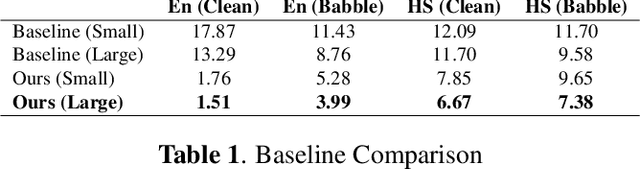

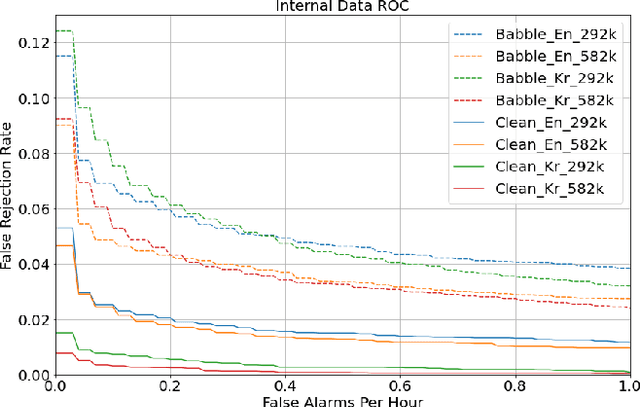

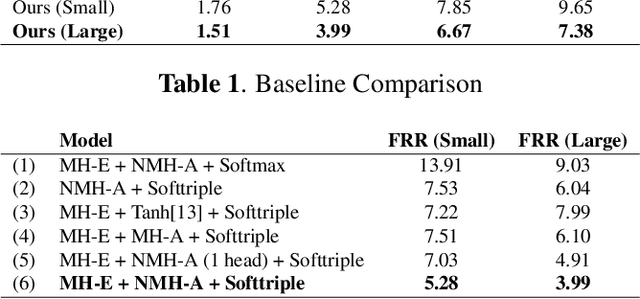

Abstract: This paper proposes a neural network architecture for tackling the query-by-example user-defined keyword spotting task. A multi-head attention module is added on top of a multi-layered GRU for effective feature extraction, and a normalized multi-head attention module is proposed for feature aggregation. We also adopt the softtriple loss - a combination of triplet loss and softmax loss - and showcase its effectiveness. We demonstrate the performance of our model on internal datasets with different languages and the public Hey-Snips dataset. We compare the performance of our model to a baseline system and conduct an ablation study to show the benefit of each component in our architecture. The proposed work shows solid performance while preserving simplicity.
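The abstract does not say how a query is scored against the user's enrolled examples. A common pattern in query-by-example systems is to compare fixed-size utterance embeddings by cosine similarity and apply a threshold; the sketch below assumes that pattern. The function `detect_keyword`, the `threshold` value, and the toy embeddings are hypothetical and stand in for the output of an encoder such as the one the paper proposes.

```python
import math


def cosine_similarity(a, b):
    """Cosine similarity between two non-zero, equal-length vectors."""
    dot = sum(x * y for x, y in zip(a, b))
    norm_a = math.sqrt(sum(x * x for x in a))
    norm_b = math.sqrt(sum(y * y for y in b))
    return dot / (norm_a * norm_b)


def detect_keyword(query_embedding, enrolled_embeddings, threshold=0.8):
    """Hypothetical decision rule (an assumption): accept the query if its best
    similarity to any enrolled example reaches the threshold."""
    best = max(cosine_similarity(query_embedding, e) for e in enrolled_embeddings)
    return best >= threshold, best


# Toy 2-D embeddings standing in for encoder outputs.
enrolled = [[1.0, 0.0], [0.9, 0.1]]

accepted, score = detect_keyword([1.0, 0.05], enrolled)
rejected, low_score = detect_keyword([0.0, 1.0], enrolled)
print(accepted, rejected)
```

The threshold trades false accepts against false rejects and would normally be tuned on held-out data.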
