Essaid Sabir

A Green Multi-Attribute Client Selection for Over-The-Air Federated Learning: A Grey-Wolf-Optimizer Approach

Sep 16, 2024

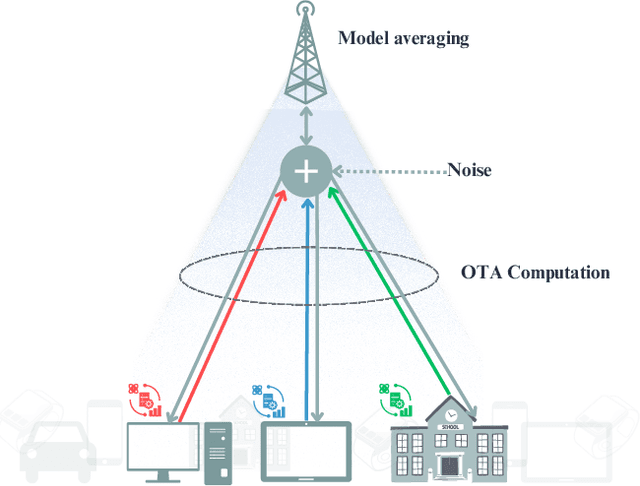

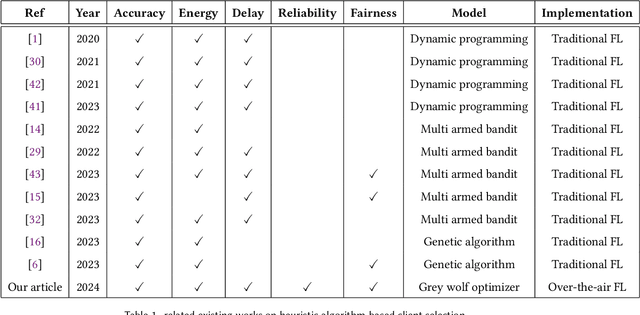

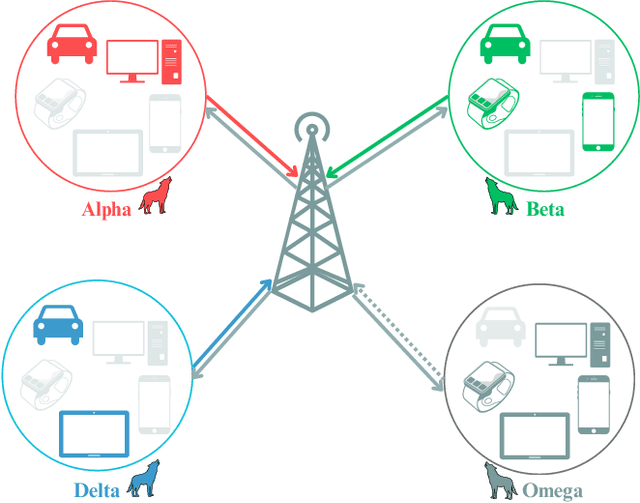

Abstract: Federated Learning (FL) has gained attention across various industries for its capability to train machine learning models without centralizing sensitive data. While this approach offers significant benefits such as privacy preservation and decreased communication overhead, it presents several challenges, including deployment complexity and interoperability issues, particularly in heterogeneous scenarios or resource-constrained environments. Over-the-air (OTA) FL was introduced to tackle these challenges by disseminating model updates without necessitating direct device-to-device connections or centralized servers. However, OTA-FL introduces its own limitations, notably higher energy consumption and network latency. In this paper, we propose a multi-attribute client selection framework employing the grey wolf optimizer (GWO) to strategically control the number of participants in each round and optimize the OTA-FL process while considering the accuracy, energy, delay, reliability, and fairness constraints of participating devices. We evaluate our multi-attribute client selection approach in terms of model loss minimization, convergence time reduction, and energy efficiency, and compare it against existing state-of-the-art methods. Our results demonstrate that the proposed GWO-based client selection outperforms these baselines across various metrics. Specifically, our approach achieves a notable reduction in model loss, accelerates convergence, and enhances energy efficiency while maintaining high fairness and reliability indicators.

Federated Learning for 6G: Paradigms, Taxonomy, Recent Advances and Insights

Dec 07, 2023

Abstract: Artificial Intelligence (AI) is expected to play an instrumental role in the next generation of wireless systems, such as sixth-generation (6G) mobile networks. However, massive data, energy consumption, training complexity, and sensitive data protection in wireless systems are all crucial challenges that must be addressed for training AI models and gathering intelligence and knowledge from distributed devices. Federated Learning (FL) is a recent framework that has emerged as a promising approach for multiple learning agents to build accurate and robust machine learning models without sharing raw data. By allowing mobile handsets and devices to collaboratively learn a global model without explicit sharing of training data, FL exhibits high privacy and efficient spectrum utilization. While many survey papers explore FL paradigms and usability in 6G privacy, none of them has clearly addressed how FL can be used to improve the protocol stack and wireless operations. The main goal of this survey is to provide a comprehensive overview of FL usability to enhance mobile services and enable smart ecosystems to support novel use cases. This paper examines the added value of implementing FL throughout all levels of the protocol stack. Furthermore, it presents important FL applications, addresses hot topics, and provides valuable insights and explicit guidance for future research and developments. Our concluding remarks aim to leverage the synergy between FL and future 6G, while highlighting FL's potential to revolutionize the wireless industry and sustain the development of cutting-edge mobile services.
