Eric Gourdin

Asymptotically Optimal Strategies For Combinatorial Semi-Bandits in Polynomial Time

Feb 14, 2021

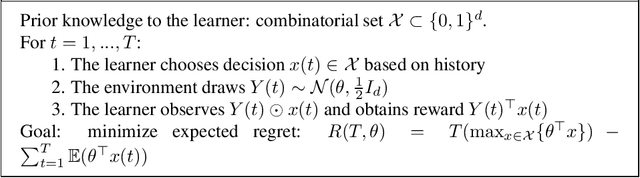

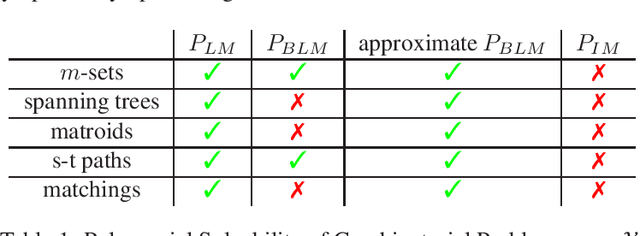

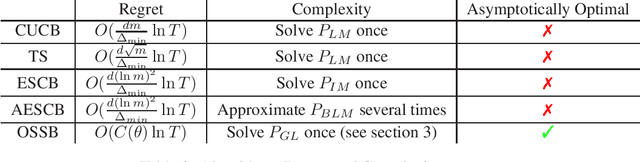

Abstract: We consider combinatorial semi-bandits with uncorrelated Gaussian rewards. In this article, we propose the first method, to the best of our knowledge, that computes the solution of the Graves-Lai optimization problem in polynomial time for many combinatorial structures of interest. In turn, this immediately yields the first known approach to implementing asymptotically optimal algorithms in polynomial time for combinatorial semi-bandits.

Statistically Efficient, Polynomial Time Algorithms for Combinatorial Semi Bandits

Feb 17, 2020

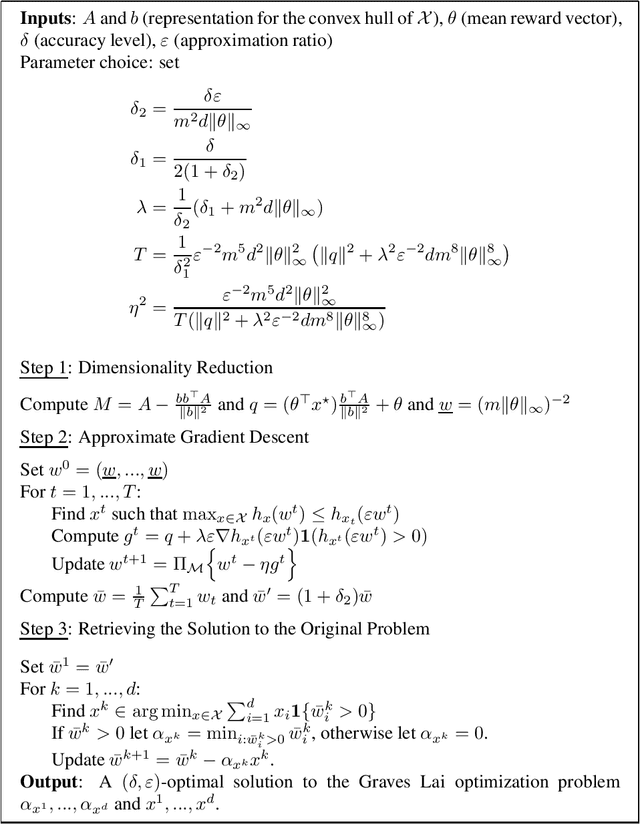

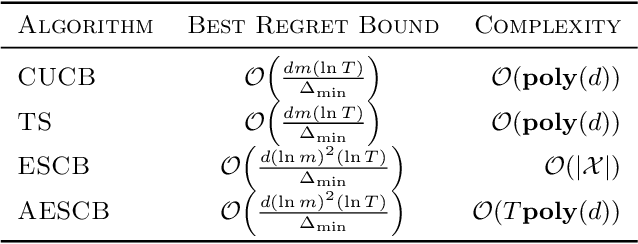

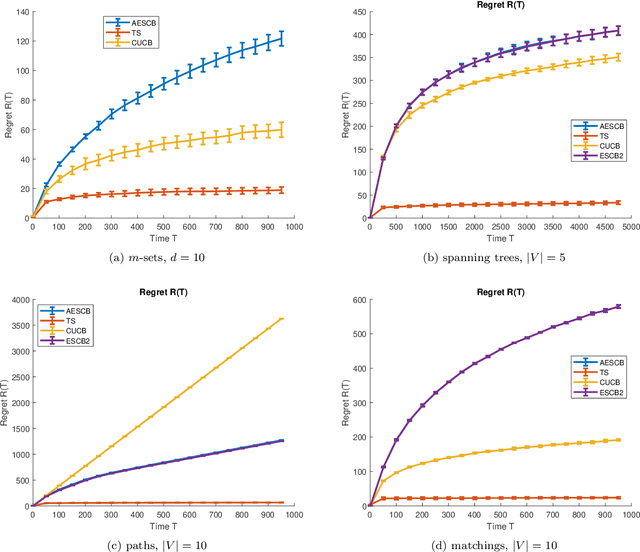

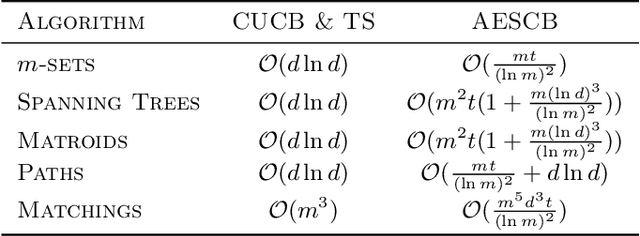

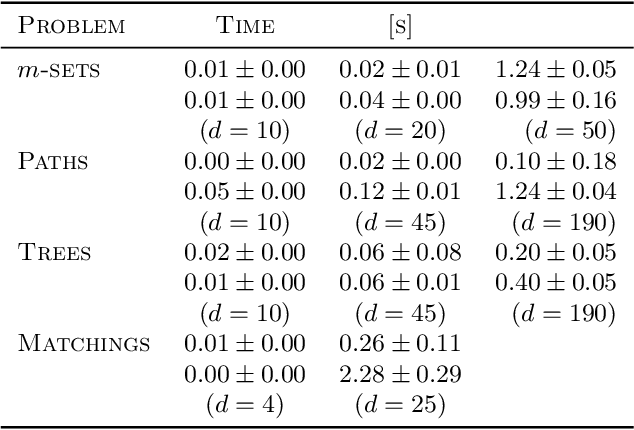

Abstract: We consider combinatorial semi-bandits over a set of arms ${\cal X} \subset \{0,1\}^d$ where rewards are uncorrelated across items. For this problem, the algorithm ESCB yields the smallest known regret bound $R(T) = {\cal O}\Big( {d (\ln m)^2 (\ln T) \over \Delta_{\min} }\Big)$, but it has computational complexity ${\cal O}(|{\cal X}|)$, which is typically exponential in $d$, so it cannot be used in large dimensions. We propose the first algorithm that is both computationally and statistically efficient for this problem, with regret $R(T) = {\cal O} \Big({d (\ln m)^2 (\ln T)\over \Delta_{\min} }\Big)$ and computational complexity ${\cal O}(T {\bf poly}(d))$. Our approach involves carefully designing an approximate version of ESCB with the same regret guarantees, showing that this approximate algorithm can be implemented in time ${\cal O}(T {\bf poly}(d))$ by repeatedly maximizing a linear function over ${\cal X}$ subject to a linear budget constraint, and showing how to solve these maximization problems efficiently.
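To illustrate why a cost of ${\cal O}(|{\cal X}|)$ per round becomes prohibitive, the following minimal sketch enumerates every arm and keeps the one with the largest index. It rests on assumptions that are not stated in the abstract: ${\cal X}$ is taken to be the set of all subsets of exactly $m$ items out of $d$, and the index is assumed to have the form "linear term plus the square root of a linear term". The names `brute_force_index_argmax`, `theta_hat` and `bonus` are hypothetical and chosen for illustration only.

```python
from itertools import combinations
from math import sqrt


def brute_force_index_argmax(theta_hat, bonus, m):
    """Return (arm, value) maximizing sum(theta_hat[i]) + sqrt(sum(bonus[i])).

    Assumptions (illustrative, not taken from the abstract):
      - arms are all subsets of exactly m items out of d = len(theta_hat);
      - theta_hat[i] is an estimated mean reward for item i;
      - bonus[i] >= 0 is an exploration term for item i.
    """
    d = len(theta_hat)
    best_arm, best_value = None, float("-inf")
    # This loop visits all C(d, m) arms, which grows exponentially with d.
    for arm in combinations(range(d), m):
        value = sum(theta_hat[i] for i in arm) + sqrt(sum(bonus[i] for i in arm))
        if value > best_value:
            best_arm, best_value = arm, value
    return best_arm, best_value


if __name__ == "__main__":
    arm, value = brute_force_index_argmax([0.5, 0.4, 0.1], [0.0, 0.0, 1.0], m=2)
    print(arm, value)
```

With $d = 3$ and $m = 2$ the loop visits only three arms, but for $d = 100$ and $m = 50$ it would visit roughly $10^{29}$, which is the kind of cost that a polynomial-time approach is meant to avoid.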
