Ercan Karaarslan

Mammographic Breast Positioning Assessment via Deep Learning

Jul 15, 2024

Abstract: Breast cancer remains a leading cause of cancer-related deaths among women worldwide, with mammography screening as the most effective method for early detection. Ensuring proper positioning in mammography is critical, as poor positioning can lead to diagnostic errors, increased patient stress, and higher costs due to recalls. Despite advancements in deep learning (DL) for breast cancer diagnostics, limited focus has been given to evaluating mammography positioning. This paper introduces a novel DL methodology to quantitatively assess mammogram positioning quality, specifically in mediolateral oblique (MLO) views, using attention and coordinate convolution modules. Our method identifies key anatomical landmarks, such as the nipple and pectoralis muscle, and automatically draws a posterior nipple line (PNL), offering a robust and inherently explainable alternative to well-known classification and regression-based approaches. We compare the performance of the proposed methodology with various regression and classification-based models. The CoordAtt UNet model achieved the highest accuracy of 88.63% $\pm$ 2.84 and specificity of 90.25% $\pm$ 4.04, along with a noteworthy sensitivity of 86.04% $\pm$ 3.41. In landmark detection, the same model also recorded the lowest mean errors in key anatomical points and the smallest angular error of 2.42 degrees. Our results indicate that models incorporating attention mechanisms and the CoordConv module increase the accuracy of classifying breast positioning quality and detecting anatomical landmarks. Furthermore, we make the labels and source code available to the community to initiate an open research area for mammography, accessible at https://github.com/tanyelai/deep-breast-positioning.
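
To make the landmark-based idea concrete, the following is a minimal geometric sketch, not the authors' implementation. It assumes the pectoralis muscle edge is summarized by two landmark points and that the PNL is the perpendicular dropped from the nipple onto that edge, as is conventional for MLO views. The function names `posterior_nipple_line` and `angular_error_deg`, and the exact definition of angular error, are hypothetical and chosen only for illustration.

```python
import math


def posterior_nipple_line(nipple, pec_a, pec_b):
    """Drop a perpendicular from the nipple onto the pectoral line.

    Assumption: the pectoral muscle edge is approximated by the straight
    line through the two landmarks pec_a and pec_b (pixel coordinates).
    Returns the foot of the perpendicular and the PNL length.
    """
    (nx, ny), (ax, ay), (bx, by) = nipple, pec_a, pec_b
    dx, dy = bx - ax, by - ay
    norm_sq = dx * dx + dy * dy
    if norm_sq == 0:
        raise ValueError("pectoral landmarks must be distinct")
    # Scalar projection of the nipple onto the pectoral line.
    t = ((nx - ax) * dx + (ny - ay) * dy) / norm_sq
    foot = (ax + t * dx, ay + t * dy)
    length = math.hypot(nx - foot[0], ny - foot[1])
    return foot, length


def angular_error_deg(pred_a, pred_b, true_a, true_b):
    """Smallest angle, in degrees, between two undirected lines.

    Assumption: this is one plausible way to score a predicted line
    against an annotated one; the paper's exact metric may differ.
    """
    ang_pred = math.atan2(pred_b[1] - pred_a[1], pred_b[0] - pred_a[0])
    ang_true = math.atan2(true_b[1] - true_a[1], true_b[0] - true_a[0])
    diff = abs(ang_pred - ang_true) % math.pi
    return math.degrees(min(diff, math.pi - diff))


if __name__ == "__main__":
    foot, length = posterior_nipple_line((0, 0), (3, -5), (3, 5))
    print(foot, length)
```

With the toy landmarks above, the pectoral line is the vertical line x = 3, so the perpendicular from the nipple at the origin meets it at (3.0, 0.0) and the PNL length is 3.0 pixels. Because the output is a drawn line rather than a class label, a reader can inspect it directly, which is the sense in which the abstract calls the approach inherently explainable.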

Prostate Lesion Estimation using Prostate Masks from Biparametric MRI

Jan 11, 2023

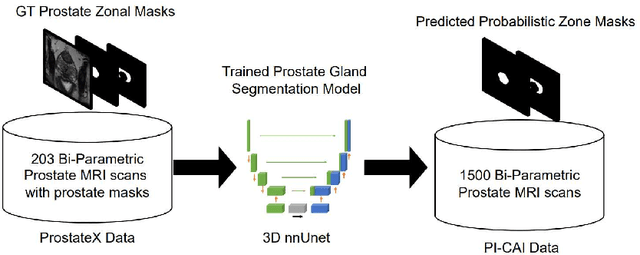

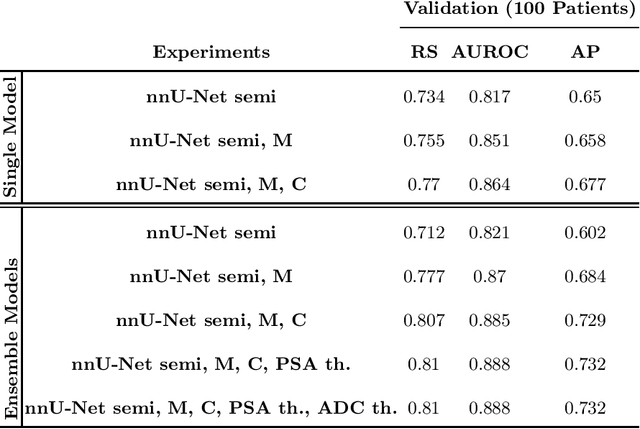

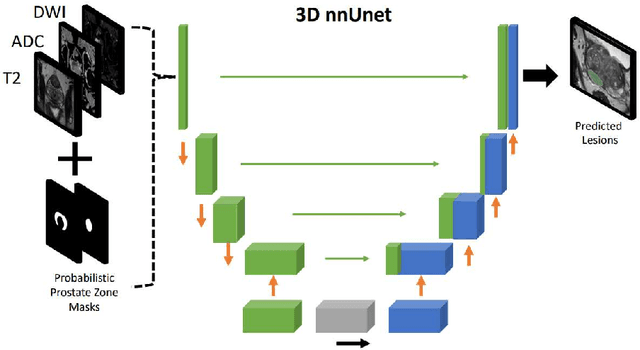

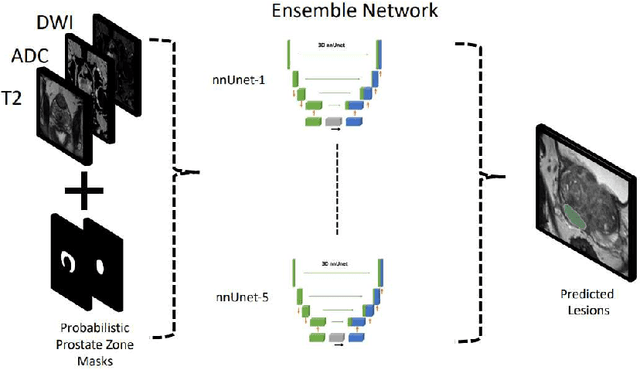

Abstract: Biparametric MRI has emerged as an alternative to multiparametric prostate MRI that avoids the potential harm to the patient from the contrast medium. One major issue with biparametric MRI is the difficulty of detecting clinically significant prostate cancer (csPCA). Deep learning algorithms have emerged as an alternative solution for detecting csPCA in cohort studies. We present a workflow that predicts csPCA on biparametric prostate MRI from the PI-CAI 2022 Challenge, which comprises over 10,000 carefully curated prostate MRI exams. We propose to first segment the prostate gland into the central gland (transition + central zone) and the peripheral gland. We then use these predictions in combination with T2, ADC, and DWI images to train an ensemble nnU-Net model. Finally, we use clinical PSA indices and the ADC intensity distributions of lesion regions to reduce false positives. Our method achieves top results in the open-validation stage, with an AUROC of 0.888 and an AP of 0.732.

Shifted Windows Transformers for Medical Image Quality Assessment

Aug 11, 2022

Abstract: To maintain a standard in a medical imaging study, images should have the image quality necessary for potential diagnostic use. Although CNN-based approaches are used to assess image quality, their accuracy can still be improved. In this work, we approach this problem with the Swin Transformer, which improves the classification of the poor-quality images that degrade medical image quality. We test our approach on the Foreign Object Classification problem on Chest X-Rays (Object-CXR) and the Left Ventricular Outflow Tract Classification problem on Cardiac MRI with a four-chamber view (LVOT). We obtain classification accuracies of 87.1% and 95.48% on the Object-CXR and LVOT datasets, respectively; our experimental results suggest that the Swin Transformer improves Object-CXR classification performance while achieving comparable performance on the LVOT dataset. To the best of our knowledge, our study is the first vision transformer application for medical image quality assessment.
