Enrique De Miguel Ambite

Uncertainty Quantification for Transformer Models for Dark-Pattern Detection

Dec 06, 2024

Abstract: The opaque nature of transformer-based models, particularly in applications susceptible to unethical practices such as dark patterns in user interfaces, calls for models that integrate uncertainty quantification to enhance trust in their predictions. This study focuses on detecting dark patterns: deceptive design choices that manipulate user decisions and undermine autonomy and consent. We propose a differential fine-tuning approach, implemented at the final classification head, that adds uncertainty quantification to transformer-based pre-trained models. Using a dense neural network (DNN) head as a baseline, we examine two head architectures capable of quantifying uncertainty: Spectral-normalized Neural Gaussian Processes (SNGPs) and Bayesian Neural Networks (BNNs). These methods are evaluated on a set of open-source foundational models along three dimensions: model performance, variance in the certainty of predictions, and environmental impact during the training and inference phases. Results show that integrating uncertainty quantification maintains performance while revealing which instances the models find challenging. Moreover, the environmental impact does not uniformly increase when uncertainty quantification techniques are incorporated. The findings indicate that uncertainty quantification enhances transparency and provides measurable confidence in predictions, improving the explainability and clarity of black-box models. This facilitates informed decision-making and mitigates the influence of dark patterns in user interfaces. These results highlight the importance of incorporating uncertainty quantification when developing machine learning models, particularly in domains where interpretability and trustworthiness are critical.
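As a rough illustration of how an uncertainty-aware head can surface challenging instances, the sketch below averages class probabilities over several stochastic forward passes and scores the result with predictive entropy. It is not the paper's implementation: the function names and the sample probabilities are hypothetical, and a BNN or SNGP head would supply the per-pass probabilities in practice.

```python
import math

def mean_probs(samples):
    """Average class probabilities over several stochastic forward passes."""
    n = len(samples)
    k = len(samples[0])
    return [sum(s[c] for s in samples) / n for c in range(k)]

def predictive_entropy(probs):
    """Shannon entropy (in nats) of a probability vector; higher means less certain."""
    return -sum(p * math.log(p) for p in probs if p > 0.0)

# Hypothetical per-pass probabilities for two inputs (illustrative values only).
# Each inner list is [P(not dark pattern), P(dark pattern)] from one pass.
confident = [[0.97, 0.03], [0.95, 0.05], [0.98, 0.02]]
ambiguous = [[0.90, 0.10], [0.20, 0.80], [0.55, 0.45]]

h_confident = predictive_entropy(mean_probs(confident))
h_ambiguous = predictive_entropy(mean_probs(ambiguous))

print(f"confident input entropy: {h_confident:.3f}")
print(f"ambiguous input entropy: {h_ambiguous:.3f}")
```

Inputs whose passes disagree end up with an averaged distribution closer to uniform and therefore a higher entropy, which is one simple way to flag predictions for manual review.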

DETECTA 2.0: Research into non-intrusive methodologies supported by Industry 4.0 enabling technologies for predictive and cyber-secure maintenance in SMEs

May 24, 2024

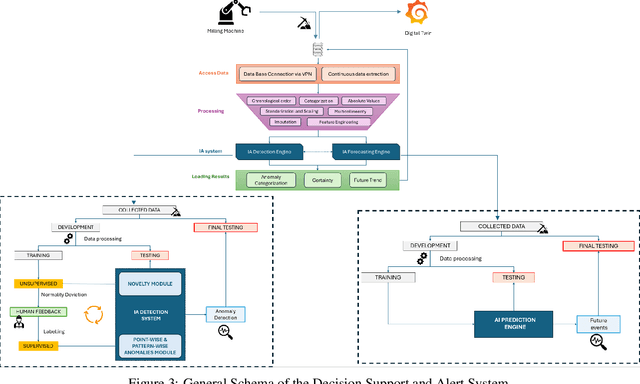

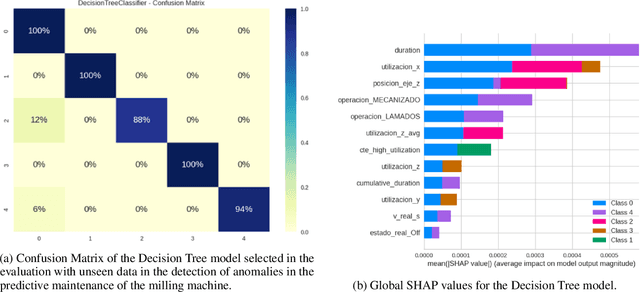

Abstract: The integration of predictive maintenance and cybersecurity represents a transformative advancement for small and medium-sized enterprises (SMEs) operating within the Industry 4.0 paradigm. Despite their economic importance, SMEs often face significant challenges in adopting advanced technologies due to resource constraints and knowledge gaps. The DETECTA 2.0 project addresses these hurdles by developing a system that combines real-time anomaly detection, analytics, and predictive forecasting. The system employs a semi-supervised methodology, combining unsupervised anomaly detection with supervised learning techniques. This approach enables more agile and cost-effective development of AI detection systems, significantly reducing the time required for manual case review. At the core lies a Digital Twin interface, providing intuitive real-time visualizations of machine states and detected anomalies. The system's AI engines categorize anomalies based on observed patterns, differentiating between technical errors and potential cybersecurity incidents. This categorization is supported by detailed analytics, including certainty levels that enhance alert reliability and minimize false positives. The predictive engine uses time series algorithms such as N-HiTS to forecast future machine utilization trends. This proactive approach optimizes maintenance planning, enhances cybersecurity measures, and minimizes unplanned downtime despite variable production processes. With a modular architecture that enables integration across industrial setups and low implementation costs, DETECTA 2.0 presents an attractive solution for SMEs seeking to strengthen their predictive maintenance and cybersecurity strategies.
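The semi-supervised idea described above can be sketched in two stages: an unsupervised step flags unusual readings, and a supervised step labels only the flagged ones using a handful of manually reviewed cases. The example below is a toy stand-in under stated assumptions, not the DETECTA 2.0 system: it uses a z-score rule and a nearest-centroid classifier on a single made-up sensor feature, and every name, threshold, and value is hypothetical.

```python
import statistics

def flag_anomalies(readings, threshold=2.0):
    """Unsupervised step: indices of readings more than `threshold`
    population standard deviations away from the mean."""
    mu = statistics.fmean(readings)
    sigma = statistics.pstdev(readings)
    if sigma == 0:
        return []
    return [i for i, x in enumerate(readings) if abs(x - mu) / sigma > threshold]

def nearest_centroid(labeled, value):
    """Supervised step: return the label whose mean reviewed value is closest."""
    centroids = {label: statistics.fmean(vals) for label, vals in labeled.items()}
    return min(centroids, key=lambda label: abs(centroids[label] - value))

# Hypothetical sensor readings (illustrative values only).
readings = [10.1, 9.8, 10.0, 10.3, 9.9, 10.2, 25.0, 10.0, 9.7, -4.0, 10.1, 10.0]

# Hypothetical manually reviewed cases, used as the small labeled set.
reviewed = {
    "technical_error": [-5.0, -3.5, -4.2],
    "possible_cyber_incident": [24.0, 26.5, 23.0],
}

flagged = flag_anomalies(readings)
labels = {i: nearest_centroid(reviewed, readings[i]) for i in flagged}
print(labels)
```

Only the flagged readings reach the classifier, which reflects why this kind of pipeline can reduce manual case review: reviewers label a few anomalies rather than the whole stream.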
