Emrecan Kutay

Semantic Forwarding for Next Generation Relay Networks

Jan 30, 2024

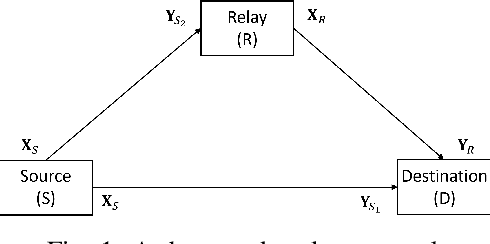

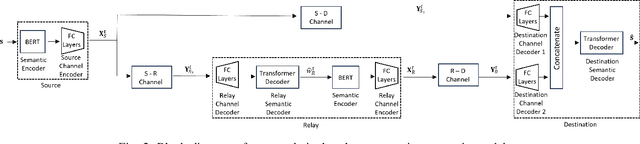

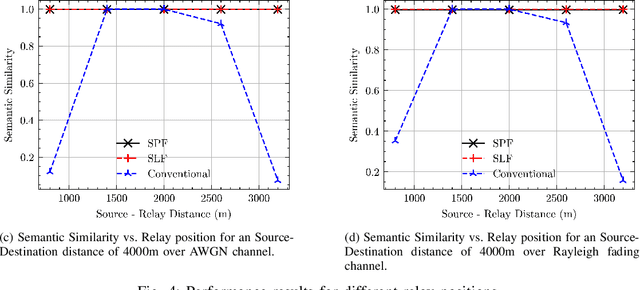

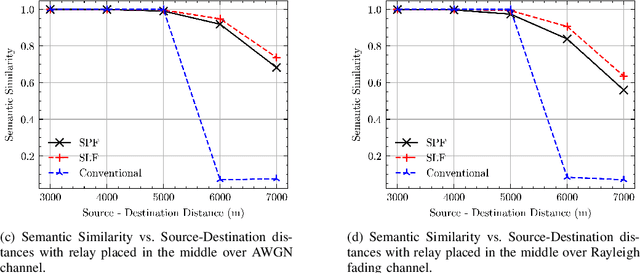

Abstract: We consider cooperative semantic text communications facilitated by a relay node. We propose two types of semantic forwarding: semantic lossy forwarding (SLF) and semantic predict-and-forward (SPF). Both are machine-learning-aided approaches that use attention mechanisms at the relay to establish a dynamic semantic state, which is updated each time a new source signal is received. In the SLF model, the semantic state is used to decode the received source signal, whereas in the SPF model it is used to predict the next source signal, enabling proactive forwarding. The proposed forwarding schemes do not need any channel state information and exhibit consistent performance regardless of the relay's position. Our results demonstrate that the proposed semantic forwarding techniques outperform conventional semantic-agnostic baselines.
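
The abstract does not specify how the relay's attention mechanism is built, so the following is only a minimal sketch of one way a "semantic state" could be refreshed whenever a new source signal arrives. It assumes received signals are already fixed-length embedding vectors and uses plain scaled dot-product attention with the newest signal as the query. The names `update_state`, `history`, and `new_signal` are hypothetical and are not taken from the paper.

```python
import numpy as np

def softmax(x):
    # Numerically stable softmax over a 1-D array.
    z = np.exp(x - np.max(x))
    return z / z.sum()

def update_state(history, new_signal):
    """Hypothetical relay update (an assumption, not the paper's model).

    history:    (t, d) array of previously received embeddings (t may be 0)
    new_signal: (d,) embedding of the newly received source signal
    Returns the attention-weighted state and the extended memory.
    """
    memory = np.vstack([history, new_signal])          # (t + 1, d)
    # Scaled dot-product scores, with the newest signal as the query.
    scores = memory @ new_signal / np.sqrt(new_signal.shape[0])
    weights = softmax(scores)                          # sums to 1
    state = weights @ memory                           # (d,) semantic state
    return state, memory

# Toy usage with two 3-dimensional "signals".
state1, memory1 = update_state(np.zeros((0, 3)), np.array([1.0, 0.0, 0.0]))
state2, memory2 = update_state(memory1, np.array([0.0, 1.0, 0.0]))
```

In this sketch an SLF-style relay would pass the state to a decoder for the current signal, while an SPF-style relay would pass it to a predictor for the next one; both downstream components are left out because the abstract gives no detail on them.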

Semantic Text Compression for Classification

Sep 19, 2023

Abstract: We study semantic compression for text, where the meanings contained in the text are conveyed to a source decoder, e.g., for classification. The main motivation for recovering the meaning without requiring exact reconstruction is the potential resource savings, both in storage and in conveying the information to another node. To this end, we propose semantic quantization and compression approaches for text that use sentence embeddings and a semantic distortion metric to preserve the meaning. Our results demonstrate that the proposed semantic approaches yield substantial (orders of magnitude) savings in the number of bits required for message representation, at the expense of a very modest accuracy loss compared to the semantic-agnostic baseline. Comparing the proposed approaches, we observe that the resource savings enabled by semantic quantization can be further amplified by semantic clustering. Importantly, we observe that the proposed methodology generalizes well, producing excellent results on many benchmark text classification datasets with a diverse array of contexts.
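
To make the idea of quantizing a sentence embedding concrete, here is a minimal sketch, not the authors' implementation. It assumes a codebook of representative embeddings is already available, assumes cosine similarity as the semantic distortion measure, and transmits only the index of the closest codeword. The functions `nearest_codeword` and `bits_per_message` and the toy 2-dimensional codebook are hypothetical.

```python
import math
import numpy as np

def nearest_codeword(embedding, codebook):
    # Assumption: semantic closeness is measured by cosine similarity.
    e = embedding / np.linalg.norm(embedding)
    c = codebook / np.linalg.norm(codebook, axis=1, keepdims=True)
    return int(np.argmax(c @ e))

def bits_per_message(codebook_size):
    # A fixed-length index into a codebook of K entries needs ceil(log2 K) bits.
    return math.ceil(math.log2(codebook_size))

# Toy codebook with four 2-D codewords (real sentence embeddings are
# typically hundreds of dimensions; this is illustrative only).
codebook = np.array([[1.0, 0.0], [0.0, 1.0], [-1.0, 0.0], [0.0, -1.0]])
embedding = np.array([0.9, 0.2])

index = nearest_codeword(embedding, codebook)
bits = bits_per_message(len(codebook))
```

The receiver would look up `codebook[index]` and classify that codeword instead of the original text, so the cost per message depends on the codebook size rather than on the length of the sentence.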
