Elvio G. Amparore

ShapBPT: Image Feature Attributions Using Data-Aware Binary Partition Trees

Feb 04, 2026

Abstract: Pixel-level feature attributions are an important tool in eXplainable AI for Computer Vision (XCV), providing visual insights into how image features influence model predictions. The Owen formula for hierarchical Shapley values has been widely used to interpret machine learning (ML) models and their learned representations. However, existing hierarchical Shapley approaches do not exploit the multiscale structure of image data, leading to slow convergence and weak alignment with the actual morphological features. Moreover, no prior Shapley method has leveraged data-aware hierarchies for Computer Vision tasks, leaving a gap in model interpretability of structured visual data. To address this, this paper introduces ShapBPT, a novel data-aware XCV method based on the hierarchical Shapley formula. ShapBPT assigns Shapley coefficients to a multiscale hierarchical structure tailored for images, the Binary Partition Tree (BPT). By using this data-aware hierarchical partitioning, ShapBPT ensures that feature attributions align with intrinsic image morphology, effectively prioritizing relevant regions while reducing computational overhead. This advancement connects hierarchical Shapley methods with image data, providing a more efficient and semantically meaningful approach to visual interpretability. Experimental results confirm ShapBPT's effectiveness, demonstrating superior alignment with image structures and improved efficiency over existing XCV methods, and a 20-subject user study confirms that ShapBPT explanations are preferred by humans.
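One building block of hierarchical Shapley attribution on a binary tree is splitting a parent region's contribution between its two children. The sketch below shows only that single step, using the standard two-player Shapley formula. The function names, the `context` argument, and the toy value function are illustrative assumptions, not ShapBPT's actual implementation.

```python
from typing import Callable, FrozenSet, Tuple

Region = FrozenSet[int]


def split_between_children(
    v: Callable[[Region], float],
    context: Region,
    left: Region,
    right: Region,
) -> Tuple[float, float]:
    """Two-player Shapley split of a parent's contribution.

    `v` is a hypothetical value function (model output when only the given
    pixels are visible); `context` is the set of pixels already visible.
    """
    base = v(context)
    full = v(context | left | right)
    with_left = v(context | left)
    with_right = v(context | right)
    # Average each child's marginal contribution over both join orders.
    phi_left = 0.5 * ((with_left - base) + (full - with_right))
    phi_right = 0.5 * ((with_right - base) + (full - with_left))
    return phi_left, phi_right


def toy_value(pixels: Region) -> float:
    """Hypothetical model: pixels 0 and 1 interact, pixel 2 acts alone."""
    return (
        1.0 * (0 in pixels)
        + 2.0 * (2 in pixels)
        + 3.0 * (0 in pixels and 1 in pixels)
    )


phi_left, phi_right = split_between_children(
    toy_value, frozenset(), frozenset({0, 1}), frozenset({2, 3})
)
```

The two shares always sum to the parent's total contribution, `v(context | left | right) - v(context)`, which is the efficiency property that makes recursive refinement down a partition tree consistent.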

Using Stratified Sampling to Improve LIME Image Explanations

Mar 26, 2024

Abstract: We investigate the use of a stratified sampling approach for LIME Image, a popular model-agnostic explainable AI method for computer vision tasks, in order to reduce the artifacts generated by typical Monte Carlo sampling. Such artifacts are due to the undersampling of the dependent variable in the synthetic neighborhood around the image being explained, which may result in inadequate explanations due to the impossibility of fitting a linear regressor on the sampled data. We then highlight a connection with the Shapley theory, where similar arguments about undersampling and sample relevance were suggested in the past. We derive all the formulas and adjustment factors required for an unbiased stratified sampling estimator. Experiments show the efficacy of the proposed approach.
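To make the contrast between the two sampling schemes concrete, here is a minimal sketch. It assumes that strata are defined by the number of active superpixels in a mask and that the Monte Carlo baseline switches each superpixel on with probability 0.5. The function names and the binomial stratum weight are illustrative textbook choices, not the formulas or adjustment factors derived in the paper.

```python
import random
from math import comb
from typing import List


def monte_carlo_masks(n_features: int, n_samples: int, rng: random.Random) -> List[List[int]]:
    """Baseline: each superpixel is independently on or off with probability 0.5."""
    return [[rng.randint(0, 1) for _ in range(n_features)] for _ in range(n_samples)]


def stratified_masks(n_features: int, per_stratum: int, rng: random.Random) -> List[List[int]]:
    """Draw the same number of masks from every stratum k = number of active superpixels."""
    masks = []
    for k in range(n_features + 1):
        for _ in range(per_stratum):
            active = set(rng.sample(range(n_features), k))
            masks.append([1 if i in active else 0 for i in range(n_features)])
    return masks


def stratum_weight(n_features: int, k: int) -> float:
    """Probability that a uniformly random mask has exactly k active superpixels."""
    return comb(n_features, k) / 2 ** n_features


rng = random.Random(0)
masks = stratified_masks(n_features=8, per_stratum=3, rng=rng)
```

Under uniform Monte Carlo sampling, masks with very few or very many active superpixels are rare (for 8 superpixels, the all-off mask has probability 1/256), so those strata tend to be undersampled. Sampling every stratum equally and reweighting by `stratum_weight` is one standard way to keep an estimate of the mean unbiased.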

Streamlining models with explanations in the learning loop

Feb 15, 2023

Abstract: Several explainable AI methods allow a Machine Learning user to get insights on the classification process of a black-box model in the form of local linear explanations. With such information, the user can judge which features are locally relevant for the classification outcome, and get an understanding of how the model reasons. Standard supervised learning processes are purely driven by the original features and target labels, without any feedback loop informed by the local relevance of the features identified by the post-hoc explanations. In this paper, we exploit this newly obtained information to design a feature engineering phase, where we combine explanations with feature values. To do so, we develop two different strategies, named Iterative Dataset Weighting and Targeted Replacement Values, which generate streamlined models that better mimic the explanation process presented to the user. We show how these streamlined models compare to the original black-box classifiers, in terms of accuracy and compactness of the newly produced explanations.

* 16 pages, 10 figures, available repository

To trust or not to trust an explanation: using LEAF to evaluate local linear XAI methods

Jun 01, 2021

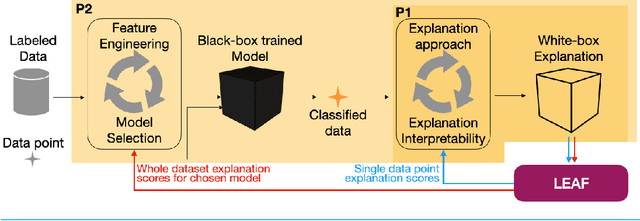

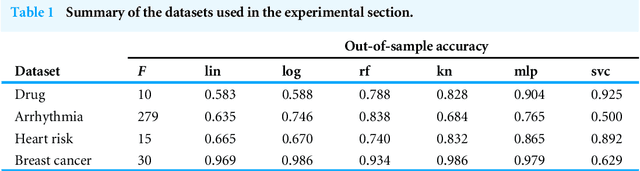

Abstract: The main objective of eXplainable Artificial Intelligence (XAI) is to provide effective explanations for black-box classifiers. The existing literature lists many desirable properties for explanations to be useful, but there is no consensus on how to quantitatively evaluate explanations in practice. Moreover, explanations are typically used only to inspect black-box models, and the proactive use of explanations as a decision support is generally overlooked. Among the many approaches to XAI, a widely adopted paradigm is Local Linear Explanations, with LIME and SHAP emerging as state-of-the-art methods. We show that these methods are plagued by many defects including unstable explanations, divergence of actual implementations from the promised theoretical properties, and explanations for the wrong label. This highlights the need to have standard and unbiased evaluation procedures for Local Linear Explanations in the XAI field. In this paper we address the problem of identifying a clear and unambiguous set of metrics for the evaluation of Local Linear Explanations. This set includes both existing and novel metrics defined specifically for this class of explanations. All metrics have been included in an open Python framework, named LEAF. The purpose of LEAF is to provide a reference for end users to evaluate explanations in a standardised and unbiased way, and to guide researchers towards developing improved explainable techniques.

* 16 pages, 8 figures
