Eleni Manis

Towards Substantive Conceptions of Algorithmic Fairness: Normative Guidance from Equal Opportunity Doctrines

Jul 11, 2022

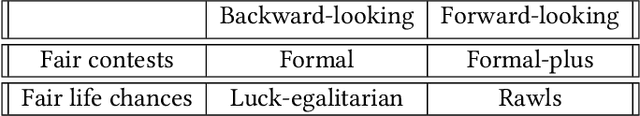

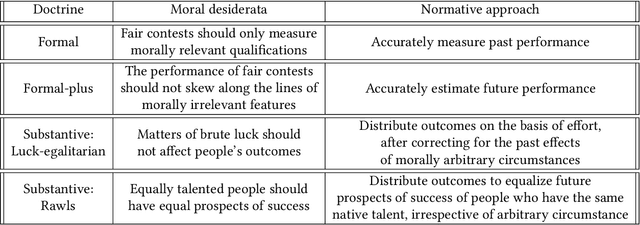

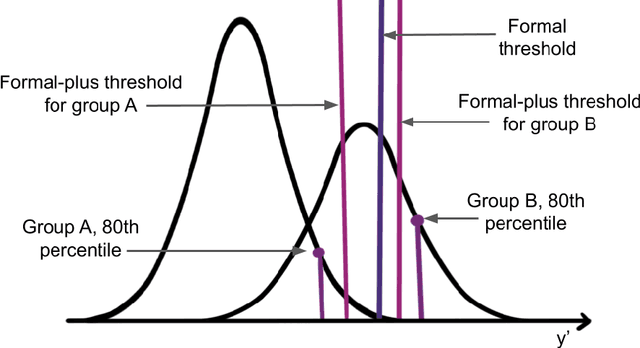

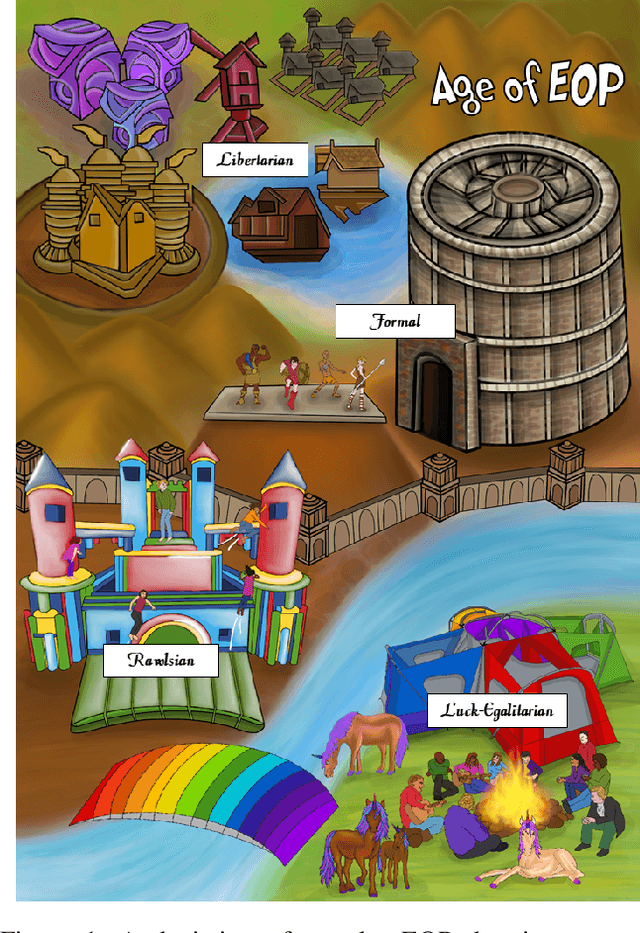

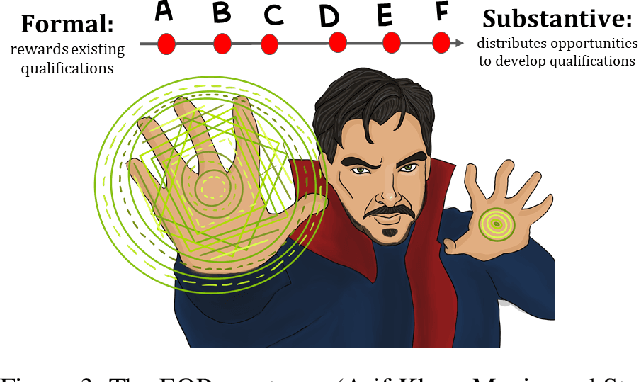

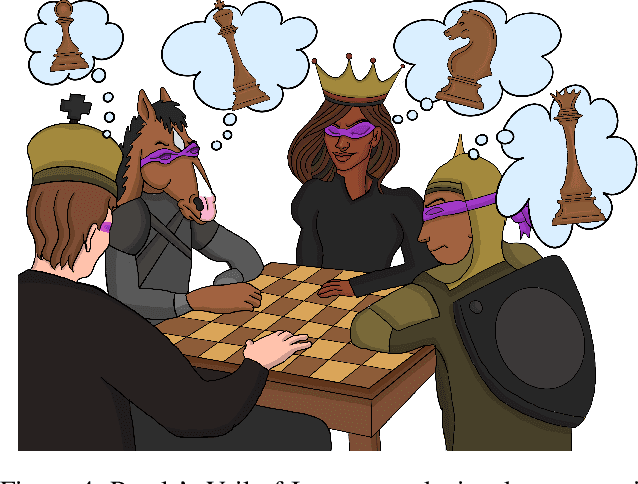

Abstract: In this work we use Equal Opportunity (EO) doctrines from political philosophy to make explicit the normative judgements embedded in different conceptions of algorithmic fairness. We contrast formal EO approaches, which focus narrowly on fair contests at discrete decision points, with substantive EO doctrines, which look at people's fair life chances more holistically over the course of a lifetime. We use this taxonomy to provide a moral interpretation of the impossibility results as the incompatibility between different conceptions of a fair contest (forward-looking versus backward-looking) when people do not have fair life chances. We use this result to motivate substantive conceptions of algorithmic fairness and outline two plausible procedures based on the luck-egalitarian doctrine of EO and Rawls's principle of fair equality of opportunity.

Fairness as Equality of Opportunity: Normative Guidance from Political Philosophy

Jun 15, 2021

Abstract: Recent interest in codifying fairness in Automated Decision Systems (ADS) has resulted in a wide range of formulations of what it means for an algorithmic system to be fair. Most of these propositions are inspired by, but inadequately grounded in, political philosophy scholarship. This paper aims to correct that deficit. We introduce a taxonomy of fairness ideals using doctrines of Equality of Opportunity (EOP) from political philosophy, clarifying their conceptions in philosophy and the proposed codification in fair machine learning. We arrange these fairness ideals onto an EOP spectrum, which serves as a useful frame to guide the design of a fair ADS in a given context. We use our fairness-as-EOP framework to re-interpret the impossibility results from a philosophical perspective, as the incompatibility between different value systems, and demonstrate the utility of the framework with several real-world and hypothetical examples. Through our EOP framework we hope to answer what it means for an ADS to be fair from a moral and political philosophy standpoint, and to pave the way for similar scholarship from ethics and legal experts.
