Elena Shipunova

Knowledge Distillation for Brain Tumor Segmentation

Feb 10, 2020

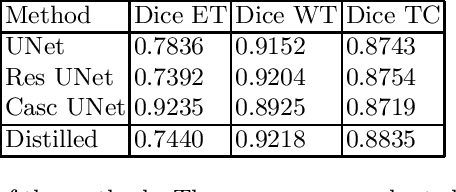

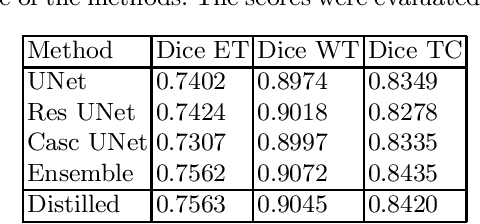

Abstract: The segmentation of brain tumors in multimodal MRIs is one of the most challenging tasks in medical image analysis. Recent state-of-the-art algorithms for this task are based on machine learning, and on deep learning in particular. The amount and variability of the training data are key to building an algorithm with high representational power. In this paper, we study the relationship between model performance and the amount of data used during training. Using the brain tumor segmentation challenge as an example, we compare a model trained on the labeled data provided by the challenge organizers with the same model trained in an omni-supervised manner on additional unlabeled data annotated by an ensemble of heterogeneous models. As a result, a single model trained with the additional data achieves performance close to that of the ensemble of multiple models and outperforms the individual methods.
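The omni-supervised setup described in the abstract can be illustrated with a minimal sketch. It assumes that the ensemble annotates an unlabeled scan by averaging per-model class probabilities and taking the voxel-wise argmax; the abstract does not specify the aggregation rule, so this is only one plausible choice. The function names `ensemble_pseudo_labels` and `build_training_set` are hypothetical, as is the convention that each model is a callable returning a probability array of shape `(classes, depth, height, width)`.

```python
import numpy as np


def ensemble_pseudo_labels(prob_maps):
    """Turn per-model probability maps into one pseudo-label volume.

    prob_maps: list of arrays, each of shape (C, D, H, W), holding the
    class probabilities predicted by one model of the ensemble.
    Assumption: probabilities are averaged across models; the source
    does not state how the ensemble predictions are combined.
    """
    stacked = np.stack(prob_maps, axis=0)   # (M, C, D, H, W)
    mean_probs = stacked.mean(axis=0)       # (C, D, H, W)
    return mean_probs.argmax(axis=0)        # (D, H, W) integer labels


def build_training_set(labeled, unlabeled_scans, models):
    """Extend the labeled set with ensemble-annotated unlabeled scans.

    labeled: iterable of (scan, label) pairs from the challenge data.
    unlabeled_scans: iterable of scans without annotations.
    models: hypothetical callables mapping a scan to a (C, D, H, W) array.
    """
    pseudo = [
        (scan, ensemble_pseudo_labels([model(scan) for model in models]))
        for scan in unlabeled_scans
    ]
    return list(labeled) + pseudo
```

A single student model would then be trained on the output of `build_training_set` in the same way as on the original labeled data.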
