Elena Merdjanovska

Self-Aware Knowledge Probing: Evaluating Language Models' Relational Knowledge through Confidence Calibration

Jan 26, 2026

Abstract: Knowledge probing quantifies how much relational knowledge a language model (LM) has acquired during pre-training. Existing knowledge probes evaluate model capabilities through metrics like prediction accuracy and precision. Such evaluations fail to account for the model's reliability, reflected in the calibration of its confidence scores. In this paper, we propose a novel calibration probing framework for relational knowledge, covering three modalities of model confidence: (1) intrinsic confidence, (2) structural consistency and (3) semantic grounding. Our extensive analysis of ten causal and six masked language models reveals that most models, especially those pre-trained with the masking objective, are overconfident. The best-calibrated scores come from confidence estimates that account for inconsistencies due to statement rephrasing. Moreover, even the largest pre-trained models fail to encode the semantics of linguistic confidence expressions accurately.

NoiseBench: Benchmarking the Impact of Real Label Noise on Named Entity Recognition

May 13, 2024

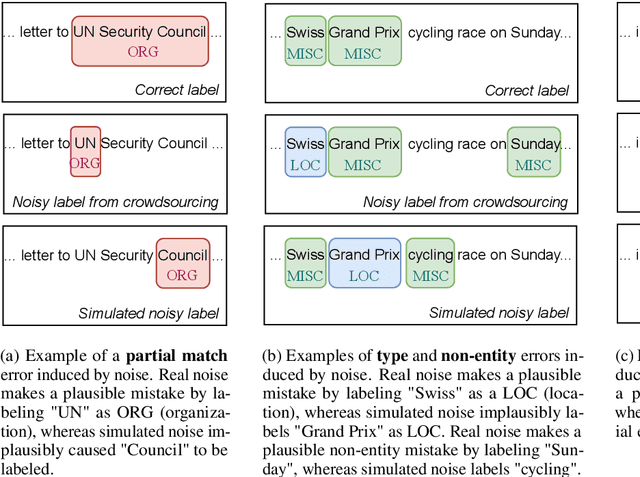

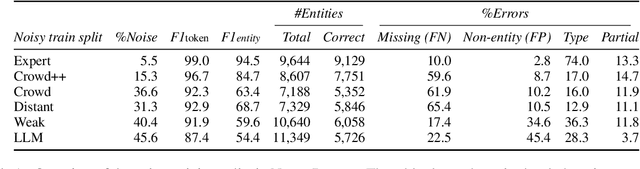

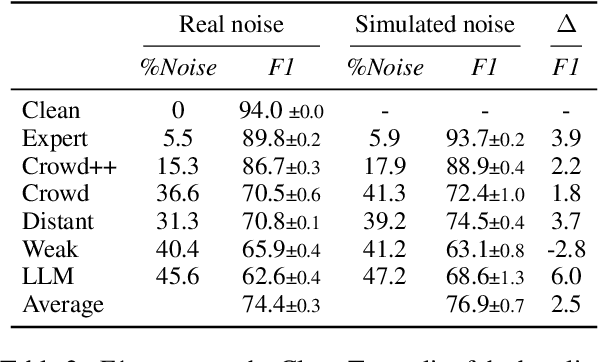

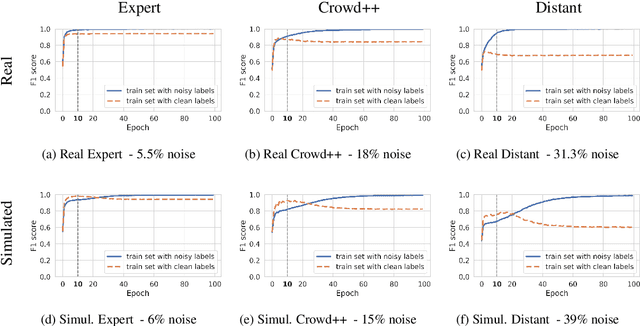

Abstract: Available training data for named entity recognition (NER) often contains a significant percentage of incorrect labels for entity types and entity boundaries. Such label noise poses challenges for supervised learning and may significantly deteriorate model quality. To address this, prior work proposed various noise-robust learning approaches capable of learning from data with partially incorrect labels. These approaches are typically evaluated using simulated noise where the labels in a clean dataset are automatically corrupted. However, as we show in this paper, this leads to unrealistic noise that is far easier to handle than real noise caused by human error or semi-automatic annotation. To enable the study of the impact of various types of real noise, we introduce NoiseBench, an NER benchmark consisting of clean training data corrupted with 6 types of real noise, including expert errors, crowdsourcing errors, automatic annotation errors and LLM errors. We present an analysis that shows that real noise is significantly more challenging than simulated noise, and show that current state-of-the-art models for noise-robust learning fall far short of their theoretically achievable upper bound. We release NoiseBench to the research community.
