Ekta Gavas

Enhancement-Driven Pretraining for Robust Fingerprint Representation Learning

Feb 16, 2024

Abstract: Fingerprint recognition is a pivotal component of biometric technology, with applications ranging from identity verification to advanced search tools. In this paper, we propose a method for deriving robust fingerprint representations by leveraging enhancement-based pre-training. Building on the achievements of U-Net-based fingerprint enhancement, our method employs a specialized encoder to derive representations from fingerprint images in a self-supervised manner. We further refine these representations to improve verification capabilities. Experiments on publicly available fingerprint datasets show a marked improvement in verification performance over established self-supervised training techniques. Our findings not only highlight the effectiveness of our method but also pave the way for potential advancements. Crucially, our research indicates that it is feasible to extract meaningful fingerprint representations from degraded images without relying on enhanced samples.

* 8 pages, 4 figures, Accepted at 19th VISIGRAPP 2024: VISAPP conference

Finger-UNet: A U-Net based Multi-Task Architecture for Deep Fingerprint Enhancement

Oct 01, 2023

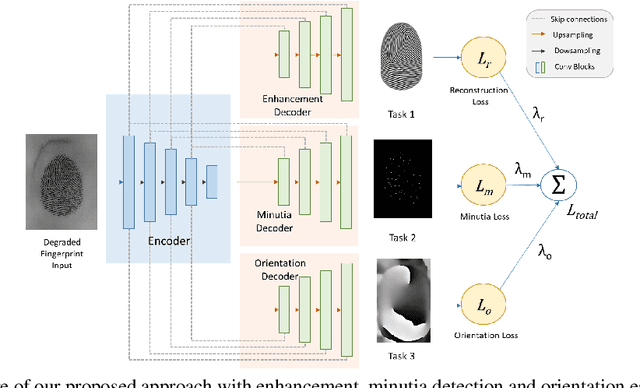

Abstract: For decades, fingerprint recognition has been prevalent in security, forensics, and other biometric applications. However, good-quality fingerprints are often unavailable, which makes recognition difficult. Fingerprint images may be degraded, with poor ridge structure and noisy or low-contrast backgrounds. Hence, fingerprint enhancement plays a vital role in the early stages of the fingerprint recognition/verification pipeline. In this paper, we investigate and improve the encoder-decoder style architecture and suggest intuitive modifications to U-Net to enhance low-quality fingerprints effectively. We investigate the use of the Discrete Wavelet Transform (DWT) for fingerprint enhancement and use a wavelet attention module instead of max pooling, which proves advantageous for our task. Moreover, we replace regular convolutions with depthwise separable convolutions, which significantly reduces the memory footprint of the model without degrading performance. We also demonstrate that incorporating domain knowledge through a fingerprint minutiae prediction task can improve fingerprint reconstruction via multi-task learning. We additionally integrate an orientation estimation task to propagate knowledge of ridge orientations and further improve performance. We present experimental results and evaluate our model on the FVC 2002 and NIST SD302 databases to show the effectiveness of our approach compared to previous works.

* 8 pages, 5 figures, Accepted at 18th VISIGRAPP 2023: VISAPP conference
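As a rough illustration of why the Finger-UNet abstract's switch to depthwise separable convolutions shrinks a model, the sketch below compares weight counts for a single layer. The layer sizes (3x3 kernel, 64 input channels, 128 output channels) are hypothetical and not taken from the paper, and parameter count is only one proxy for memory footprint.

```python
def conv_params(k, c_in, c_out):
    """Weights in a standard k x k convolution (bias omitted)."""
    return k * k * c_in * c_out


def depthwise_separable_params(k, c_in, c_out):
    """Weights in a depthwise k x k convolution followed by a
    1 x 1 pointwise convolution (bias omitted)."""
    depthwise = k * k * c_in   # one k x k filter per input channel
    pointwise = c_in * c_out   # 1 x 1 convolution that mixes channels
    return depthwise + pointwise


if __name__ == "__main__":
    # Hypothetical layer sizes, chosen only for illustration.
    k, c_in, c_out = 3, 64, 128
    standard = conv_params(k, c_in, c_out)
    separable = depthwise_separable_params(k, c_in, c_out)
    print(f"standard:  {standard} weights")
    print(f"separable: {separable} weights")
    print(f"reduction: {standard / separable:.2f}x")
```

For these assumed sizes the standard layer has 73,728 weights and the separable one 8,768, a reduction of roughly 8.4x.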

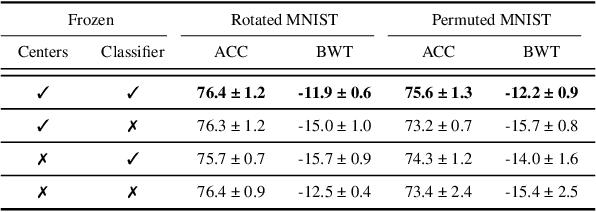

Center Loss Regularization for Continual Learning

Oct 21, 2021

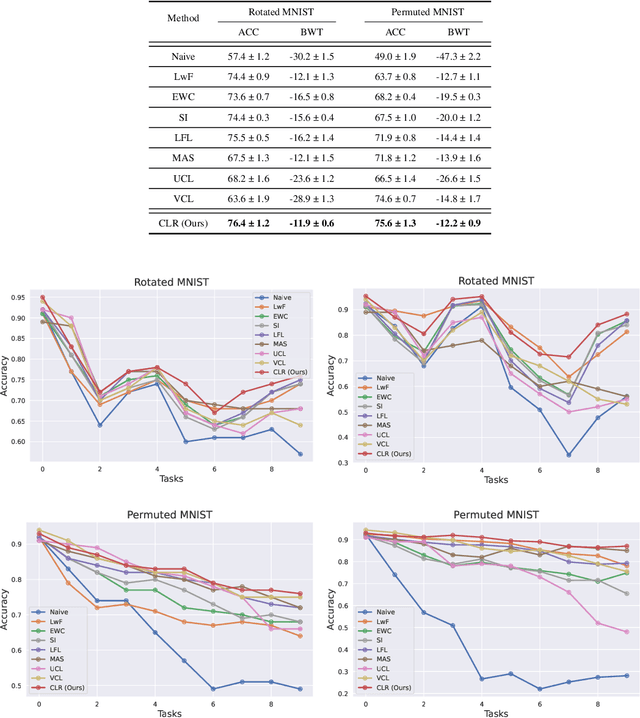

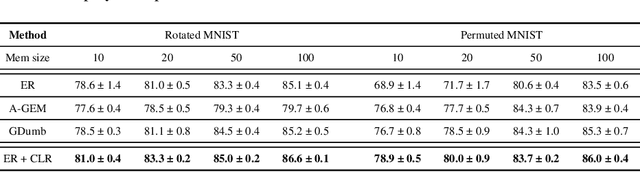

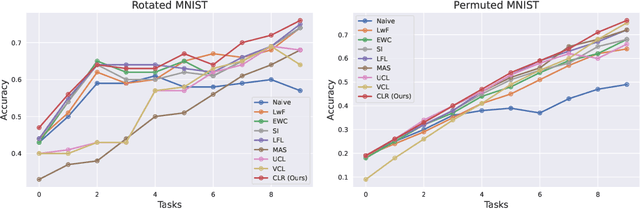

Abstract: The ability to learn different tasks sequentially is essential to the development of artificial intelligence. In general, neural networks lack this capability, the major obstacle being catastrophic forgetting. It occurs when incrementally available information from non-stationary data distributions is continually acquired, disrupting what the model has already learned. Our approach remembers old tasks by projecting the representations of new tasks close to those of old tasks while keeping the decision boundaries unchanged. We employ the center loss as a regularization penalty that enforces new tasks' features to have the same class centers as old tasks and makes the features highly discriminative. This, in turn, minimizes forgetting of already learned information. The method is easy to implement, requires minimal computational and memory overhead, and allows the neural network to maintain high performance across many sequentially encountered tasks. We also demonstrate that using the center loss in conjunction with memory replay outperforms other replay-based strategies. Along with standard MNIST variants for continual learning, we apply our method to continual domain adaptation scenarios with the Digits and PACS datasets. We demonstrate that our approach is scalable, effective, and gives competitive performance compared to state-of-the-art continual learning methods.
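The idea of using the center loss as a regularization penalty can be sketched in a few lines. This is a minimal illustration, not the authors' implementation: it assumes the class centers learned on old tasks are kept fixed, that the penalty is half the mean squared distance between each feature vector and its class center, and that it is added to the new task's loss with a hypothetical weight `lam`.

```python
def center_loss(features, labels, centers):
    """Half the mean squared Euclidean distance between each feature
    vector and the center of its class.

    features: list of feature vectors (lists of floats)
    labels:   list of class ids, one per feature vector
    centers:  dict mapping class id -> center vector (assumed fixed,
              e.g. learned on earlier tasks)
    """
    total = 0.0
    for x, y in zip(features, labels):
        c = centers[y]
        total += sum((xi - ci) ** 2 for xi, ci in zip(x, c))
    return 0.5 * total / len(features)


def regularized_loss(task_loss, features, labels, old_centers, lam=0.1):
    """New-task loss plus the center-loss penalty.
    lam is a hypothetical trade-off weight, not a value from the paper."""
    return task_loss + lam * center_loss(features, labels, old_centers)


if __name__ == "__main__":
    feats = [[1.0, 2.0], [3.0, 4.0]]
    labels = [0, 1]
    old_centers = {0: [1.0, 1.0], 1: [3.0, 2.0]}
    print(center_loss(feats, labels, old_centers))
    print(regularized_loss(2.0, feats, labels, old_centers, lam=0.1))
```

Pulling new-task features toward the old class centers is what keeps the representations close to those of earlier tasks; a real training loop would compute this on network activations and backpropagate through it.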

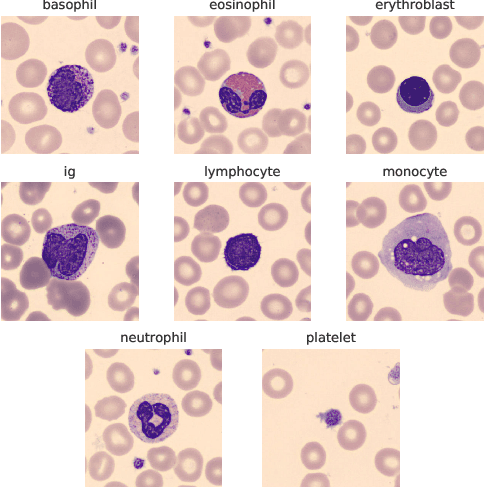

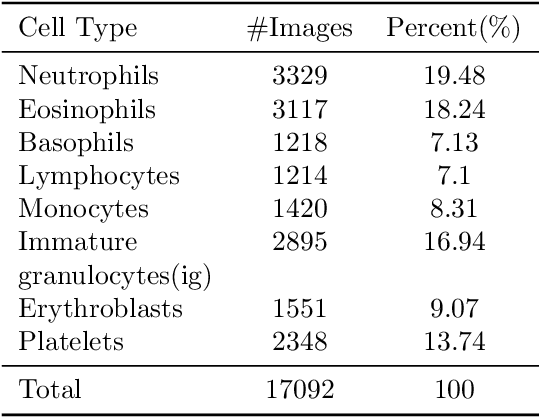

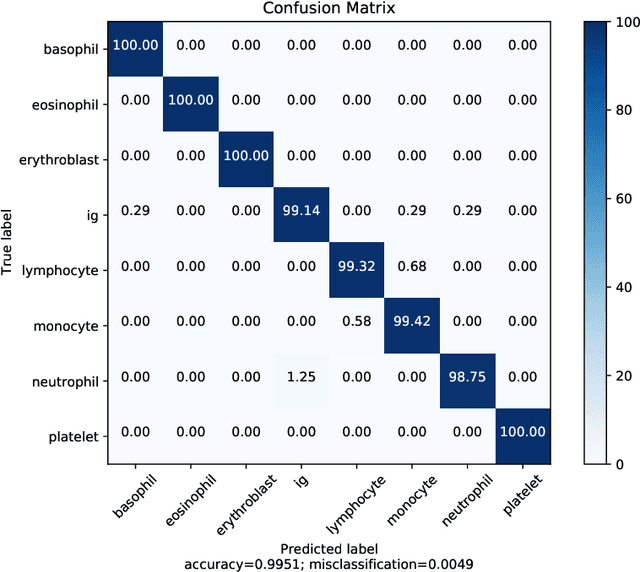

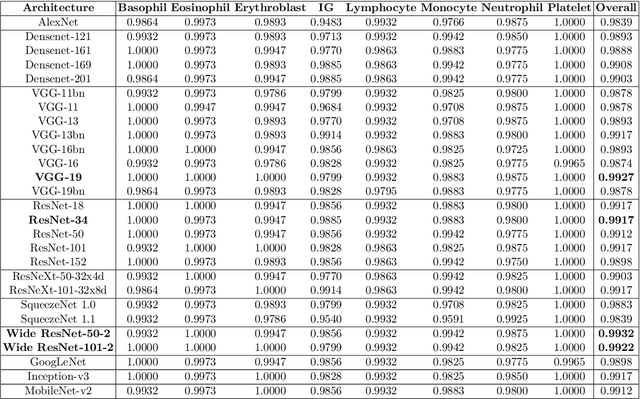

Deep CNNs for Peripheral Blood Cell Classification

Oct 18, 2021

Abstract: Applying machine learning techniques to the medical domain is especially challenging because of the required level of precision and the high risk that even minute errors carry. Applying these techniques to the more complex subdomain of hematological diagnosis seems quite promising: automatic identification of blood cell types can help in the detection of hematologic disorders. In this paper, we benchmark 27 popular deep convolutional neural network architectures on the microscopic peripheral blood cell images dataset. The dataset is publicly available and contains a large number of normal peripheral blood cells acquired using the CellaVision DM96 analyzer and classified by expert pathologists into eight different cell types. We fine-tune state-of-the-art image classification models pre-trained on the ImageNet dataset for blood cell classification. We use data augmentation during training to avoid overfitting and improve generalization. An ensemble of the top-performing models obtains significant improvements over previously published work, achieving state-of-the-art results with a classification accuracy of 99.51%. Our work provides empirical baselines and benchmarks on standard deep-learning architectures for the microscopic peripheral blood cell recognition task.
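The abstract does not say how the ensemble combines its members' predictions. One common option, assumed here purely for illustration, is soft voting: average the per-class probabilities from each model and pick the class with the highest mean. The probabilities below are made up and the three-class setup is a simplification of the eight cell types.

```python
def soft_vote(prob_lists):
    """Average per-class probabilities across models and return
    (predicted class index, averaged probabilities).

    prob_lists: one probability vector per model, all the same length.
    """
    n_models = len(prob_lists)
    n_classes = len(prob_lists[0])
    avg = [sum(p[c] for p in prob_lists) / n_models for c in range(n_classes)]
    best = max(range(n_classes), key=lambda c: avg[c])
    return best, avg


if __name__ == "__main__":
    # Made-up outputs of three hypothetical models for one image.
    model_probs = [
        [0.6, 0.3, 0.1],
        [0.2, 0.5, 0.3],
        [0.3, 0.4, 0.3],
    ]
    predicted, averaged = soft_vote(model_probs)
    print(predicted, [round(p, 3) for p in averaged])
```

In this example the first model alone would pick class 0, but the averaged probabilities favor class 1, which shows how an ensemble can overrule a single confident member.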
