Ehsan Saeedizade

Benchmarking Machine Learning Models for IoT Malware Detection under Data Scarcity and Drift

Jan 26, 2026

Abstract: The rapid expansion of the Internet of Things (IoT) in domains such as smart cities, transportation, and industrial systems has heightened the urgency of addressing their security vulnerabilities. IoT devices often operate under limited computational resources, lack robust physical safeguards, and are deployed in heterogeneous and dynamic networks, making them prime targets for cyberattacks and malware applications. Machine learning (ML) offers a promising approach to automated malware detection and classification, but practical deployment requires models that are both effective and lightweight. The goal of this study is to investigate the effectiveness of four supervised learning models (Random Forest, LightGBM, Logistic Regression, and a Multi-Layer Perceptron) for malware detection and classification using the IoT-23 dataset. We evaluate model performance in both binary and multiclass classification tasks, assess sensitivity to training data volume, and analyze temporal robustness to simulate deployment in evolving threat landscapes. Our results show that tree-based models achieve high accuracy and generalization, even with limited training data, while performance deteriorates over time as malware diversity increases. These findings underscore the importance of adaptive, resource-efficient ML models for securing IoT systems in real-world environments.
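The temporal-robustness analysis mentioned in this abstract can be illustrated with a minimal sketch: train on earlier samples, then track accuracy per later time period. Everything here is an assumption for illustration, not the paper's protocol: the `(timestamp, feature, label)` sample format, the `temporal_split` and `accuracy_by_period` helpers, the toy data, and the threshold rule standing in for a trained model.

```python
from collections import defaultdict

def temporal_split(samples, cutoff):
    """Split samples by time: before `cutoff` for training, the rest for testing."""
    train = [s for s in samples if s[0] < cutoff]
    test = [s for s in samples if s[0] >= cutoff]
    return train, test

def accuracy_by_period(samples, predict, period):
    """Return accuracy per time period, keyed by timestamp // period."""
    hits = defaultdict(int)
    counts = defaultdict(int)
    for timestamp, feature, label in samples:
        key = timestamp // period
        counts[key] += 1
        hits[key] += int(predict(feature) == label)
    return {key: hits[key] / counts[key] for key in sorted(counts)}

def predict(feature):
    """Hypothetical stand-in for a trained classifier (simple threshold rule)."""
    return int(feature > 0.5)

# Toy (timestamp, feature, label) samples; not drawn from IoT-23.
samples = [
    (1, 0.1, 0), (2, 0.9, 1), (3, 0.2, 0),
    (11, 0.8, 1), (12, 0.4, 1), (13, 0.3, 0),
]

train, test = temporal_split(samples, cutoff=10)
per_period = accuracy_by_period(samples, predict, period=10)
print(per_period)
```

A drop in accuracy from one period to the next, as in this toy data, is the kind of signal a drift analysis would look for.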

I/O Burst Prediction for HPC Clusters using Darshan Logs

Aug 20, 2023

Abstract: Understanding cluster-wide I/O patterns of large-scale HPC clusters is essential to minimize the occurrence and impact of I/O interference. Yet, most previous work in this area focused on monitoring and predicting task and node-level I/O burst events. This paper analyzes Darshan reports from three supercomputers to extract system-level read and write I/O rates in five-minute intervals. We observe significant (over 100x) fluctuations in read and write I/O rates in all three clusters. We then train machine learning models to estimate the occurrence of system-level I/O bursts 5 to 120 minutes ahead. Evaluation results show that we can predict I/O bursts with more than 90% accuracy (F1 score) five minutes ahead and more than 87% accuracy two hours ahead. We also show that the ML models attain more than 70% accuracy when estimating the degree of the I/O burst. We believe that high-accuracy predictions of I/O bursts can be used in multiple ways, such as postponing delay-tolerant I/O operations (e.g., checkpointing), pausing nonessential applications (e.g., file system scrubbers), and devising I/O-aware job scheduling methods. To validate this claim, we simulated a burst-aware job scheduler that can postpone the start time of applications to avoid I/O bursts. We show that burst-aware job scheduling can reduce application runtime by up to 5x.
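As a rough illustration of extracting system-level I/O rates in five-minute intervals, the sketch below sums transferred bytes per 300-second bin and flags unusually busy bins. The flat `(timestamp, bytes)` record format is a hypothetical simplification of what Darshan reports contain, and the median-based burst threshold is an assumption; the abstract does not state how a burst is defined.

```python
from collections import defaultdict

BIN_SECONDS = 300  # five-minute intervals, as described in the abstract

def bin_io_rates(records, bin_seconds=BIN_SECONDS):
    """Sum bytes per time bin and convert each total to bytes per second.

    `records` is an iterable of (timestamp_seconds, bytes_moved) pairs
    (a hypothetical, simplified input format).
    """
    totals = defaultdict(int)
    for timestamp, nbytes in records:
        totals[int(timestamp // bin_seconds)] += nbytes
    return {b: total / bin_seconds for b, total in sorted(totals.items())}

def flag_bursts(rates, factor=10.0):
    """Mark bins whose rate exceeds `factor` times the (upper) median rate.

    This threshold rule is an assumption made for illustration only.
    """
    ordered = sorted(rates.values())
    median = ordered[len(ordered) // 2]
    return {b: rate > factor * median for b, rate in rates.items()}

# Toy records: (timestamp in seconds, bytes transferred).
records = [(0, 3000), (120, 3000), (310, 6000), (650, 600000), (900, 3000)]

rates = bin_io_rates(records)
bursts = flag_bursts(rates)
print(rates)
print(bursts)
```

The per-bin burst flags could then serve as labels for a model that predicts bursts some number of bins ahead.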

Demystifying the Performance of Data Transfers in High-Performance Research Networks

Aug 20, 2023

Abstract: High-speed research networks are built to meet the ever-increasing needs of data-intensive distributed workflows. However, data transfers in these networks often fail to attain the promised transfer rates for several reasons, including I/O and network interference, server misconfigurations, and network anomalies. Although understanding the root causes of performance issues is critical to mitigating them and increasing the utilization of expensive network infrastructures, there is currently no available mechanism to monitor data transfers in these networks. In this paper, we present a scalable, end-to-end monitoring framework to gather and store key performance metrics for file transfers to shed light on the performance of transfers. The evaluation results show that the proposed framework can monitor up to 400 transfers per host and more than 40,000 transfers in total while collecting performance statistics at one-second precision. We also introduce a heuristic method to automatically process the gathered performance metrics and identify the root causes of performance anomalies with an F-score of 87% to 98%.

Real-Time Facial Expression Recognition using Facial Landmarks and Neural Networks

Jan 31, 2022

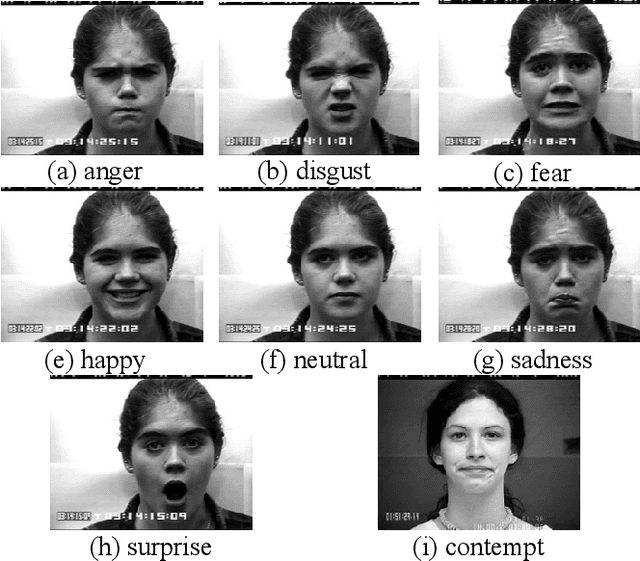

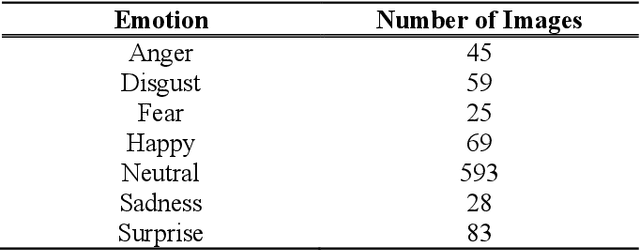

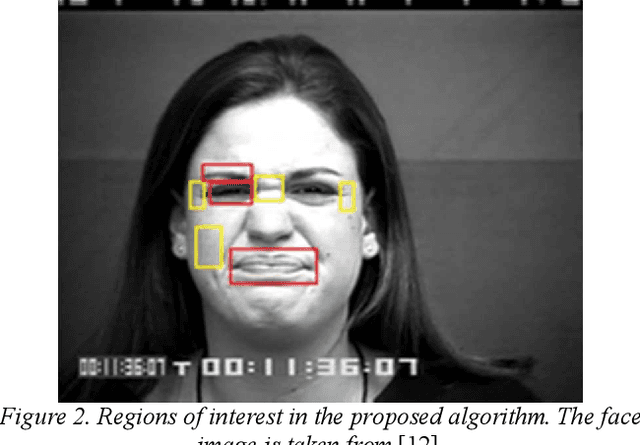

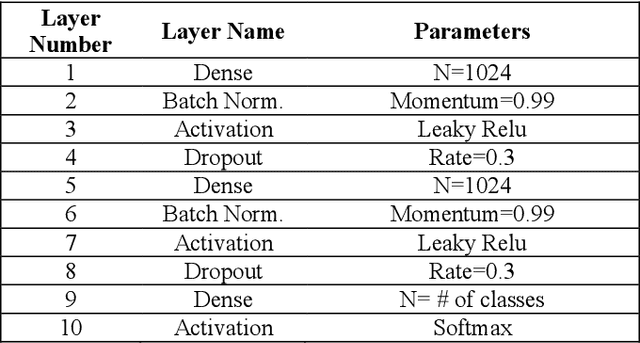

Abstract: This paper presents a lightweight algorithm for feature extraction, classification of seven different emotions, and real-time facial expression recognition based on static images of the human face. A Multi-Layer Perceptron (MLP) neural network is trained on the features this algorithm produces. To classify human faces, the input image is first pre-processed to localize and crop the faces in it. Next, a facial landmark detection library is used to detect the landmarks of each face. Then, each face is split into upper and lower parts, which enables the extraction of the desired features from each part. The proposed model takes both geometric and texture-based features into account. After the feature extraction phase, a normalized feature vector is created. A 3-layer MLP is trained using these feature vectors, leading to 96% accuracy on the test set.
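The geometric part of the pipeline described here (split the face, extract features from each part, normalize) might look roughly like the sketch below. The details are assumptions, since the abstract does not give them: landmarks are split at their mean vertical position, features are pairwise distances, and normalization divides by the largest distance. Texture-based features and the landmark detection library itself are omitted.

```python
import math

def split_face(landmarks):
    """Split (x, y) landmarks into upper and lower parts at the mean y.

    Uses image coordinates, where a smaller y is higher in the image.
    The mean-y split is a hypothetical choice for illustration.
    """
    mid_y = sum(y for _, y in landmarks) / len(landmarks)
    upper = [p for p in landmarks if p[1] < mid_y]
    lower = [p for p in landmarks if p[1] >= mid_y]
    return upper, lower

def geometric_features(points):
    """Return the Euclidean distance between every pair of points."""
    return [
        math.dist(a, b)
        for i, a in enumerate(points)
        for b in points[i + 1:]
    ]

def normalize(vector):
    """Scale a non-negative vector so that its largest entry is 1."""
    peak = max(vector)
    return [v / peak for v in vector] if peak else list(vector)

# Toy landmarks; a real detector returns many more points per face.
landmarks = [(0, 0), (4, 0), (0, 6), (4, 6), (2, 9)]

upper, lower = split_face(landmarks)
features = normalize(geometric_features(upper) + geometric_features(lower))
print(features)
```

A vector like `features` is what would be passed to the MLP as one training example.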
