Eduardo C. Garrido-Merchan

Explainable Post hoc Portfolio Management Financial Policy of a Deep Reinforcement Learning agent

Jul 19, 2024

Abstract: Financial portfolio management investment policies computed quantitatively by modern portfolio theory techniques like the Markowitz model rely on a set of assumptions that are not supported by data in high-volatility markets. Hence, quantitative researchers are looking for alternative models to tackle this problem. Concretely, portfolio management is a problem that has been successfully addressed recently by Deep Reinforcement Learning (DRL) approaches. In particular, DRL algorithms train an agent by estimating the distribution of the expected reward of every action performed by an agent given any financial state in a simulator. However, these methods rely on Deep Neural Network models to represent such a distribution; although these models are universal approximators, they cannot explain their behaviour, which is given by a set of parameters that are not interpretable. Critically, financial investors' policies require predictions to be interpretable, so DRL agents are not suited to follow a particular policy or explain their actions. In this work, we developed a novel Explainable Deep Reinforcement Learning (XDRL) approach for portfolio management, integrating Proximal Policy Optimization (PPO) with the model-agnostic explainability techniques of feature importance, SHAP and LIME to enhance transparency at prediction time. By executing our methodology, we can interpret at prediction time the actions of the agent to assess whether they follow the requirements of an investment policy or to assess the risk of following the agent's suggestions. To the best of our knowledge, our proposed approach is the first explainable post hoc portfolio management financial policy of a DRL agent. We empirically illustrate our methodology by successfully identifying key features influencing investment decisions, which demonstrates the ability to explain the agent's actions at prediction time.
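As a rough illustration of what a post hoc, model-agnostic feature importance computation can look like, the sketch below applies permutation importance to a toy policy. It is not the paper's method or code: `toy_policy`, its coefficients, and the synthetic states are hypothetical stand-ins for a trained PPO agent and real market features, and permutation importance is used here in place of SHAP or LIME because it needs only the standard library.

```python
import math
import random


def toy_policy(state):
    """Hypothetical stand-in for a trained agent: maps a state to weights over 2 assets."""
    # Assumption: feature 2 has a zero coefficient, so the policy ignores it.
    logit = 2.0 * state[0] + 0.5 * state[1] + 0.0 * state[2]
    w0 = 1.0 / (1.0 + math.exp(-logit))
    return [w0, 1.0 - w0]


def permutation_importance(policy, states, rng):
    """Mean absolute change in the policy output when one feature column is shuffled."""
    baseline = [policy(s) for s in states]
    scores = []
    for j in range(len(states[0])):
        # Shuffle column j across states, which breaks its link to the output.
        column = [s[j] for s in states]
        rng.shuffle(column)
        total = 0.0
        for s, value, base in zip(states, column, baseline):
            perturbed = list(s)
            perturbed[j] = value
            out = policy(perturbed)
            total += sum(abs(a - b) for a, b in zip(out, base))
        scores.append(total / len(states))
    return scores


rng = random.Random(0)
# Synthetic states: 500 samples with 3 standard-normal features each.
states = [[rng.gauss(0.0, 1.0) for _ in range(3)] for _ in range(500)]
importance = permutation_importance(toy_policy, states, rng)
print(importance)
```

Because the policy is treated as a black box, the same function could in principle be pointed at any callable that maps a state to portfolio weights. A feature the policy ignores scores zero, and features with larger influence on the allocation score higher.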

Best uses of ChatGPT and Generative AI for computer science research

Nov 18, 2023

Abstract: Generative Artificial Intelligence (AI), particularly tools like OpenAI's popular ChatGPT, is reshaping the landscape of computer science research. Used wisely, these tools can boost the productivity of a computer research scientist. This paper explores the diverse applications of ChatGPT and other generative AI technologies in computer science academic research, making recommendations about the use of generative AI to make the computer research scientist more productive, with a focus on writing new research papers. We highlight innovative uses such as brainstorming research ideas, aiding in the drafting and styling of academic papers, and assisting in the synthesis of the state-of-the-art section. Further, we delve into using these technologies for understanding interdisciplinary approaches, making complex texts simpler, and recommending suitable academic journals for publication. Significant focus is placed on generative AI's contributions to synthetic data creation, research methodology, and mentorship, as well as to task organization and article quality assessment. The paper also addresses the utility of AI in article review, adapting texts to length constraints, constructing counterarguments, and survey development. Moreover, we explore the capabilities of these tools in disseminating ideas, generating images and audio, text transcription, and engaging with editors. We also describe some non-recommended uses of generative AI for computer science research, mainly because of the limitations of this technology.

ChatGPT is not all you need. A State of the Art Review of large Generative AI models

Jan 11, 2023

Abstract: During the last two years, a plethora of large generative models such as ChatGPT or Stable Diffusion have been published. Concretely, these models are able to perform tasks such as acting as a general question-answering system or automatically creating artistic images, and they are revolutionizing several sectors. Consequently, the implications that these generative models have for industry and society are enormous, as several job positions may be transformed. For example, Generative AI is capable of effectively and creatively transforming texts to images, like the DALLE-2 model; texts to 3D images, like the Dreamfusion model; images to text, like the Flamingo model; texts to video, like the Phenaki model; texts to audio, like the AudioLM model; texts to other texts, like ChatGPT; texts to code, like the Codex model; texts to scientific texts, like the Galactica model; or even of creating algorithms, like AlphaTensor. This work is an attempt to describe concisely the main models and sectors that are affected by generative AI and to provide a taxonomy of the main generative models published recently.

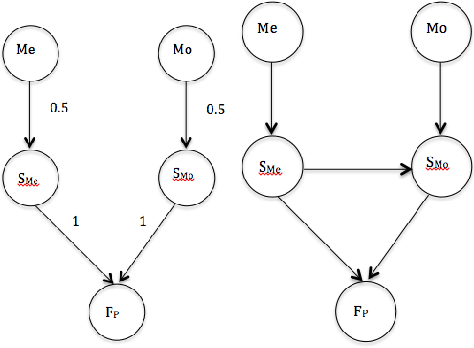

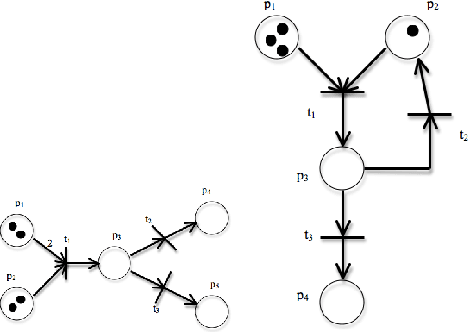

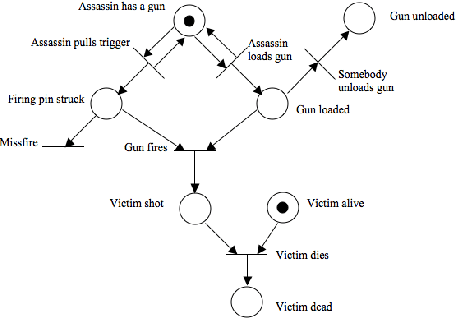

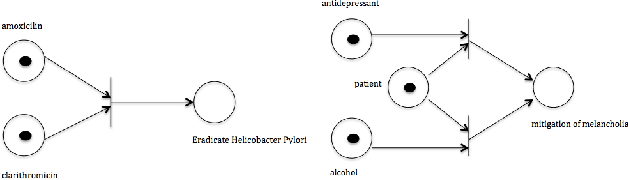

Fuzzy Stochastic Timed Petri Nets for Causal properties representation

Nov 24, 2020

Abstract: Imagery is frequently used to model, represent and communicate knowledge. In particular, graphs are one of the most powerful tools, being able to represent relations between objects. Causal relations are frequently represented by directed graphs, with nodes denoting causes and links denoting causal influence. A causal graph is a skeletal picture, showing causal associations and impact between entities. Common methods used for graphically representing causal scenarios are neurons, truth tables, causal Bayesian networks, cognitive maps and Petri Nets. Causality is often defined in terms of precedence (the cause precedes the effect), concurrency (often, an effect is provoked simultaneously by two or more causes), circularity (a cause provokes the effect and the effect reinforces the cause) and imprecision (the presence of the cause favors the effect, but does not necessarily cause it). We will show that, even though the traditional graphical models are able to represent some of the aforementioned properties separately, they fail to represent all of them at once. To address that gap, we will introduce Fuzzy Stochastic Timed Petri Nets as a graphical tool able to represent time, co-occurrence, looping and imprecision in causal flow.
