Edoardo Urettini

Online Continual Learning for Time Series: a Natural Score-driven Approach

Jan 19, 2026

Abstract:Online continual learning (OCL) methods adapt to changing environments without forgetting past knowledge. Similarly, online time series forecasting (OTSF) is a real-world problem where data evolve in time and success depends on both rapid adaptation and long-term memory. Indeed, time-varying and regime-switching forecasting models have been extensively studied, offering a strong justification for the use of OCL in these settings. Building on recent work that applies OCL to OTSF, this paper aims to strengthen the theoretical and practical connections between time series methods and OCL. First, we reframe neural network optimization as a parameter filtering problem, showing that natural gradient descent is a score-driven method and proving its information-theoretic optimality. Then, we show that using a Student's t likelihood in addition to natural gradient induces a bounded update, which improves robustness to outliers. Finally, we introduce Natural Score-driven Replay (NatSR), which combines our robust optimizer with a replay buffer and a dynamic scale heuristic that improves fast adaptation at regime drifts. Empirical results demonstrate that NatSR achieves stronger forecasting performance than more complex state-of-the-art methods.
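The bounded-update claim in the abstract above can be illustrated on a toy case. The sketch below is not the paper's optimizer: it assumes a single scalar location parameter `mu` with a known scale `sigma` and degrees of freedom `nu`, and all function names are hypothetical. It compares the Gaussian score, which grows linearly with the residual, against the Student's t score scaled by the inverse Fisher information, which stays bounded and shrinks toward zero for extreme outliers.

```python
import math


def gaussian_score(y, mu, sigma):
    """Score of a Gaussian log-likelihood w.r.t. the mean: unbounded in the residual."""
    return (y - mu) / sigma**2


def student_t_score(y, mu, sigma, nu):
    """Score of a Student's t log-likelihood w.r.t. the location parameter."""
    e = y - mu
    return (nu + 1.0) * e / (nu * sigma**2 + e**2)


def student_t_natural_step(y, mu, sigma, nu):
    """Score divided by the Fisher information of the location parameter.

    For a Student's t location the Fisher information is
    (nu + 1) / ((nu + 3) * sigma^2), so the step simplifies to
    (nu + 3) * e * sigma^2 / (nu * sigma^2 + e^2).
    """
    fisher = (nu + 1.0) / ((nu + 3.0) * sigma**2)
    return student_t_score(y, mu, sigma, nu) / fisher


if __name__ == "__main__":
    # Toy values chosen for illustration only.
    for residual in (0.5, 2.0, 10.0, 1000.0):
        print(residual,
              gaussian_score(residual, 0.0, 1.0),
              student_t_natural_step(residual, 0.0, 1.0, 5.0))
```

In this scalar setting the step magnitude peaks at a residual of `sqrt(nu) * sigma` and never exceeds `(nu + 3) * sigma / (2 * sqrt(nu))`, which is one way to see why a single outlier cannot move the parameter arbitrarily far.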

Online Curvature-Aware Replay: Leveraging $\mathbf{2^{nd}}$ Order Information for Online Continual Learning

Feb 03, 2025

Abstract:Online Continual Learning (OCL) models continuously adapt to nonstationary data streams, usually without task information. These settings are complex and many traditional CL methods fail, while online methods (mainly replay-based) suffer from instabilities after the task shift. To address this issue, we formalize replay-based OCL as a second-order online joint optimization with explicit KL-divergence constraints on replay data. We propose Online Curvature-Aware Replay (OCAR) to solve the problem: a method that leverages second-order information of the loss using a K-FAC approximation of the Fisher Information Matrix (FIM) to precondition the gradient. The FIM acts as a stabilizer to prevent forgetting while also accelerating the optimization in non-interfering directions. We show how to adapt the estimation of the FIM to a continual setting stabilizing second-order optimization for non-iid data, uncovering the role of the Tikhonov regularization in the stability-plasticity tradeoff. Empirical results show that OCAR outperforms state-of-the-art methods in continual metrics achieving higher average accuracy throughout the training process in three different benchmarks.
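As a rough illustration of preconditioning a gradient with a Tikhonov-regularized Fisher matrix, the sketch below solves `(F + damping * I) d = g` for a dense 2x2 matrix. This is an assumption-laden toy: the abstract describes a K-FAC approximation of the FIM, which is not reproduced here, and the function name and numbers are hypothetical.

```python
def damped_natural_gradient(fisher, grad, damping):
    """Solve (F + damping * I) d = grad for a 2x2 Fisher matrix F.

    Uses the closed-form inverse of a 2x2 matrix; a real implementation
    would rely on a structured approximation instead of a dense solve.
    """
    a = fisher[0][0] + damping
    b = fisher[0][1]
    c = fisher[1][0]
    d = fisher[1][1] + damping
    det = a * d - b * c
    return [
        (d * grad[0] - b * grad[1]) / det,
        (a * grad[1] - c * grad[0]) / det,
    ]


if __name__ == "__main__":
    # Toy Fisher matrix with one high-curvature and one nearly flat direction.
    fisher = [[4.0, 0.0], [0.0, 0.01]]
    grad = [1.0, 1.0]
    for damping in (0.0, 1.0, 100.0):
        print(damping, damped_natural_gradient(fisher, grad, damping))
```

With zero damping the step along the nearly flat direction is very large. As the damping grows, the step approaches the plain gradient divided by the damping value, so the regularizer interpolates between a natural gradient step and a small gradient descent step.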

GAS-Norm: Score-Driven Adaptive Normalization for Non-Stationary Time Series Forecasting in Deep Learning

Oct 04, 2024

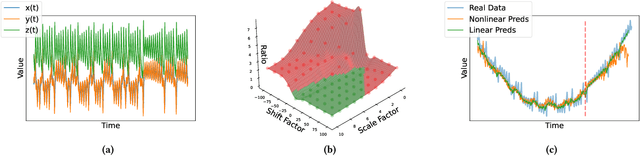

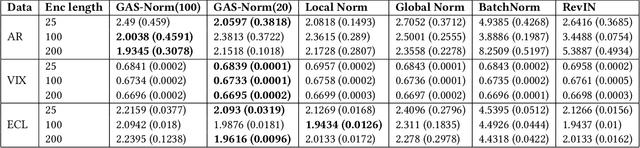

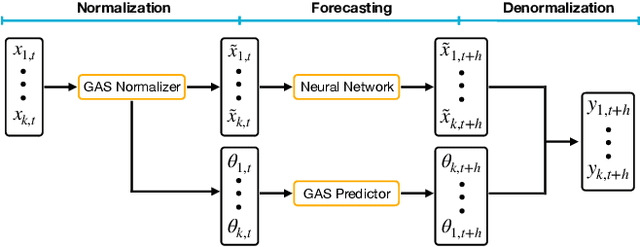

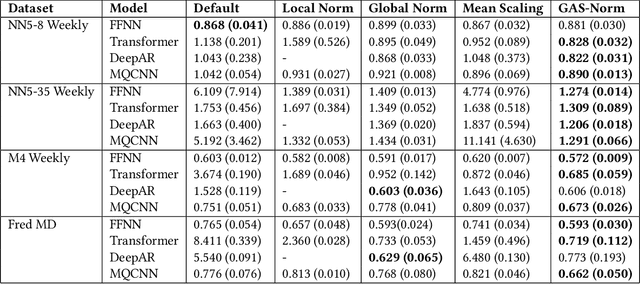

Abstract:Despite their popularity, deep neural networks (DNNs) applied to time series forecasting often fail to beat simpler statistical models. One of the main causes of this suboptimal performance is the data non-stationarity present in many processes. In particular, changes in the mean and variance of the input data can disrupt the predictive capability of a DNN. In this paper, we first show how DNN forecasting models fail in simple non-stationary settings. We then introduce GAS-Norm, a novel methodology for adaptive time series normalization and forecasting based on the combination of a Generalized Autoregressive Score (GAS) model and a Deep Neural Network. The GAS approach encompasses a score-driven family of models that estimate the mean and variance at each new observation, providing updated statistics to normalize the input data of the deep model. The output of the DNN is eventually denormalized using the statistics forecasted by the GAS model, resulting in a hybrid approach that leverages the strengths of both statistical modeling and deep learning. The adaptive normalization improves the performance of the model in non-stationary settings. The proposed approach is model-agnostic and can be applied to any DNN forecasting model. To empirically validate our proposal, we first compare GAS-Norm with other state-of-the-art normalization methods. We then combine it with state-of-the-art DNN forecasting models and test them on real-world datasets from the Monash open-access forecasting repository. Results show that deep forecasting models improve their performance in 21 out of 25 settings when combined with GAS-Norm compared to other normalization methods.

* Accepted at CIKM '24
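The normalize, forecast, denormalize loop described in the GAS-Norm abstract can be sketched with a simplified Gaussian score-driven filter. This is a minimal sketch under stated assumptions, not the paper's specification: it uses inverse-Fisher-scaled Gaussian scores with fixed learning rates `alpha` and `beta`, normalizes each observation with the statistics predicted before that observation is seen, and all names are hypothetical.

```python
import math


def gas_gaussian_filter(series, alpha=0.1, beta=0.1, mu0=0.0, var0=1.0):
    """Track a time-varying mean and variance and normalize each observation.

    With inverse-Fisher scaling, the Gaussian scaled scores are
    (y - mu) for the mean and ((y - mu)^2 - var) for the variance.
    The variance stays positive when 0 < beta < 1 and var0 > 0.
    """
    mu, var = mu0, var0
    normalized, stats = [], []
    for y in series:
        stats.append((mu, var))                    # statistics used for this step
        normalized.append((y - mu) / math.sqrt(var))
        err = y - mu
        mu = mu + alpha * err                      # score-driven mean update
        var = var + beta * (err * err - var)       # score-driven variance update
    return normalized, stats, (mu, var)


def denormalize(z, mu, var):
    """Map a normalized value (for example a model output) back to the data scale."""
    return z * math.sqrt(var) + mu


if __name__ == "__main__":
    series = [10.0, 10.5, 9.8, 30.0, 31.2, 29.5]   # toy data with a level shift
    z, stats, (next_mu, next_var) = gas_gaussian_filter(series)
    # A forecasting model would consume z and emit a normalized forecast z_hat;
    # here a placeholder value of 0.0 stands in for the model output.
    print(denormalize(0.0, next_mu, next_var))
```

The final `(mu, var)` pair plays the role of the forecasted statistics used to bring the model output back to the original scale.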
