Edoardo Giacomello

Distributed Learning Approaches for Automated Chest X-Ray Diagnosis

Oct 04, 2021

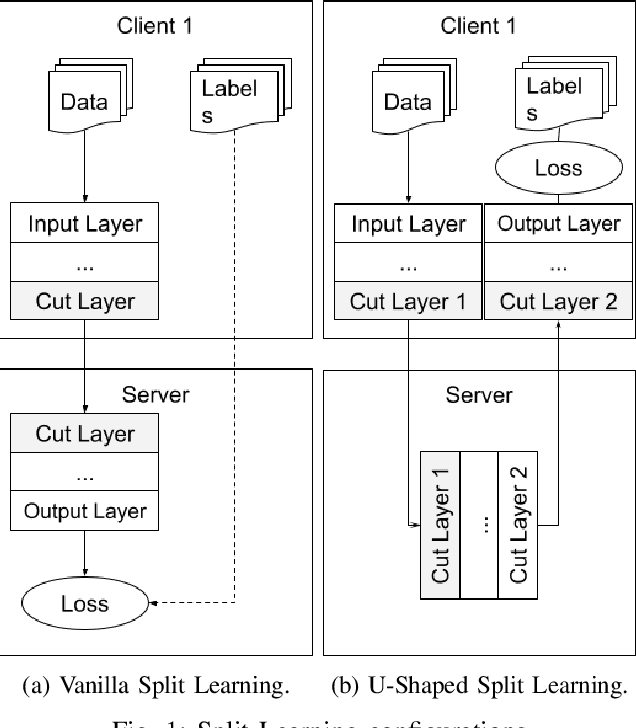

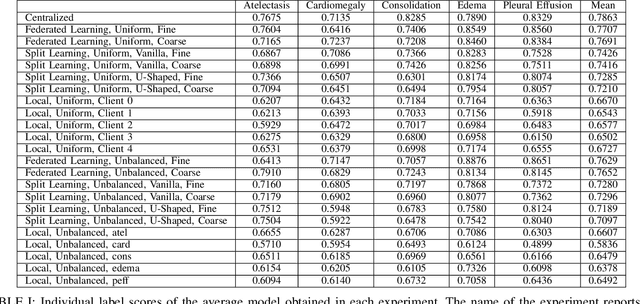

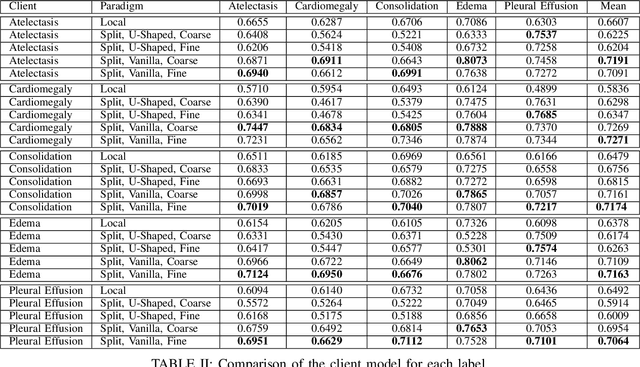

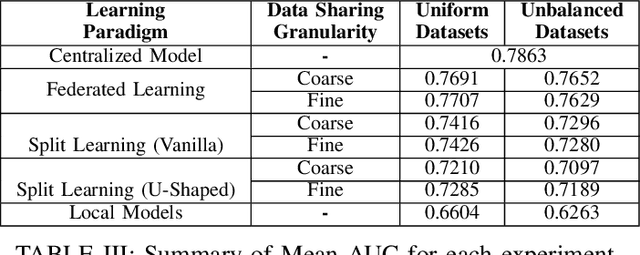

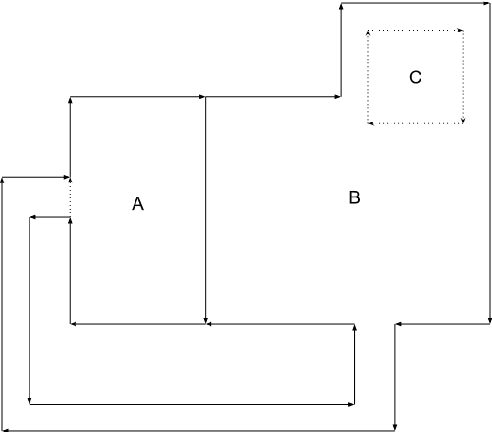

Abstract: Deep Learning has established itself in recent years as a successful approach to a wide variety of tasks. Healthcare is one of the most promising fields of application for Deep Learning, since these approaches could help clinicians analyze patient data and perform diagnoses. However, despite the vast amount of data collected every year in hospitals and other clinical institutes, privacy regulations on sensitive data, such as those related to health, pose a serious challenge to the application of these methods. In this work, we focus on strategies to cope with privacy issues when a consortium of healthcare institutions needs to train machine learning models for identifying a particular disease, comparing the performance of two recent distributed learning approaches, Federated Learning and Split Learning, on the task of Automated Chest X-Ray Diagnosis. In particular, we investigated the impact of different distributions of client data and of the possible policies on the frequency of data exchange between the institutions.
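For readers unfamiliar with Federated Learning, the following is a minimal sketch of federated averaging, a common aggregation rule in which a server combines client model parameters weighted by local dataset size. The abstract does not state which aggregation rule was used in the paper, so the function name `federated_average` and the flat-list representation of model weights are illustrative assumptions, not the authors' implementation.

```python
from typing import List, Sequence


def federated_average(
    client_weights: Sequence[Sequence[float]],
    client_sizes: Sequence[int],
) -> List[float]:
    """Average client parameter vectors, weighted by local dataset size.

    Hypothetical helper for illustration only: each client is assumed to
    send a flat list of parameters and the number of samples it trained on.
    Raw patient data never leaves the client; only parameters are shared.
    """
    if not client_weights or len(client_weights) != len(client_sizes):
        raise ValueError("need one dataset size per client")
    total = sum(client_sizes)
    if total <= 0:
        raise ValueError("total number of samples must be positive")

    dim = len(client_weights[0])
    averaged = [0.0] * dim
    for weights, size in zip(client_weights, client_sizes):
        if len(weights) != dim:
            raise ValueError("all clients must send vectors of equal length")
        for i, w in enumerate(weights):
            # Each client contributes in proportion to its share of the data.
            averaged[i] += w * size / total
    return averaged


# Two hypothetical hospitals: the second holds three times as much data,
# so its parameters dominate the aggregated model.
global_weights = federated_average([[1.0, 2.0], [3.0, 4.0]], [1, 3])
```

How often clients send their parameters to the server is one of the exchange-frequency policies the abstract refers to; in this sketch it would correspond to how many local training steps run between calls to `federated_average`.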

Image Embedding and Model Ensembling for Automated Chest X-Ray Interpretation

May 05, 2021

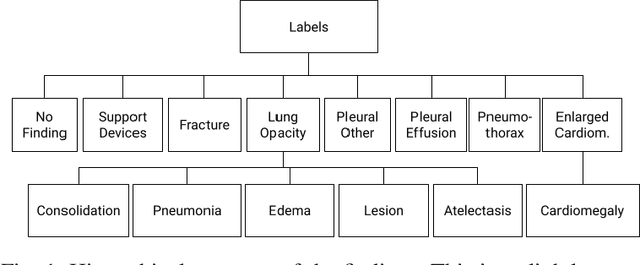

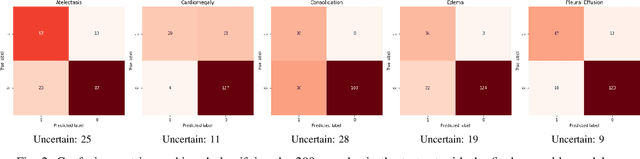

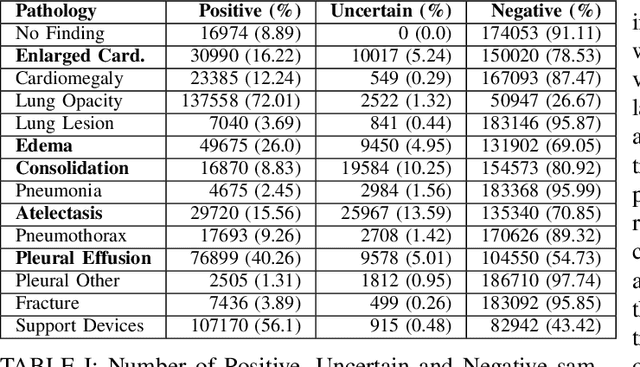

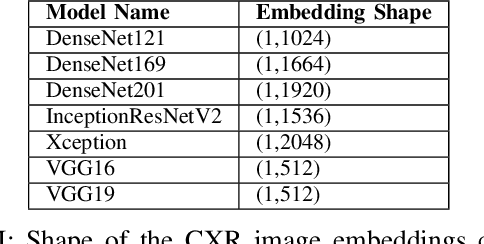

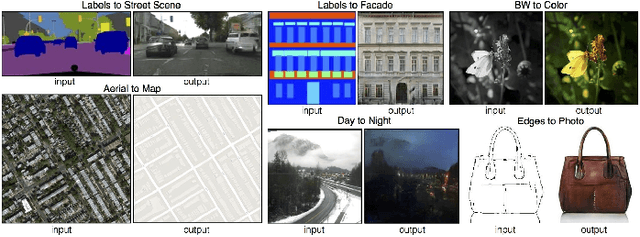

Abstract: Chest X-ray (CXR) is perhaps the most frequently performed radiological investigation globally. In this work, we present and study several machine learning approaches to develop automated CXR diagnostic models. In particular, we trained several Convolutional Neural Networks (CNNs) on the CheXpert dataset, a large collection of more than 200k labeled CXR images. Then, we used the trained CNNs to compute embeddings of the CXR images and trained two sets of tree-based classifiers on them. Finally, we described and compared three ensembling strategies to combine the trained classifiers. Rather than expecting performance benefits, our goal in this work is to show that these two methodologies, i.e., the extraction of image embeddings and model ensembling, can be effective and viable for tasks that require medical imaging understanding. Our results in that respect are encouraging and worthy of further investigation.
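As an illustration of model ensembling, the sketch below averages the per-label probabilities predicted by several classifiers for a single image. The abstract does not name the three ensembling strategies compared in the paper, so simple averaging is shown only as one common option; the function name `average_ensemble` and the example label values are assumptions made for this sketch.

```python
from typing import List, Sequence


def average_ensemble(predictions: Sequence[Sequence[float]]) -> List[float]:
    """Combine per-label probabilities from several models by simple averaging.

    `predictions[m][j]` is assumed to be model m's probability for label j
    on one image (hypothetical layout chosen for illustration).
    """
    if not predictions:
        raise ValueError("need at least one model prediction")
    n_models = len(predictions)
    n_labels = len(predictions[0])
    if any(len(p) != n_labels for p in predictions):
        raise ValueError("all models must predict the same set of labels")
    # Mean probability per label across all models.
    return [sum(p[j] for p in predictions) / n_models for j in range(n_labels)]


# Two hypothetical classifiers scoring two findings on the same image.
combined = average_ensemble([[0.2, 0.8], [0.4, 0.6]])
```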

Transfer Brain MRI Tumor Segmentation Models Across Modalities with Adversarial Networks

Oct 07, 2019

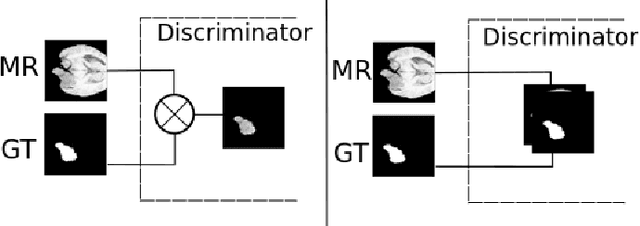

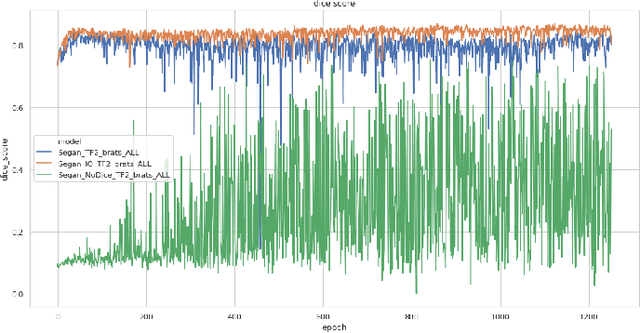

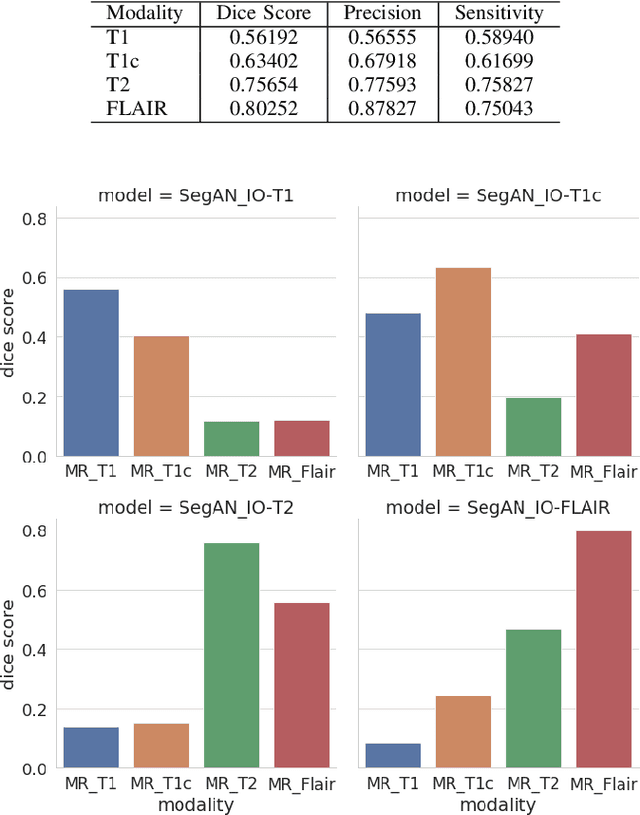

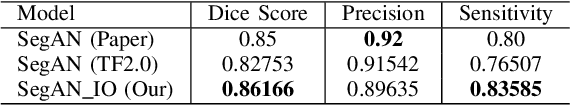

Abstract: In this work, we present an approach to brain cancer segmentation in Magnetic Resonance Images (MRI) using Adversarial Networks, which have been successfully applied to several complex image processing problems in recent years. Most of the segmentation approaches presented in the literature exploit the data from all the contrast modalities typically acquired in clinical practice: T1-weighted, T1-weighted contrast-enhanced, T2-weighted, and T2-FLAIR. Unfortunately, not all of these modalities are always available for each patient. Accordingly, in this paper, we extended a previous segmentation approach based on Adversarial Networks to deal with this issue. In particular, we trained a separate segmentation model for each modality and evaluated the performance of these models. Then, we investigated the possibility of transferring the best of these single-modality models to the other modalities. Our results suggest that such a transfer learning approach achieves better performance for almost all the target modalities.

DOOM Level Generation using Generative Adversarial Networks

Apr 24, 2018

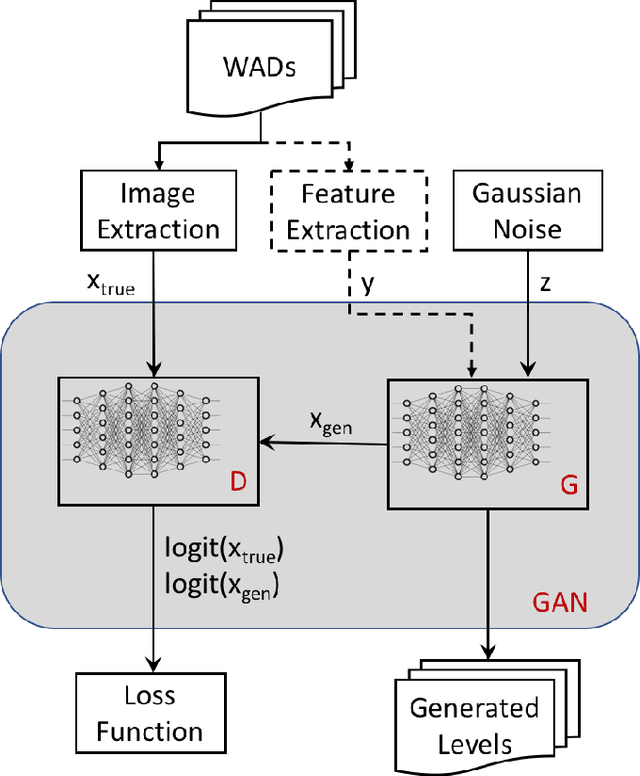

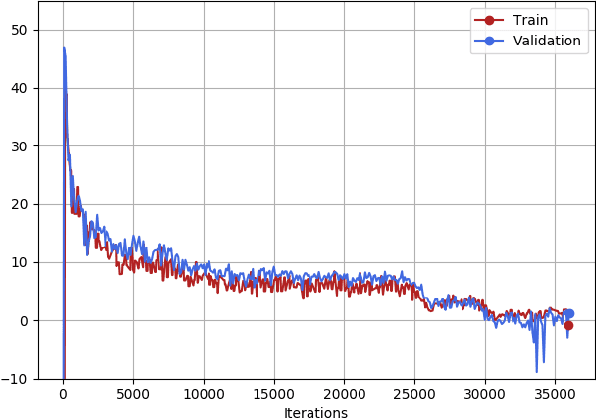

Abstract: We applied Generative Adversarial Networks (GANs) to learn a model of DOOM levels from human-designed content. Initially, we analysed the levels and extracted several topological features. Then, for each level, we extracted a set of images identifying the occupied area, the height map, the walls, and the position of game objects. We trained two GANs: one using plain level images, and one using both the images and some of the features extracted during the preliminary analysis. We used the two networks to generate new levels and compared the results to assess whether the network also trained on the topological features could generate levels more similar to human-designed ones. Our results show that GANs can capture the intrinsic structure of DOOM levels and appear to be a promising approach to level generation in first-person shooter games.
