Transfer Brain MRI Tumor Segmentation Models Across Modalities with Adversarial Networks


Oct 07, 2019

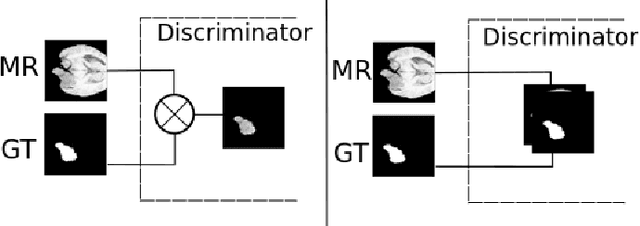

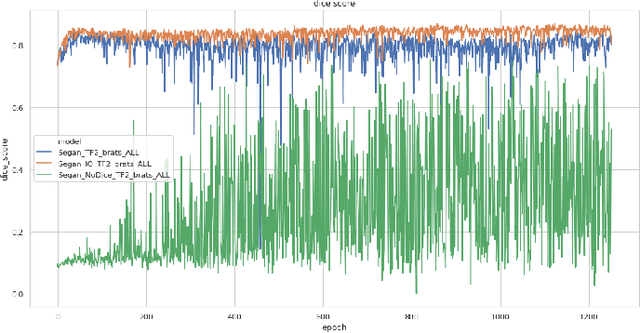

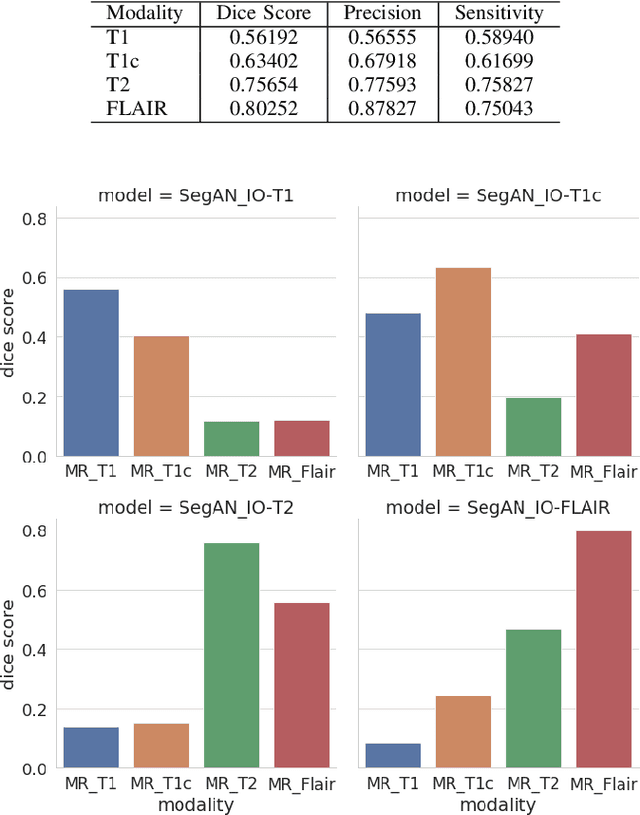

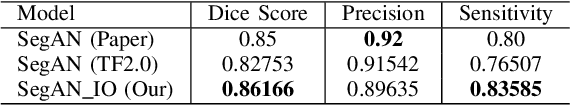

In this work, we present an approach to brain cancer segmentation in Magnetic Resonance Images (MRI) using Adversarial Networks, which have been successfully applied to several complex image-processing problems in recent years. Most segmentation approaches presented in the literature exploit data from all the contrast modalities typically acquired in clinical practice: T1-weighted, T1-weighted contrast-enhanced, T2-weighted, and T2-FLAIR. Unfortunately, not all of these modalities are always available for every patient. Accordingly, in this paper we extended a previous segmentation approach based on Adversarial Networks to deal with this issue. In particular, we trained a separate segmentation model on each single modality and evaluated the performance of each of these models. We then investigated the possibility of transferring the best of these single-modality models to the other modalities. Our results suggest that such a transfer learning approach achieves better performance for almost all of the target modalities.
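The experimental protocol described in the abstract (train one model per modality, pick the best, then reuse it as the starting point for the others) can be illustrated with a toy sketch. This is not the authors' adversarial segmentation network: the "segmenter" here is a hypothetical per-pixel logistic regression on a single intensity value, the data are synthetic, the per-modality contrasts in `CONTRASTS` are invented for illustration, and the Dice score is an assumed evaluation metric. Transfer is modeled as simple weight initialization followed by brief fine-tuning.

```python
import math
import random


def sigmoid(z):
    """Numerically stable logistic function."""
    if z >= 0:
        return 1.0 / (1.0 + math.exp(-z))
    e = math.exp(z)
    return e / (1.0 + e)


def train(pixels, labels, weights=(0.0, 0.0), lr=0.5, epochs=200):
    """Full-batch gradient descent on binary cross-entropy, starting from `weights`."""
    w, b = weights
    n = len(pixels)
    for _ in range(epochs):
        gw = gb = 0.0
        for x, y in zip(pixels, labels):
            err = sigmoid(w * x + b) - y  # derivative of BCE w.r.t. the logit
            gw += err * x
            gb += err
        w -= lr * gw / n
        b -= lr * gb / n
    return (w, b)


def dice(pixels, labels, weights):
    """Dice overlap between thresholded predictions and the ground-truth mask."""
    w, b = weights
    pred = [1 if sigmoid(w * x + b) >= 0.5 else 0 for x in pixels]
    tp = sum(1 for p, y in zip(pred, labels) if p == 1 and y == 1)
    denom = sum(pred) + sum(labels)
    return 2.0 * tp / denom if denom else 1.0


def synthetic_modality(seed, contrast, n=200):
    """Hypothetical 1-D stand-in for one MRI contrast: tumour pixels are shifted by `contrast`."""
    rng = random.Random(seed)
    labels = [1 if rng.random() < 0.3 else 0 for _ in range(n)]
    pixels = [rng.gauss(contrast if y else 0.0, 1.0) for y in labels]
    return pixels, labels


# Hypothetical tumour/background contrast per modality, chosen only for illustration.
CONTRASTS = {"T1": 1.0, "T1c": 3.0, "T2": 2.0, "FLAIR": 2.5}
train_sets = {m: synthetic_modality(i, c) for i, (m, c) in enumerate(CONTRASTS.items())}
test_sets = {m: synthetic_modality(100 + i, c) for i, (m, c) in enumerate(CONTRASTS.items())}

# 1) Train one single-modality model per contrast, from scratch.
single = {m: train(*train_sets[m]) for m in CONTRASTS}
single_scores = {m: dice(*test_sets[m], single[m]) for m in CONTRASTS}

# 2) Pick the best single-modality model (on held-out data) as the transfer source.
source = max(single_scores, key=single_scores.get)

# 3) Initialise every other modality's model with the source weights and fine-tune briefly.
transferred = {
    m: train(*train_sets[m], weights=single[source], epochs=20)
    for m in CONTRASTS
    if m != source
}
transfer_scores = {m: dice(*test_sets[m], transferred[m]) for m in transferred}

for m in transferred:
    print(f"{m}: scratch={single_scores[m]:.3f}  transfer from {source}={transfer_scores[m]:.3f}")
```

Because the model and data are toys, the printed comparison says nothing about the paper's results; the sketch only shows the shape of the single-modality-then-transfer workflow. In a real setting the source weights would be those of the trained adversarial segmentation network, loaded into an identically shaped model for each target modality.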
