Dylan Fagot

IRIT

A Quasi-Newton algorithm on the orthogonal manifold for NMF with transform learning

Nov 06, 2018

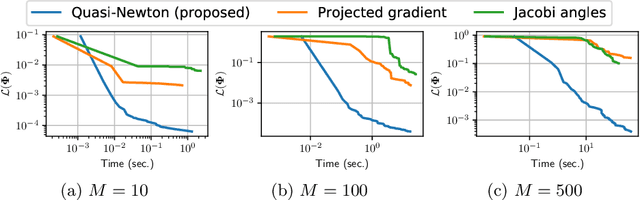

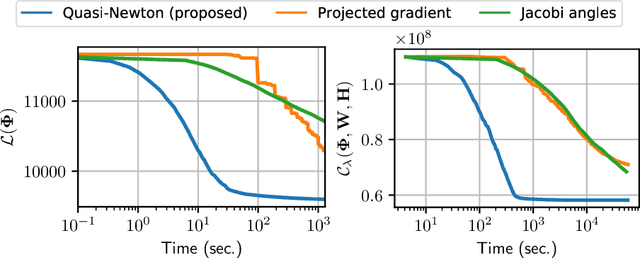

Abstract: Nonnegative matrix factorization (NMF) is a popular method for audio spectral unmixing. While NMF is traditionally applied to off-the-shelf time-frequency representations based on the short-time Fourier or cosine transforms, the ability to learn transforms from raw data is attracting increasing attention. However, learning the transform adds significant computational overhead. When the transform is assumed orthogonal (like the Fourier or cosine transforms), learning it yields a non-convex optimization problem on the orthogonal matrix manifold. In this paper, we derive a quasi-Newton method on the manifold using sparse approximations of the Hessian. Experiments on synthetic and real audio data show that the proposed algorithm outperforms state-of-the-art first-order and coordinate-descent methods by orders of magnitude. A Python package for fast TL-NMF is released online at https://github.com/pierreablin/tlnmf.
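To illustrate what optimizing "on the orthogonal matrix manifold" involves, here is a minimal sketch of a plain first-order step that keeps the transform orthogonal. It is not the quasi-Newton method of the paper and does not use the `tlnmf` package: the toy least-squares objective, the step size, and the names `loss`, `orthogonal_descent_step`, and `run_demo` are all assumptions made for illustration. The update multiplies the current transform by the matrix exponential of a skew-symmetric matrix, which is itself orthogonal.

```python
import numpy as np
from scipy.linalg import expm


def loss(phi, x, y):
    """Toy objective (an assumption): 0.5 * ||phi @ x - y||_F^2."""
    return 0.5 * np.linalg.norm(phi @ x - y) ** 2


def orthogonal_descent_step(phi, x, y, step=1e-3):
    """One gradient step that stays on the orthogonal manifold."""
    # Euclidean gradient of the toy objective with respect to phi.
    euclid_grad = (phi @ x - y) @ x.T
    # Skew-symmetric part of grad @ phi.T gives the search direction.
    m = euclid_grad @ phi.T
    skew = 0.5 * (m - m.T)
    # expm of a skew-symmetric matrix is orthogonal, so phi stays orthogonal.
    return expm(-step * skew) @ phi


def run_demo(n=4, m=20, n_iter=500, seed=0):
    rng = np.random.default_rng(seed)
    x = rng.standard_normal((n, m))
    # Random orthogonal target transform, obtained from a QR decomposition.
    phi_true, _ = np.linalg.qr(rng.standard_normal((n, n)))
    y = phi_true @ x
    phi = np.eye(n)
    losses = [loss(phi, x, y)]
    for _ in range(n_iter):
        phi = orthogonal_descent_step(phi, x, y)
        losses.append(loss(phi, x, y))
    return phi, losses
```

Calling `run_demo()` returns the final transform and the loss history. A quasi-Newton variant would rescale the skew-symmetric direction with a Hessian approximation before applying the exponential, which this sketch does not attempt.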

Nonnegative Matrix Factorization with Transform Learning

Dec 15, 2017

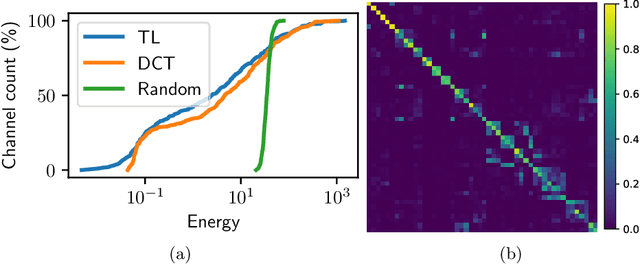

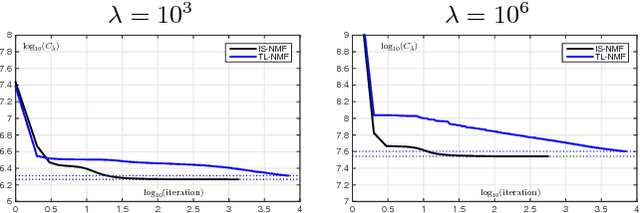

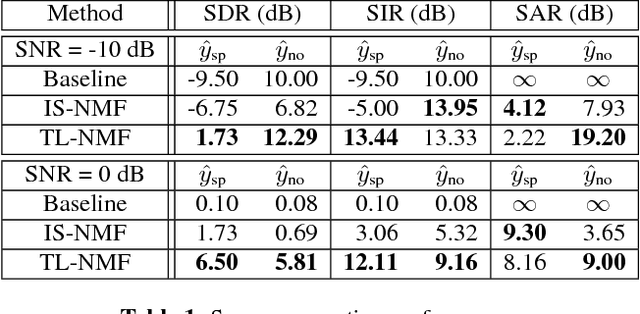

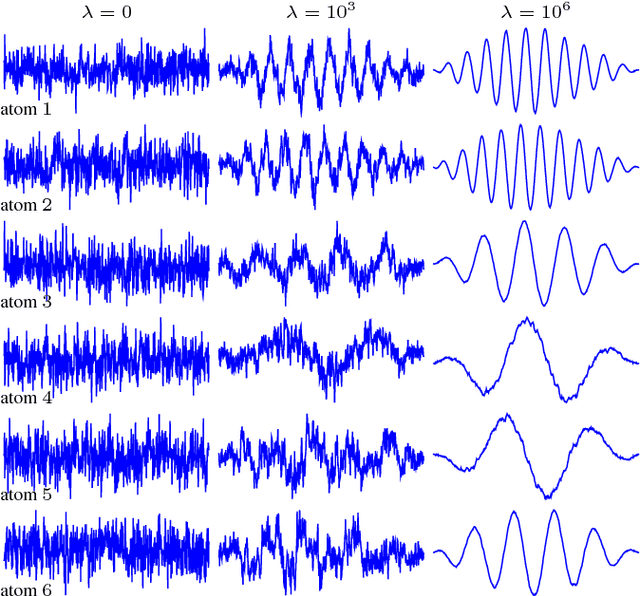

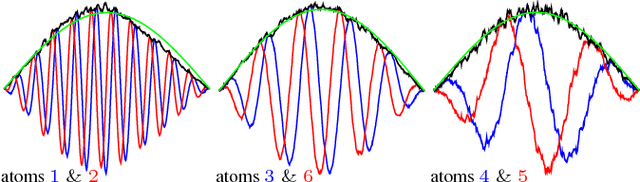

Abstract: Traditional NMF-based signal decomposition relies on the factorization of spectral data, which is typically computed by means of a short-time frequency transform. In this paper, we propose to relax the choice of a pre-fixed transform and learn a short-time orthogonal transform together with the factorization. To this end, we formulate a regularized optimization problem reminiscent of conventional NMF, yet with the transform as an additional unknown parameter, and design a novel block-descent algorithm that finds stationary points of this objective function. The proposed joint transform learning and factorization approach is tested in two audio signal processing experiments, illustrating its conceptual and practical benefits.
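The factorization half of such a block-descent scheme can be sketched as follows, with the transform held fixed. The abstract does not state the divergence, the regularizer, or the update rules, so everything here is an assumption: the sketch uses a fixed orthogonal DCT as the transform, the Itakura-Saito divergence as the fit measure, unregularized majorization-minimization multiplicative updates, and hypothetical names (`is_divergence`, `nmf_step`, `run_demo`). The transform update, which is the other block, is omitted.

```python
import numpy as np
from scipy.fft import dct

EPS = 1e-12  # small constant to avoid division by zero and log(0)


def is_divergence(v, v_hat):
    """Itakura-Saito divergence between nonnegative arrays (assumed fit measure)."""
    ratio = v / v_hat
    return float(np.sum(ratio - np.log(ratio) - 1.0))


def nmf_step(v, w, h):
    """One pass of multiplicative updates on W then H, transform held fixed."""
    v_hat = w @ h + EPS
    w = w * np.sqrt(((v / v_hat**2) @ h.T) / ((1.0 / v_hat) @ h.T))
    v_hat = w @ h + EPS
    h = h * np.sqrt((w.T @ (v / v_hat**2)) / (w.T @ (1.0 / v_hat)))
    return w, h


def run_demo(n=16, t=50, k=3, n_iter=100, seed=0):
    rng = np.random.default_rng(seed)
    # Columns of y stand in for short-time frames of a signal (random toy data).
    y = rng.standard_normal((n, t))
    # Orthogonal DCT-II matrix, used as a fixed stand-in for the learned transform.
    phi = dct(np.eye(n), norm="ortho", axis=0)
    # Nonnegative "spectral" data: squared transform coefficients.
    v = (phi @ y) ** 2 + EPS
    w = rng.random((n, k)) + 0.1
    h = rng.random((k, t)) + 0.1
    costs = [is_divergence(v, w @ h + EPS)]
    for _ in range(n_iter):
        w, h = nmf_step(v, w, h)
        costs.append(is_divergence(v, w @ h + EPS))
    return phi, w, h, costs
```

In a full joint scheme, each call to `nmf_step` would alternate with an update of `phi` that keeps it orthogonal, and the data `v` would be recomputed from the new transform.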
