Duhyeon Bang

Editable Generative Adversarial Networks: Generating and Editing Faces Simultaneously

Jul 20, 2018

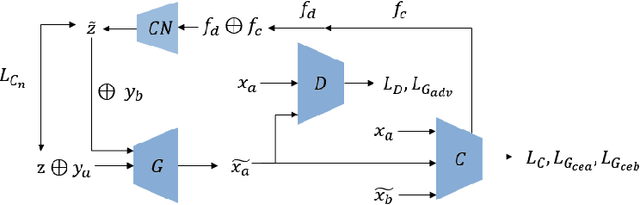

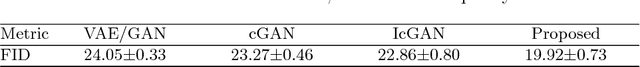

Abstract: We propose a novel framework for simultaneously generating and manipulating face images with desired attributes. While state-of-the-art attribute editing techniques achieve impressive performance in creating realistic attribute effects, they address only the image editing problem, using the input image as the model's condition. Recently, several studies have attempted to tackle both novel face generation and attribute editing with a single solution. However, their image quality is still unsatisfactory. Our goal is to develop a single unified model that can both create and edit high-quality face images with desired attributes. The key idea of our work is to decompose the image into a latent vector and an attribute vector in a low-dimensional representation, and then use the GAN framework to map this low-dimensional representation to the image. In this way, we address both the generation and the editing problem by learning the generator. In qualitative and quantitative evaluations, the proposed algorithm outperforms recent algorithms addressing the same problem. We also show that our model achieves performance competitive with the state-of-the-art attribute editing technique in terms of attribute editing quality.
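The decomposition described in the abstract can be illustrated with a toy sketch. Everything here is hypothetical: the linear `generate` function, the `weights` matrix, and the vector sizes are stand-ins chosen for illustration, not the paper's network. The point is only that one generator taking a latent vector plus an attribute vector covers both tasks: sampling a new latent vector generates a new face, while holding the latent vector fixed and changing an attribute edits an existing one.

```python
def generate(latent, attributes, weights):
    """Toy linear 'generator' (an assumption, not the paper's model).

    Maps the concatenated [latent, attributes] code to a flat 'image'.
    """
    code = list(latent) + list(attributes)
    return [sum(w * c for w, c in zip(row, code)) for row in weights]


# Hypothetical sizes: 2 latent dims, 2 binary attributes, 3 "pixels".
weights = [
    [0.5, -1.0, 2.0, 0.0],
    [1.5, 0.5, 0.0, 3.0],
    [-0.5, 1.0, 1.0, 1.0],
]

latent = [0.2, -0.4]

# Editing: same latent vector, second attribute switched on.
original = generate(latent, [1.0, 0.0], weights)
edited = generate(latent, [1.0, 1.0], weights)

# Generation: a different latent vector with the original attributes.
novel = generate([0.9, 0.1], [1.0, 0.0], weights)
```

In this linear toy, the edit changes the output only through the weights attached to the modified attribute, which is the behavior a disentangled latent/attribute code is meant to approximate.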

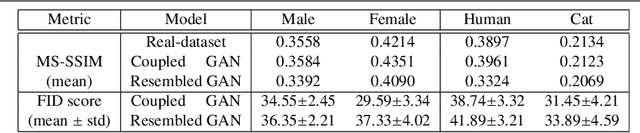

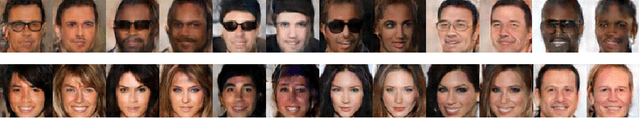

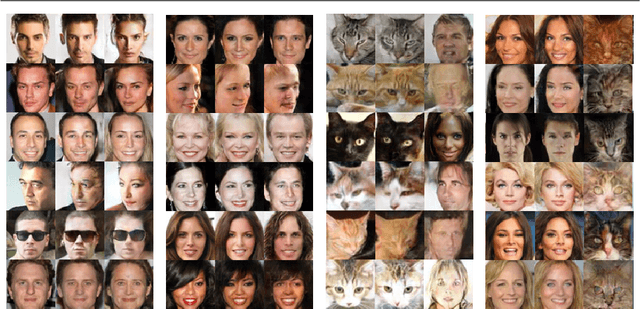

Resembled Generative Adversarial Networks: Two Domains with Similar Attributes

Jul 03, 2018

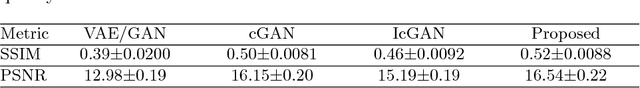

Abstract: We propose a novel algorithm, namely Resembled Generative Adversarial Networks (GAN), that simultaneously generates data in two different domains that resemble each other. Although recent GAN algorithms have achieved great success in learning cross-domain relationships, their application is limited to domain transfer, which requires an input image. The first attempt to tackle data generation for two domains was CoGAN. However, its solution is inherently vulnerable to varying levels of domain similarity. Unlike CoGAN, our Resembled GAN implicitly induces the two generators to match the feature covariance of both domains, which leads them to share semantic attributes. Hence, we effectively handle a wide range of structural and semantic similarities between two domains. Based on experimental analysis on various datasets, we verify that the proposed algorithm is effective for generating two domains with similar attributes.
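To make "matching feature covariance" concrete, here is a minimal sketch of how a covariance mismatch between two feature batches could be measured. The abstract says the matching is induced implicitly and does not give a loss, so the explicit squared Frobenius distance below is a swapped-in illustration, and the names `covariance` and `covariance_gap` are hypothetical.

```python
def covariance(features):
    """Sample covariance matrix of a batch of feature vectors (rows)."""
    n = len(features)
    d = len(features[0])
    mean = [sum(row[j] for row in features) / n for j in range(d)]
    return [
        [
            sum((row[i] - mean[i]) * (row[j] - mean[j]) for row in features)
            / (n - 1)
            for j in range(d)
        ]
        for i in range(d)
    ]


def covariance_gap(feats_a, feats_b):
    """Squared Frobenius distance between the two batch covariances.

    Assumption: used here only as an illustrative mismatch measure.
    """
    cov_a = covariance(feats_a)
    cov_b = covariance(feats_b)
    return sum(
        (x - y) ** 2
        for row_a, row_b in zip(cov_a, cov_b)
        for x, y in zip(row_a, row_b)
    )


# Hypothetical feature batches from two domains.
domain_a = [[0.0, 0.0], [1.0, 1.0], [2.0, 0.0]]
shifted_b = [[10.0, 5.0], [11.0, 6.0], [12.0, 5.0]]  # same shape, new mean
scaled_b = [[0.0, 0.0], [3.0, 3.0], [6.0, 0.0]]      # different spread

gap_shifted = covariance_gap(domain_a, shifted_b)
gap_scaled = covariance_gap(domain_a, scaled_b)
```

A covariance-based measure ignores differences in the feature means, so two domains can differ in overall appearance while still being pushed toward the same correlation structure between features.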

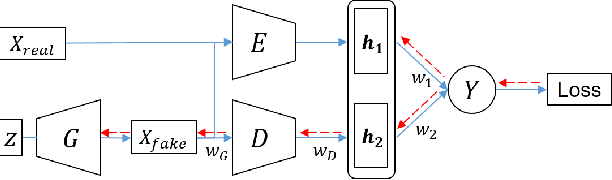

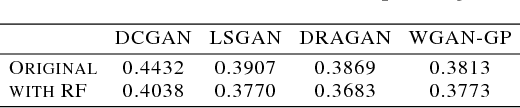

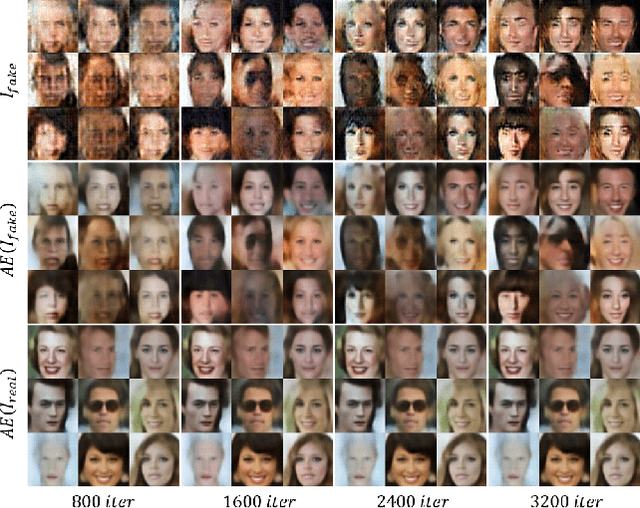

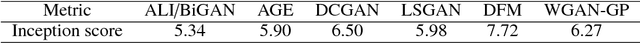

Improved Training of Generative Adversarial Networks Using Representative Features

Jul 03, 2018

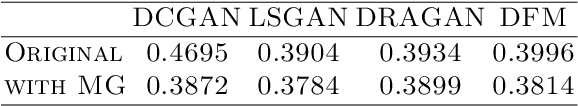

Abstract: Despite the success of generative adversarial networks (GANs) in image generation, the trade-off between visual quality and image diversity remains a significant issue. This paper achieves both aims simultaneously by improving the stability of GAN training. The key idea of the proposed approach is to implicitly regularize the discriminator using representative features. Focusing on the fact that the standard GAN minimizes reverse Kullback-Leibler (KL) divergence, we transfer the representative feature, which is extracted from the data distribution using a pre-trained autoencoder (AE), to the discriminator of standard GANs. Because the AE learns to minimize forward KL divergence, our GAN training with representative features is influenced by both reverse and forward KL divergence. Consequently, extensive evaluations verify that the proposed approach improves the visual quality and diversity of state-of-the-art GANs.
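The asymmetry between forward and reverse KL divergence that the abstract relies on can be seen in a small discrete example. This is only an illustration of the two divergences, not the paper's training procedure; the distributions `p_data` and `q_model` are made-up values representing two-mode data and a model that has nearly dropped one mode.

```python
import math


def kl(p, q):
    """KL(p || q) for discrete distributions given as equal-length lists."""
    return sum(pi * math.log(pi / qi) for pi, qi in zip(p, q) if pi > 0)


# Hypothetical two-mode data distribution and a nearly mode-collapsed model.
p_data = [0.5, 0.5]
q_model = [0.98, 0.02]

forward_kl = kl(p_data, q_model)  # KL(p_data || q_model)
reverse_kl = kl(q_model, p_data)  # KL(q_model || p_data)
```

Here the forward divergence is the larger of the two, because it weights the log-ratio by the data distribution and so penalizes the model heavily for assigning little mass to a real mode. The reverse divergence weights by the model and is more tolerant of a dropped mode, which is why combining both influences is a plausible route to better diversity without giving up sample quality.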

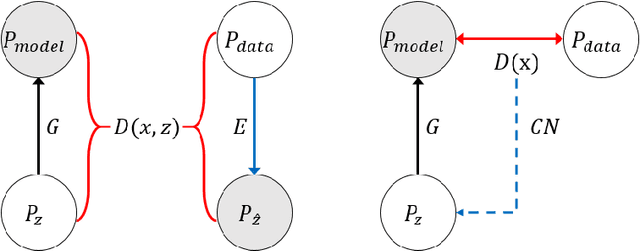

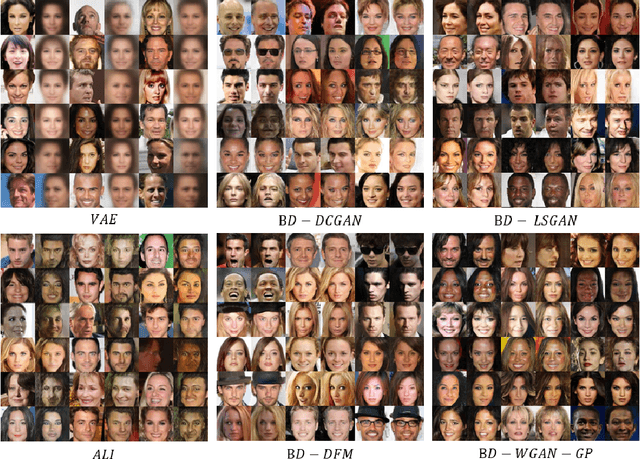

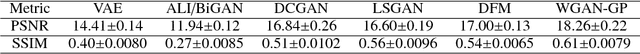

High Quality Bidirectional Generative Adversarial Networks

May 28, 2018

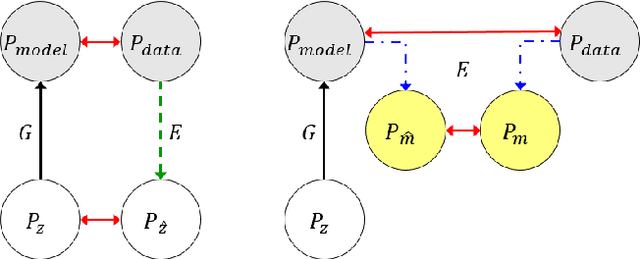

Abstract: Generative adversarial networks (GANs) have achieved outstanding success in generating high-quality data. Focusing on the generation process, existing GANs investigate a unidirectional mapping from the latent vector to the data. Later, various studies pointed out that the latent space of GANs is semantically meaningful and can be used for advanced data analysis and manipulation. To analyze real data in the latent space of GANs, it is necessary to investigate the inverse generation mapping from the data to the latent vector. To tackle this problem, bidirectional generative models introduce an encoder to enable the inverse path of the generation process. Unfortunately, this effort degrades generation quality because the imperfect generator interferes with the encoder training and vice versa. In this paper, we propose a new inference model that estimates the latent vector from the features of the GAN discriminator. While existing bidirectional models learn an image-to-latent translation, our algorithm formulates the inference mapping as a feature-to-latent translation. It is important to note that the training of our model is independent of the GAN training. Owing to this independence, the proposed algorithm can generate high-quality samples identical to those of unidirectional GANs and also reconstruct the original data faithfully. Moreover, our algorithm can be applied to any unidirectional GAN, even pre-trained GANs.
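The idea of learning a feature-to-latent mapping separately from GAN training can be sketched with a scalar toy. All components are assumptions made for illustration: `generator` and `disc_feature` are fixed linear functions standing in for a frozen, already-trained generator and a discriminator feature layer, and the inference map is fitted by ordinary least squares rather than by a neural network.

```python
import random


def generator(z):
    """Frozen toy generator (hypothetical): latent scalar -> data scalar."""
    return 2.0 * z + 1.0


def disc_feature(x):
    """Frozen toy discriminator feature (hypothetical): data -> feature."""
    return 3.0 * x


def fit_inference(num_samples=200, seed=0):
    """Fit z ~ slope * feature + intercept by least squares.

    Training pairs come from the frozen generator, so neither the
    generator nor the discriminator is updated.
    """
    rng = random.Random(seed)
    zs = [rng.gauss(0.0, 1.0) for _ in range(num_samples)]
    feats = [disc_feature(generator(z)) for z in zs]
    mean_f = sum(feats) / len(feats)
    mean_z = sum(zs) / len(zs)
    var_f = sum((f - mean_f) ** 2 for f in feats)
    cov_fz = sum((f - mean_f) * (z - mean_z) for f, z in zip(feats, zs))
    slope = cov_fz / var_f
    intercept = mean_z - slope * mean_f
    return slope, intercept


slope, intercept = fit_inference()


def infer_latent(x):
    """Estimate the latent value from the discriminator feature of x."""
    return slope * disc_feature(x) + intercept


def reconstruct(x):
    """Map data to latent space and back through the frozen generator."""
    return generator(infer_latent(x))
```

Because only the inference map is fitted, the generator's samples are unchanged by this step, which mirrors the abstract's claim that generation quality is preserved.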

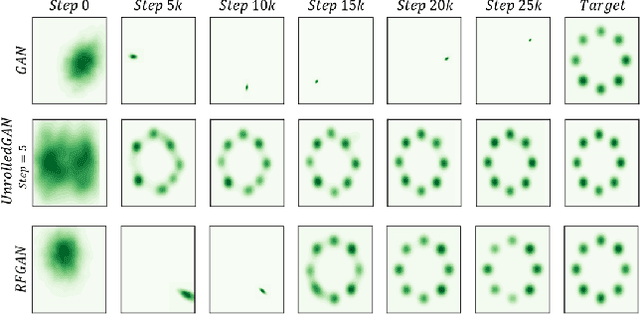

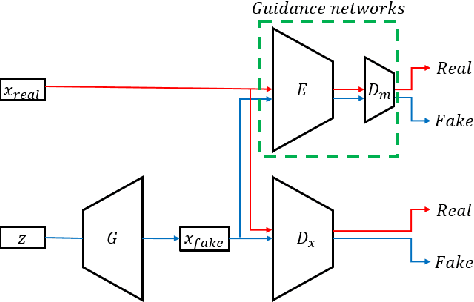

MGGAN: Solving Mode Collapse using Manifold Guided Training

Apr 12, 2018

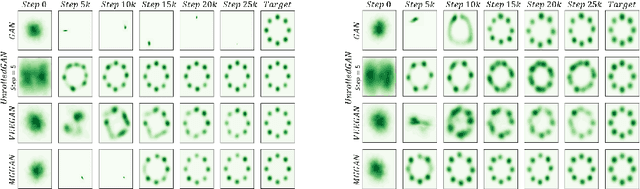

Abstract: Mode collapse is a critical problem in training generative adversarial networks. To alleviate mode collapse, several recent studies introduce new objective functions, network architectures, or alternative training schemes. However, their gains often come at the cost of image quality. In this paper, we propose a new algorithm, namely a manifold guided generative adversarial network (MGGAN), which adds a guidance network to an existing GAN architecture to induce the generator to learn all modes of the data distribution. Based on extensive evaluations, we show that our algorithm resolves mode collapse without losing image quality. In particular, we demonstrate that our algorithm is easily extendable to various existing GANs. Experimental analysis confirms that the proposed algorithm is an effective and efficient tool for training GANs.
