Donghee Paek

Mixer-based lidar lane detection network and dataset for urban roads

Oct 21, 2021

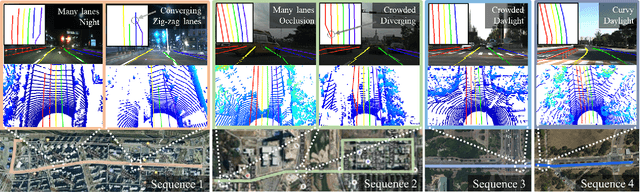

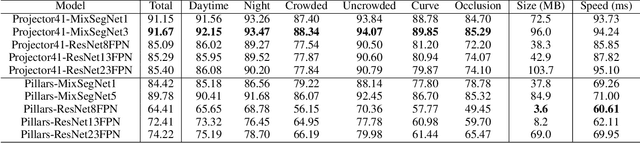

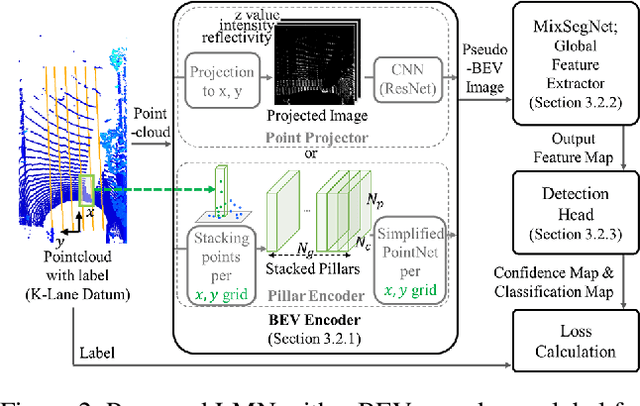

Abstract: Accurate lane detection under various road conditions is a critical function for autonomous driving. When lane lines detected in a front-camera image are projected into a bird's-eye view (BEV) for motion planning, the resulting lane lines are often distorted. In addition, convolutional neural network (CNN)-based feature extractors often lose resolution when the receptive field is enlarged to detect global features such as lane lines. In contrast, a Lidar point cloud shows little distortion when projected into the BEV. Since lane lines are thin and stretch across the entire BEV image while occupying only a small portion of it, they should be detected as a global feature at high resolution. In this paper, we propose the Lane Mixer Network (LMN), which extracts local features from the Lidar point cloud, recognizes global features, and detects lane lines using a BEV encoder, a Mixer-based global feature extractor, and a detection head, respectively. In addition, we provide K-Lane, the world's first large urban lane dataset for Lidar, which contains up to 6 lanes under various urban road conditions. We demonstrate that the proposed LMN achieves state-of-the-art performance on K-Lane, with an F1 score of 91.67%. K-Lane, the LMN training code, pre-trained models, and the complete dataset development platform are available on GitHub.
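
To make the BEV argument concrete, the following is a minimal sketch of binning Lidar points into a top-down grid. It is not the LMN BEV encoder or the K-Lane preprocessing: the function name `project_to_bev`, the grid ranges, and the 1 m cell size are all hypothetical choices made for illustration. Because each point is placed by its metric (x, y) position, no perspective warp is involved, and a thin lane line ends up occupying only a small share of the grid cells.

```python
def project_to_bev(points, x_range=(0.0, 4.0), y_range=(-2.0, 2.0), cell=1.0):
    """Bin (x, y, z) lidar points into a top-down count grid.

    Rows index forward distance x, columns index lateral offset y.
    Ranges and cell size are illustrative assumptions, not K-Lane settings.
    Points outside the ranges are dropped.
    """
    n_rows = int((x_range[1] - x_range[0]) / cell)
    n_cols = int((y_range[1] - y_range[0]) / cell)
    grid = [[0] * n_cols for _ in range(n_rows)]
    for x, y, _z in points:
        inside_x = x_range[0] <= x < x_range[1]
        inside_y = y_range[0] <= y < y_range[1]
        if not (inside_x and inside_y):
            continue
        row = int((x - x_range[0]) / cell)
        col = int((y - y_range[0]) / cell)
        grid[row][col] += 1
    return grid


# Toy input: four points along one straight lane line, plus one point
# that lies beyond the grid and is therefore ignored.
points = [
    (0.5, -1.5, 0.0),
    (1.5, -1.5, 0.0),
    (2.5, -1.5, 0.1),
    (3.5, -1.5, 0.0),
    (9.0, 0.0, 0.0),
]
grid = project_to_bev(points)

# Share of grid cells that contain at least one point.
occupied = sum(1 for row in grid for count in row if count > 0)
fraction = occupied / (len(grid) * len(grid[0]))
```

In this toy grid the lane line runs through every row but fills only one column, which illustrates why a detector needs both a grid-wide (global) view and fine resolution.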
