Dongbo Huang

Know in AdVance: Linear-Complexity Forecasting of Ad Campaign Performance with Evolving User Interest

May 17, 2024

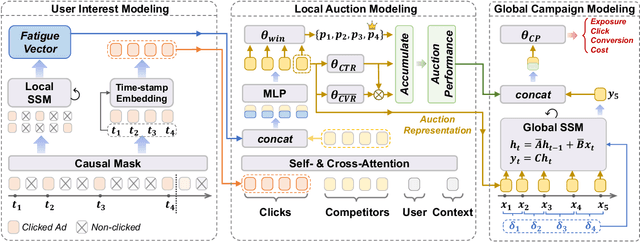

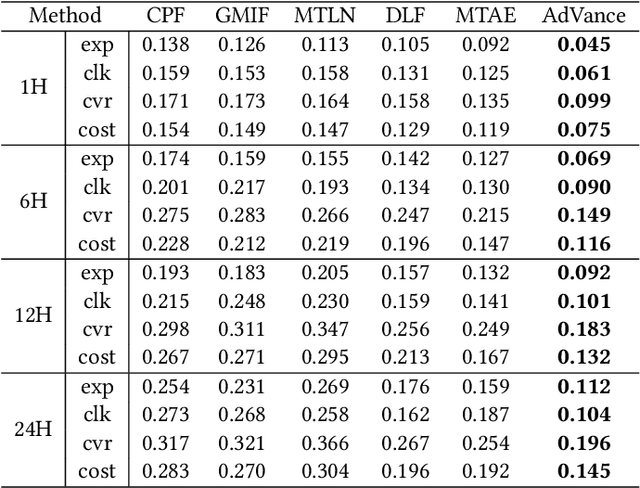

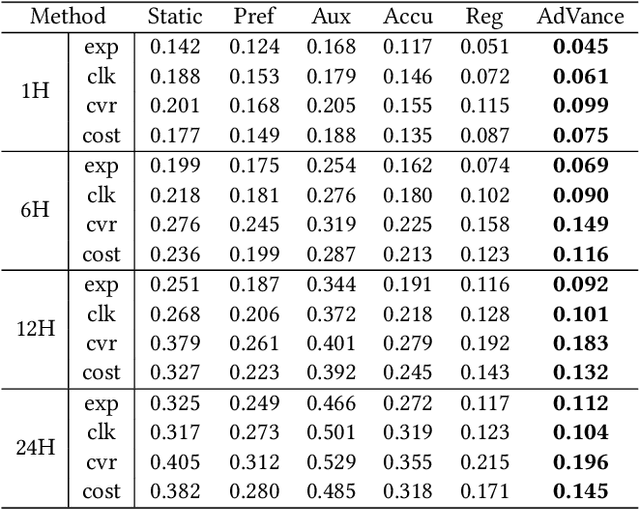

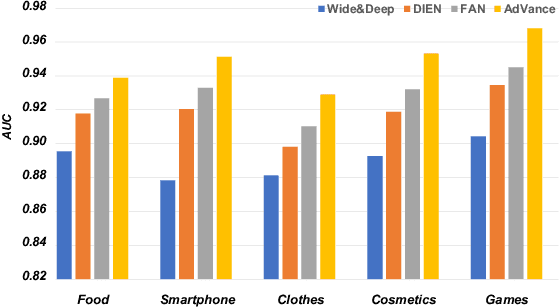

Abstract: Real-time Bidding (RTB) advertisers wish to *know in advance* the expected cost and yield of ad campaigns to avoid trial-and-error expenses. However, Campaign Performance Forecasting (CPF), a sequence modeling task involving tens of thousands of ad auctions, poses challenges of evolving user interest, auction representation, and long context, making coarse-grained and static-modeling methods sub-optimal. We propose *AdVance*, a time-aware framework that integrates local auction-level and global campaign-level modeling. User preference and fatigue are disentangled using a time-positioned sequence of clicked items and a concise vector of all displayed items. Cross-attention, conditioned on the fatigue vector, captures the dynamics of user interest toward each candidate ad. Bidders compete with each other, forming a complete graph similar to that of the self-attention mechanism. Hence, we employ a Transformer Encoder to compress each auction into an embedding by solving auxiliary tasks. These sequential embeddings are then summarized by a conditional state space model (SSM) to capture long-range dependencies while maintaining global linear complexity. Because the time intervals between auctions are irregular, we make the SSM's parameters dependent on the current auction embedding and the time interval. We further condition the SSM's global predictions on the accumulation of local results. Extensive evaluations and ablation studies demonstrate its superiority over state-of-the-art methods. AdVance has been deployed on the Tencent Advertising platform, and A/B tests show a 4.5% uplift in Average Revenue per User (ARPU).
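To make the idea of interval-dependent SSM parameters concrete, here is a minimal sketch of a scalar linear state space model scanned over irregularly spaced inputs. It is not the AdVance model: the function name `ssm_scan`, the scalar parameters `a` and `b`, and the zero-order-hold discretization are all assumptions chosen for illustration, and the dependence on the auction embedding described in the abstract is omitted. It only shows how a per-step decay can be derived from the time gap while the scan stays linear in sequence length.

```python
import math


def ssm_scan(inputs, intervals, a=1.0, b=1.0, h0=0.0):
    """Scan the continuous system h' = -a*h + b*u over irregular time gaps.

    Hypothetical illustration: each input u is held constant for its
    interval dt (zero-order hold), so the discrete update is exact for
    this scalar system. Cost is one update per step, i.e. linear in the
    number of inputs.
    """
    h = h0
    states = []
    for u, dt in zip(inputs, intervals):
        decay = math.exp(-a * dt)          # longer gaps forget more of h
        h = decay * h + (1.0 - decay) / a * b * u
        states.append(h)
    return states


# Two half-length steps with the same input match one full-length step.
two_steps = ssm_scan([3.0, 3.0], [0.5, 0.5])[-1]
one_step = ssm_scan([3.0], [1.0])[-1]
```

With a zero gap the state is left unchanged, and with a very long gap it approaches the steady state `b * u / a`, which is the behavior one would want from a time-aware recurrence.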

Interaction-aware Factorization Machines for Recommender Systems

Feb 26, 2019

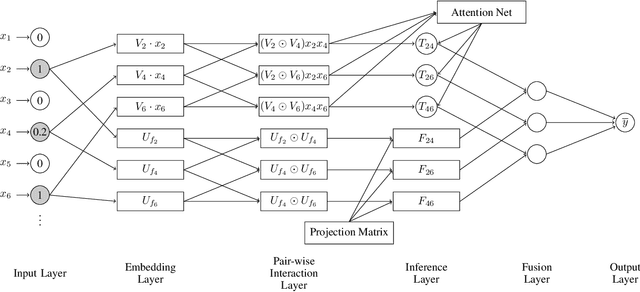

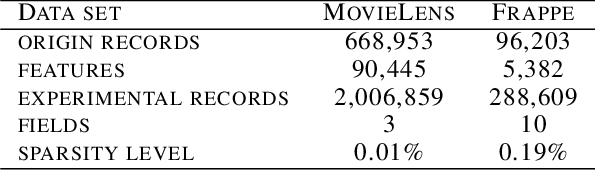

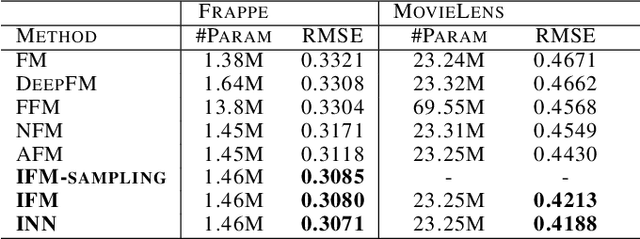

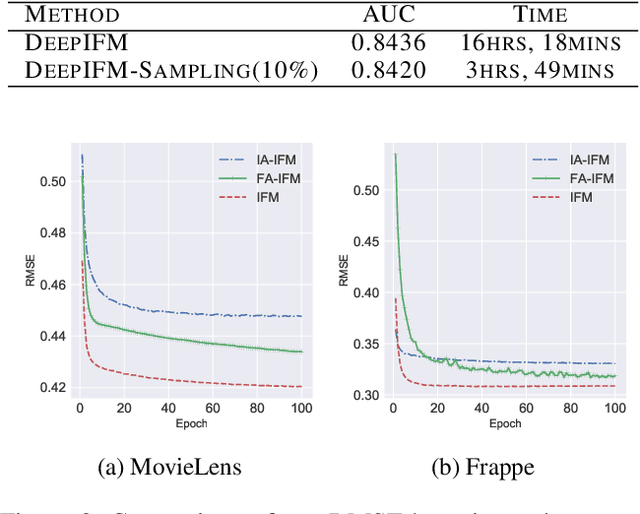

Abstract: Factorization Machine (FM) is a widely used supervised learning approach that effectively models feature interactions. Despite the successful application of FM and its many deep learning variants, treating every feature interaction equally may degrade performance. For example, the interactions of a useless feature may introduce noise; the importance of a feature may also differ when it interacts with different features. In this work, we propose a novel model named *Interaction-aware Factorization Machine* (IFM) by introducing an Interaction-Aware Mechanism (IAM), which comprises the *feature aspect* and the *field aspect*, to learn flexible interactions on two levels. The feature aspect learns feature interaction importance via an attention network, while the field aspect learns the feature interaction effect as a parametric similarity between the feature interaction vector and the corresponding field interaction prototype. IFM introduces more structured control and learns feature interaction importance in a stratified manner, which allows for more leverage in tweaking the interactions on both feature-wise and field-wise levels. In addition, we give a more generalized architecture and propose Interaction-aware Neural Network (INN) and DeepIFM to capture higher-order interactions. To further improve both the performance and efficiency of IFM, a sampling scheme is developed to select interactions based on the field aspect importance. Experimental results on two well-known datasets show the superiority of the proposed models over state-of-the-art methods.
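As background for the abstract above, the following sketch shows the second-order interaction term of a plain Factorization Machine, with an optional per-pair weight hook. It is not IFM: the function names, the `weight` callback, and the toy values are assumptions for illustration only. The weight hook merely indicates where a learned importance for each feature pair (for example from an attention network) could enter; with no weights, every interaction is treated equally, which is the baseline the abstract argues against.

```python
from itertools import combinations


def dot(a, b):
    """Inner product of two equal-length vectors."""
    return sum(p * q for p, q in zip(a, b))


def fm_pairwise_naive(x, V, weight=None):
    """Sum over i<j of weight(i, j) * <V[i], V[j]> * x[i] * x[j].

    `weight` is a hypothetical hook; None means every pair counts as 1.
    """
    total = 0.0
    for i, j in combinations(range(len(x)), 2):
        w = 1.0 if weight is None else weight(i, j)
        total += w * dot(V[i], V[j]) * x[i] * x[j]
    return total


def fm_pairwise_fast(x, V):
    """Unweighted pairwise term in O(k * n) via the standard FM identity."""
    total = 0.0
    for f in range(len(V[0])):
        s = sum(V[i][f] * x[i] for i in range(len(x)))
        s_sq = sum((V[i][f] * x[i]) ** 2 for i in range(len(x)))
        total += s * s - s_sq
    return 0.5 * total


# Toy input: three features with two-dimensional latent vectors.
x = [1.0, 0.0, 2.0]
V = [[0.1, 0.2], [0.3, 0.4], [0.5, -0.6]]
naive = fm_pairwise_naive(x, V)
fast = fm_pairwise_fast(x, V)
```

Note that the linear-time identity only applies when all pairs share the same weight; once each pair gets its own importance, the pairs have to be enumerated (or sampled) explicitly.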
