Domenico Iuso

MIRT: a simultaneous reconstruction and affine motion compensation technique for four dimensional computed tomography (4DCT)

Feb 07, 2024

Abstract: In four-dimensional computed tomography (4DCT), 3D images of moving or deforming samples are reconstructed from a set of 2D projection images. Recent techniques for iterative motion-compensated reconstruction either require a reference acquisition or alternate between image reconstruction and motion estimation steps. In these methods, the motion estimation step estimates either complete deformation vector fields (DVFs) or a limited set of parameters corresponding to affine motion, including rigid motion or scaling. Most of these approaches rely on nested iterations, which incur significant computational expense. Notably, despite the direct benefits of an analytical formulation and a substantial reduction in computational complexity, parameterizing DVFs for general affine motion has not been explored in CT imaging. In this work, we propose the Motion-compensated Iterative Reconstruction Technique (MIRT), an efficient iterative reconstruction scheme that combines image reconstruction and affine motion estimation in a single update step, based on the analytical gradients of the motion towards both the reconstruction and the affine motion parameters. While most state-of-the-art 4DCT methods have not been tested on real data, results from simulation and real experiments show that our method outperforms state-of-the-art CT reconstruction methods with affine motion correction in computational feasibility and projection distance. In particular, this allows accurate reconstruction of a real microscale diamond in the presence of motion from experimentally acquired projection radiographs, which leads to a novel application of 4DCT.
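To illustrate why affine motion needs far fewer parameters than a complete DVF, the following is a minimal sketch, not the MIRT implementation. It assumes the affine motion is written as x' = A x + t, so that the displacement at each voxel is u(x) = (A - I) x + t. The function name `affine_dvf` and the use of voxel-index coordinates are assumptions made for illustration only.

```python
import numpy as np

def affine_dvf(shape, A, t):
    """Dense displacement field u(x) = (A - I) x + t on a voxel grid.

    shape : grid size, e.g. (nx, ny, nz)
    A     : (d, d) affine matrix (rotation, scaling, shear), d = len(shape)
    t     : (d,) translation vector
    Returns an array of shape (*shape, d), one displacement per voxel.
    """
    d = len(shape)
    A = np.asarray(A, dtype=np.float64)
    t = np.asarray(t, dtype=np.float64)
    # Voxel coordinates, stacked so the last axis holds (x, y, z).
    axes = [np.arange(n, dtype=np.float64) for n in shape]
    grid = np.stack(np.meshgrid(*axes, indexing="ij"), axis=-1)
    # Apply (A - I) to every coordinate vector, then add the translation.
    return grid @ (A - np.eye(d)).T + t

# A 3D affine motion has 9 + 3 = 12 parameters, whereas a complete DVF
# stores 3 values per voxel.
dvf = affine_dvf((4, 5, 6), 1.1 * np.eye(3), [0.5, 0.0, -0.5])
print(dvf.shape)  # (4, 5, 6, 3)
```

Because the whole field is determined by `A` and `t`, an estimation scheme only has to update those 12 numbers per time point rather than one vector per voxel.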

Voxel-wise classification for porosity investigation of additive manufactured parts with 3D unsupervised and (deeply) supervised neural networks

May 13, 2023

Abstract: Additive Manufacturing (AM) has emerged as a manufacturing process that allows the direct production of samples from digital models. To ensure that quality standards are met in all manufactured samples of a batch, X-ray computed tomography (X-CT) is often used in combination with automated anomaly detection. For the latter, deep learning (DL) anomaly detection techniques are increasingly used, as they can be trained to be robust to the material being analysed and resilient towards poor image quality. Unfortunately, most recent and popular DL models have been developed for 2D image processing, thereby disregarding valuable volumetric information. This study revisits recent supervised (UNet, UNet++, UNet 3+, MSS-UNet) and unsupervised (VAE, ceVAE, gmVAE, vqVAE) DL models for porosity analysis of AM samples from X-CT images and extends them to accept 3D input data with a 3D-patch pipeline for lower computational requirements, improved efficiency and generalisability. The supervised models were trained using the Focal Tversky loss to address the class imbalance that arises from the low porosity in the training datasets. The output of the unsupervised models is post-processed to reduce misclassifications caused by their inability to adequately represent the object surface. The findings were cross-validated in a 5-fold fashion and include: a performance benchmark of the DL models, an evaluation of the post-processing algorithm, and an evaluation of the effect of training supervised models with the output of unsupervised models. In a final performance benchmark on a test set with poor image quality, the best performing supervised model was MSS-UNet with an average precision of 0.808 $\pm$ 0.013, while the best unsupervised model was the post-processed ceVAE with 0.935 $\pm$ 0.001. The VAE/ceVAE models demonstrated superior capabilities, particularly when leveraging post-processing techniques.
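As a rough illustration of how a Focal Tversky loss addresses class imbalance, the sketch below computes it for a binary pore/background segmentation with NumPy. It is not the study's training code. The function name, the default weights `alpha=0.7` and `beta=0.3`, and the exponent convention `(1 - TI) ** gamma` with `gamma=0.75` are assumptions, since the abstract does not state the settings used.

```python
import numpy as np

def focal_tversky_loss(probs, target, alpha=0.7, beta=0.3, gamma=0.75, eps=1e-7):
    """Focal Tversky loss for binary voxel-wise segmentation.

    probs  : predicted foreground probabilities in [0, 1], any shape
    target : binary ground-truth mask of the same shape
    alpha  : weight on false negatives (missed pore voxels)
    beta   : weight on false positives
    gamma  : focusing exponent applied to (1 - Tversky index)
    """
    probs = np.asarray(probs, dtype=np.float64).ravel()
    target = np.asarray(target, dtype=np.float64).ravel()
    tp = np.sum(probs * target)            # soft true positives
    fn = np.sum((1.0 - probs) * target)    # soft false negatives
    fp = np.sum(probs * (1.0 - target))    # soft false positives
    tversky = (tp + eps) / (tp + alpha * fn + beta * fp + eps)
    # Guard against tiny negative values from floating-point rounding.
    return max(1.0 - tversky, 0.0) ** gamma

# Missing a pore voxel is penalised more than a false alarm when alpha > beta.
missed = focal_tversky_loss([1, 0, 0, 0], [1, 1, 0, 0])
false_alarm = focal_tversky_loss([1, 1, 0, 0], [1, 0, 0, 0])
print(missed > false_alarm)
```

Because the loss depends only on foreground overlap terms, the large number of correctly classified background voxels does not dilute it, which is the property that makes it suitable for low-porosity volumes.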

Breathing deformation model -- application to multi-resolution abdominal MRI

Oct 10, 2019

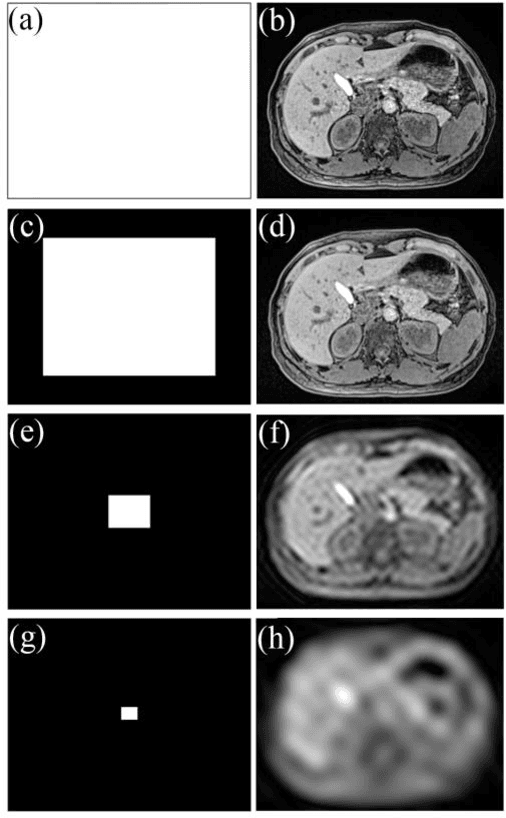

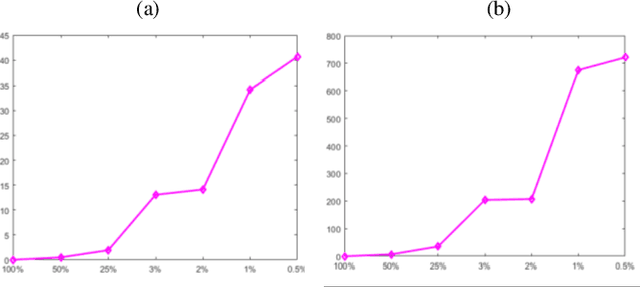

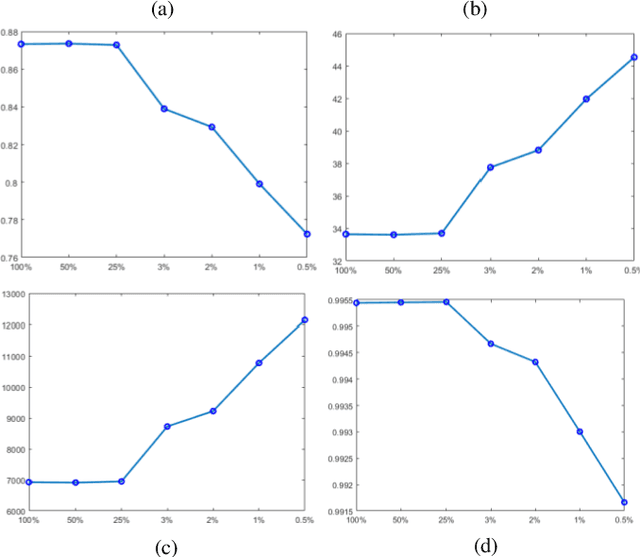

Abstract: Dynamic MRI is a technique for acquiring a series of images continuously to follow physiological changes over time. However, such fast imaging results in low-resolution images. In this work, an abdominal deformation model computed from dynamic low-resolution images has been applied to a previously acquired high-resolution image to generate dynamic high-resolution MRI. Dynamic low-resolution images were simulated at different breathing phases (inhale and exhale). Then, image registration between breathing time points was performed using the B-spline SyN deformable model with cross-correlation as the similarity metric. The deformation model between different breathing phases was estimated from highly undersampled data. This deformation model was then applied to the high-resolution images to obtain high-resolution images of the different breathing phases. The results indicated that the deformation model could be computed from images of relatively very low resolution.
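For readers unfamiliar with cross-correlation as a registration similarity metric, the following is a minimal sketch of a global normalized cross-correlation between two images. It is an illustration under stated assumptions, not the paper's pipeline: registration toolkits typically evaluate cross-correlation over local neighbourhoods, and the function name and `eps` parameter here are hypothetical.

```python
import numpy as np

def normalized_cross_correlation(fixed, moving, eps=1e-12):
    """Global normalized cross-correlation between two same-shaped images.

    Returns a value in [-1, 1]; values near 1 mean the images agree up to
    a linear intensity change (gain and offset).
    """
    f = np.asarray(fixed, dtype=np.float64).ravel()
    m = np.asarray(moving, dtype=np.float64).ravel()
    f = f - f.mean()   # remove intensity offset
    m = m - m.mean()
    denom = np.sqrt(np.sum(f * f) * np.sum(m * m))
    return float(np.sum(f * m) / (denom + eps))

# A brighter, rescaled copy of an image still correlates almost perfectly.
image = np.arange(24, dtype=np.float64).reshape(2, 3, 4)
print(normalized_cross_correlation(image, 2.0 * image + 5.0))
```

A registration algorithm would search for the deformation of the moving image that maximizes this score against the fixed image.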
