Dmitri Kovalenko

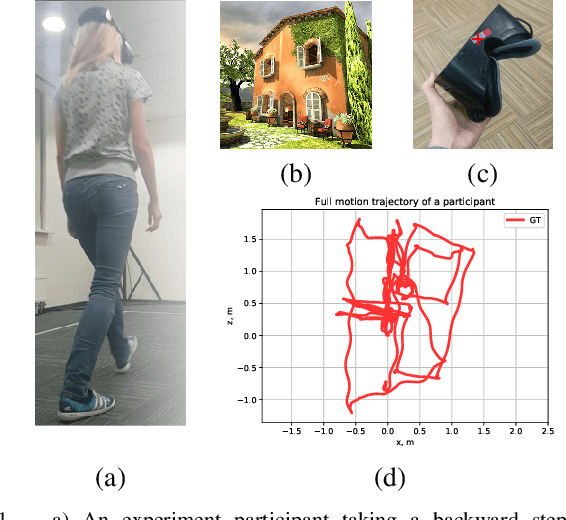

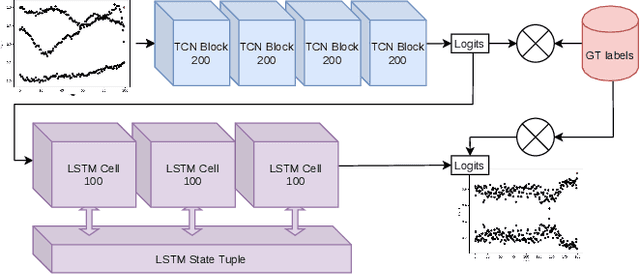

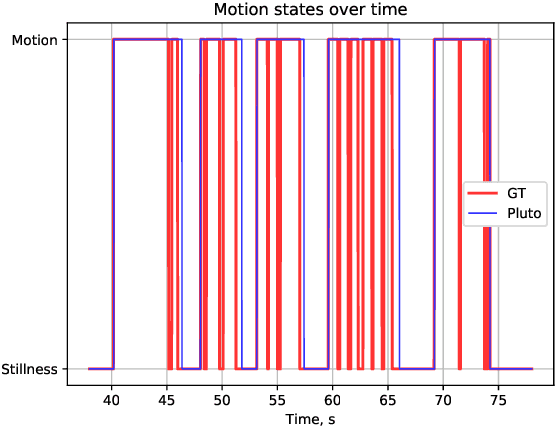

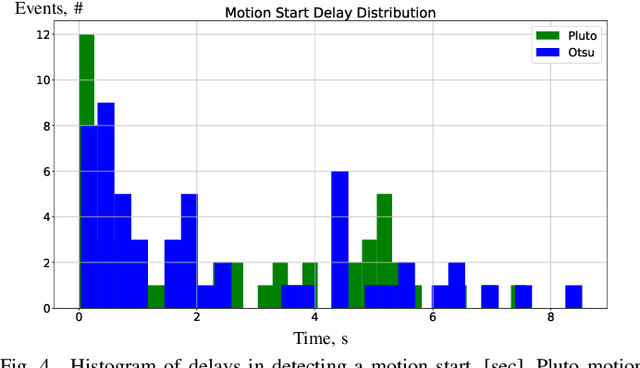

Pluto: Motion Detection for Navigation in a VR Headset

Aug 11, 2021

Abstract: Untethered, inside-out tracking is considered a new goalpost for virtual reality, one that became attainable with the advent of machine learning in SLAM. Yet computer vision-based navigation is always at risk of tracking failure due to poor illumination or low saliency of the environment. An extension for a navigation system is proposed that recognizes the agent's motion and stillness states with 87% accuracy from accelerometer data. A 40% reduction in navigation drift is demonstrated in a repeated tracking failure scenario on a challenging dataset.

Sensor Aware Lidar Odometry

Aug 29, 2019

Abstract: A lidar odometry method that integrates knowledge of the sensor's physics into the computation is proposed. A model of measurement error enables higher precision in estimating the point normal covariance. Adjacent laser beams are used in an outlier correspondence rejection scheme. The method is ranked on the KITTI leaderboard with a 1.37% positioning error. In comparison with the LOAM method on the internal dataset, 3.67% is achieved.
