Pluto: Motion Detection for Navigation in a VR Headset

Paper and Code

Aug 11, 2021

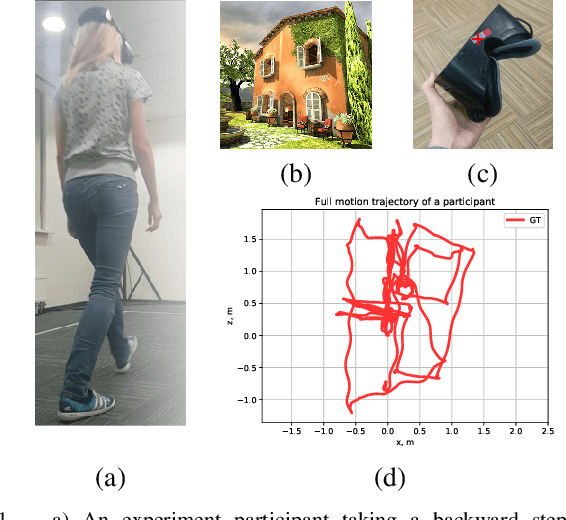

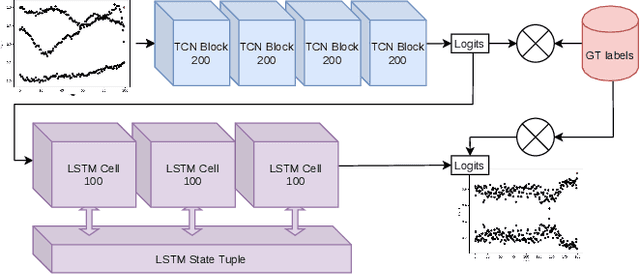

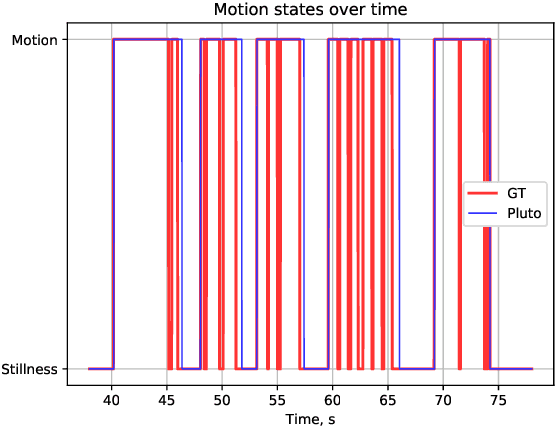

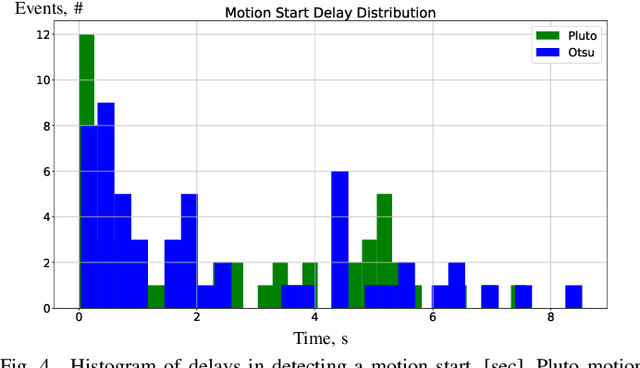

Untethered, inside-out tracking is considered a new goalpost for virtual reality, one that became attainable with the advent of machine learning in SLAM. Yet computer vision-based navigation is always at risk of tracking failure due to poor illumination or poor saliency of the environment. An extension for a navigation system is proposed that recognizes the agent's motion and stillness states with 87% accuracy from accelerometer data. A 40% reduction in navigation drift is demonstrated in a repeated tracking failure scenario on a challenging dataset.
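The abstract does not say how motion and stillness are recognized, so the following is only a minimal sketch of the general idea, not the paper's method: it swaps in a plain threshold on the standard deviation of the accelerometer magnitude over fixed windows. The function name `classify_windows`, the window length, and the threshold value are all hypothetical choices made for illustration.

```python
import math
from statistics import pstdev


def classify_windows(samples, window=50, threshold=0.15):
    """Label each non-overlapping window of (x, y, z) readings.

    Assumption: a window counts as 'moving' when the standard deviation
    of the acceleration magnitude (m/s^2) exceeds `threshold`. Both
    `window` and `threshold` are illustrative values, not taken from
    the paper.
    """
    # The magnitude is independent of how the headset is oriented.
    magnitudes = [math.sqrt(x * x + y * y + z * z) for x, y, z in samples]

    labels = []
    # Step through complete windows only; a trailing partial window is dropped.
    for start in range(0, len(magnitudes) - window + 1, window):
        chunk = magnitudes[start:start + window]
        labels.append("moving" if pstdev(chunk) > threshold else "still")
    return labels


# Synthetic data: a headset at rest reads only gravity, while a moving one
# shows fluctuations around it.
still = [(0.0, 0.0, 9.81)] * 50
moving = [(0.0, 0.0, 9.81 + (1.0 if i % 2 == 0 else -1.0)) for i in range(50)]
labels = classify_windows(still + moving)
print(labels)
```

In a navigation system, a stillness label of this kind could be used to hold the pose estimate fixed while visual tracking is lost, which is one plausible way drift might be limited. How the proposed extension actually uses the labels is not described in this listing.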

* to appear in 2021 International Conference on Indoor Positioning and Indoor Navigation (IPIN), 29 Nov. - 2 Dec. 2021, Lloret de Mar, Spain
