Dimitrios Boursinos

Reliable Probability Intervals For Classification Using Inductive Venn Predictors Based on Distance Learning

Oct 07, 2021

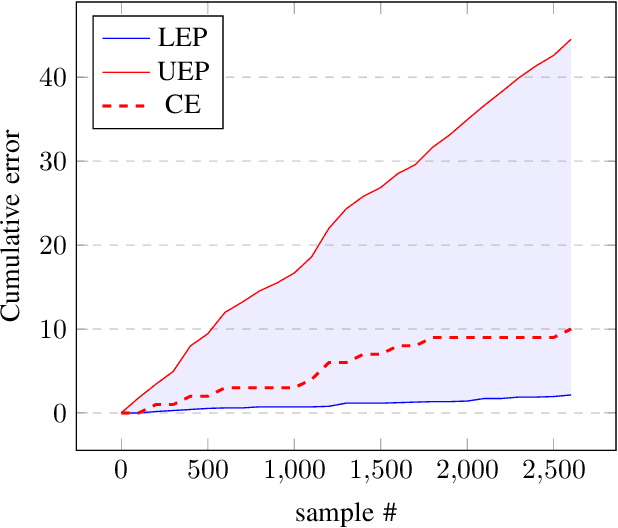

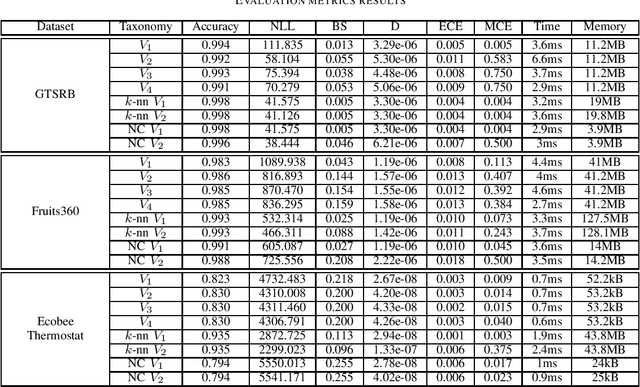

Abstract: Deep neural networks are frequently used by autonomous systems for their ability to learn complex, non-linear data patterns and make accurate predictions in dynamic environments. However, their use as black boxes introduces risks because the confidence in each prediction is unknown. Different frameworks have been proposed to compute accurate confidence measures along with the predictions, but they also introduce limitations such as execution-time overhead or an inability to handle high-dimensional data. In this paper, we use the Inductive Venn Predictors framework to compute probability intervals regarding the correctness of each prediction in real-time. We propose taxonomies based on distance metric learning to compute informative probability intervals in applications involving high-dimensional inputs. Empirical evaluation on image classification and botnet attack detection in Internet-of-Things (IoT) applications demonstrates improved accuracy and calibration. The proposed method is computationally efficient and can therefore be used in real-time.

* Published in IEEE International Conference on Omni-Layer Intelligent Systems (COINS), 2021
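
To make the idea of a Venn-predictor probability interval concrete, here is a minimal sketch of the general technique, not the authors' implementation. It assumes a hypothetical nearest-centroid taxonomy over learned embeddings; the function names, the centroid-based categories, and the toy data are all illustrative assumptions. The test example is placed in a category, and the label frequencies of the calibration examples in that category, computed once for each possible label of the test example, give a lower and an upper probability for the predicted class.

```python
from collections import Counter


def nearest_centroid_category(embedding, centroids):
    """Hypothetical taxonomy: index of the closest centroid (squared Euclidean)."""
    def sq_dist(a, b):
        return sum((ai - bi) ** 2 for ai, bi in zip(a, b))
    return min(range(len(centroids)), key=lambda k: sq_dist(embedding, centroids[k]))


def venn_interval(test_embedding, calib_embeddings, calib_labels, centroids, labels):
    """Return (predicted label, lower probability, upper probability)."""
    category = nearest_centroid_category(test_embedding, centroids)
    # Label counts of the calibration examples that share the test category.
    counts = Counter(
        y for e, y in zip(calib_embeddings, calib_labels)
        if nearest_centroid_category(e, centroids) == category
    )
    n = sum(counts.values())
    # Adding the test example with an assumed label changes one count by 1,
    # so each label's probability ranges between these two values.
    lower = {j: counts[j] / (n + 1) for j in labels}
    upper = {j: (counts[j] + 1) / (n + 1) for j in labels}
    best = max(labels, key=lambda j: lower[j])
    return best, lower[best], upper[best]


# Toy data (assumed): two centroids and five calibration embeddings.
centroids = [(0, 0), (10, 10)]
calib_embeddings = [(0, 1), (1, 0), (1, 1), (9, 9), (10, 9)]
calib_labels = [0, 0, 1, 1, 1]
print(venn_interval((0.5, 0.5), calib_embeddings, calib_labels, centroids, [0, 1]))
```

The width of the interval shrinks as more calibration examples fall into the same category, which is why the choice of taxonomy matters for how informative the intervals are.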

Improving Prediction Confidence in Learning-Enabled Autonomous Systems

Oct 07, 2021

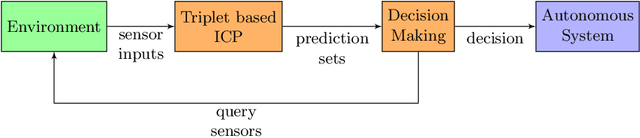

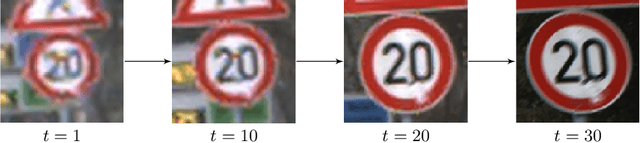

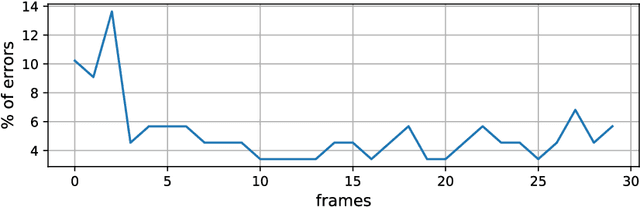

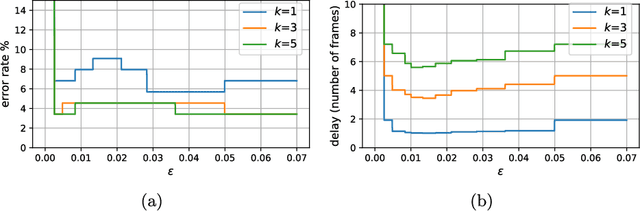

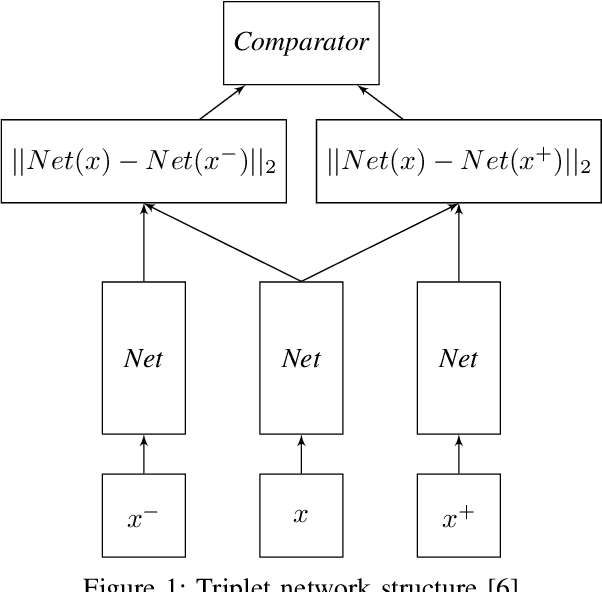

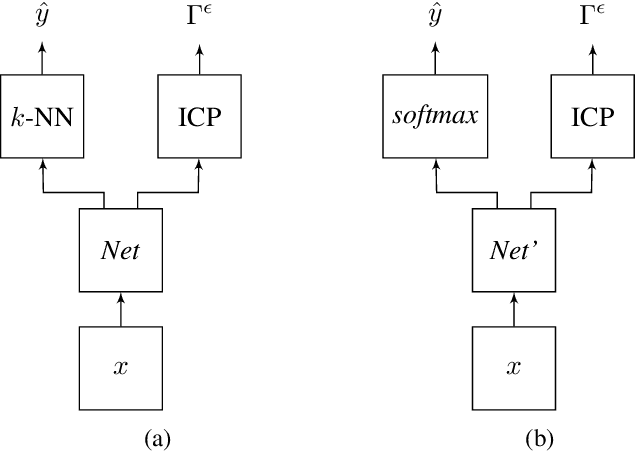

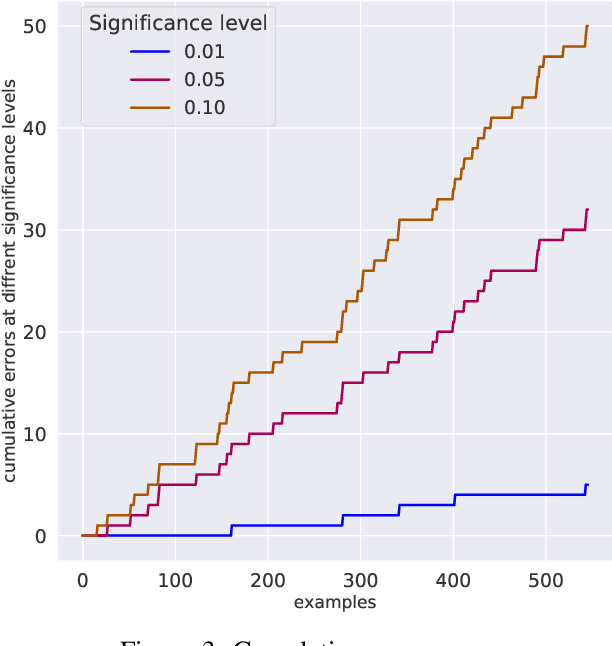

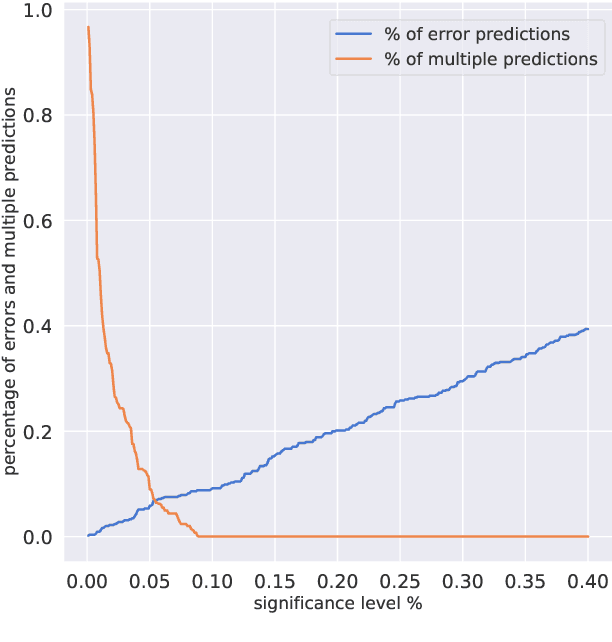

Abstract: Autonomous systems make extensive use of learning-enabled components such as deep neural networks (DNNs) for prediction and decision making. In this paper, we utilize a feedback loop between learning-enabled components used for classification and the sensors of an autonomous system in order to improve the confidence of the predictions. We design a classifier using Inductive Conformal Prediction (ICP) based on a triplet network architecture in order to learn representations that can be used to quantify the similarity between test and training examples. The method allows computing confident set predictions with an error rate bounded by a selected significance level. A feedback loop that queries the sensors for a new input is used to further refine the predictions and increase the classification accuracy. The method is computationally efficient, scalable to high-dimensional inputs, and can be executed in a feedback loop with the system in real-time. The approach is evaluated using a traffic sign recognition dataset and the results show that the error rate is reduced.

* Published in Dynamic Data Driven Applications Systems. DDDAS 2020
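
The set predictions with a predefined error rate described above follow the standard inductive conformal prediction recipe, which can be sketched as follows. This is a minimal illustration under stated assumptions, not the authors' code: the nonconformity scores are taken as given (in the paper they would come from distances in the learned embedding space), and the function names, labels, and numbers are hypothetical.

```python
def p_value(test_score, calib_scores):
    """Fraction of scores (calibration plus the test one) at least as nonconforming."""
    at_least = sum(1 for a in calib_scores if a >= test_score)
    return (at_least + 1) / (len(calib_scores) + 1)


def prediction_set(test_scores_by_label, calib_scores, epsilon):
    """Keep every candidate label whose p-value exceeds the significance level."""
    return {
        label
        for label, score in test_scores_by_label.items()
        if p_value(score, calib_scores) > epsilon
    }


# Assumed nonconformity scores of nine calibration examples.
calib_scores = [0.1, 0.2, 0.3, 0.4, 0.5, 0.6, 0.7, 0.8, 0.9]
# Assumed scores of one test input under each candidate label.
test_scores = {"stop": 0.25, "yield": 0.95}

print(prediction_set(test_scores, calib_scores, epsilon=0.1))
```

A prediction set with more than one label, or with none, signals low confidence; in a feedback-loop setting this is the point at which a new sensor input could be requested.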

Assurance Monitoring of Learning Enabled Cyber-Physical Systems Using Inductive Conformal Prediction based on Distance Learning

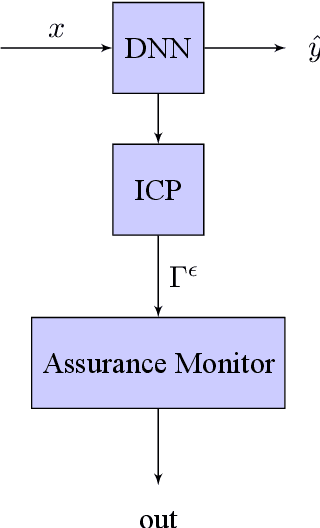

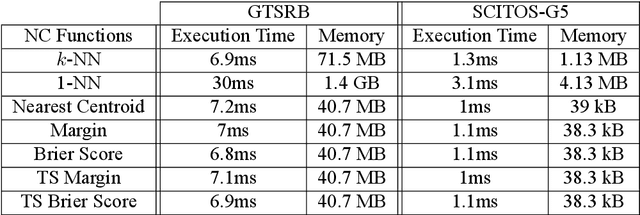

Oct 07, 2021

Abstract: Machine learning components such as deep neural networks are used extensively in Cyber-Physical Systems (CPS). However, such components may introduce new types of hazards that can have disastrous consequences and need to be addressed for engineering trustworthy systems. Although deep neural networks offer advanced capabilities, they must be complemented by engineering methods and practices that allow effective integration in CPS. In this paper, we propose an approach for assurance monitoring of learning-enabled CPS based on the conformal prediction framework. In order to allow real-time assurance monitoring, the approach employs distance learning to transform high-dimensional inputs into lower-dimensional embedding representations. By leveraging conformal prediction, the approach provides well-calibrated confidence and ensures a bounded small error rate while limiting the number of inputs for which an accurate prediction cannot be made. We demonstrate the approach using three datasets: a mobile robot following a wall, speaker recognition, and traffic sign recognition. The experimental results demonstrate that the error rates are well-calibrated while the number of alarms is very small. Further, the method is computationally efficient and allows real-time assurance monitoring of CPS.

* Published in Artificial Intelligence for Engineering Design, Analysis and Manufacturing. arXiv admin note: text overlap with arXiv:2001.05014

Trusted Confidence Bounds for Learning Enabled Cyber-Physical Systems

Mar 11, 2020

Abstract: Cyber-physical systems (CPS) can benefit from the use of learning-enabled components (LECs) such as deep neural networks (DNNs) for perception and decision making tasks. However, DNNs are typically non-transparent, which makes reasoning about their predictions very difficult, and hence their application to safety-critical systems is very challenging. LECs could be integrated more easily into CPS if their predictions could be complemented with a confidence measure that quantifies how much we trust their output. The paper presents an approach for computing confidence bounds based on Inductive Conformal Prediction (ICP). We train a Triplet Network architecture to learn representations of the input data that can be used to estimate the similarity between test examples and examples in the training data set. These representations are then used with ICP to estimate the confidence of a set predictor built on the DNN architecture used in the triplet network. The approach is evaluated using a robotic navigation benchmark and the results show that we can compute trusted confidence bounds efficiently in real-time.

Assurance Monitoring of Cyber-Physical Systems with Machine Learning Components

Jan 14, 2020

Abstract: Machine learning components such as deep neural networks are used extensively in Cyber-Physical Systems (CPS). However, they may introduce new types of hazards that can have disastrous consequences and need to be addressed for engineering trustworthy systems. Although deep neural networks offer advanced capabilities, they must be complemented by engineering methods and practices that allow effective integration in CPS. In this paper, we investigate how to use the conformal prediction framework for assurance monitoring of CPS with machine learning components. In order to handle high-dimensional inputs in real-time, we compute nonconformity scores using embedding representations of the learned models. By leveraging conformal prediction, the approach provides well-calibrated confidence and allows monitoring that ensures a bounded small error rate while limiting the number of inputs for which an accurate prediction cannot be made. Empirical evaluation results using the German Traffic Sign Recognition Benchmark and a robot navigation dataset demonstrate that the error rates are well-calibrated while the number of alarms is small. The method is computationally efficient, and the approach is therefore promising for assurance monitoring of CPS.
