Diego Sáez

Mobile Recognition of Wikipedia Featured Sites using Deep Learning and Crowd-sourced Imagery

Nov 04, 2019

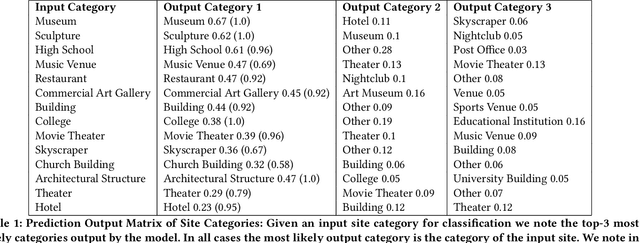

Abstract: Rendering Wikipedia content through mobile and augmented reality mediums can enable new forms of interaction in urban-focused user communities, facilitating learning, communication and knowledge exchange. With this objective in mind, we develop a mobile application that recognizes notable sites featured on Wikipedia. The application is powered by a deep neural network trained on crowd-sourced imagery of sites of interest, such as buildings, statues, museums or other physical entities that are present and visually accessible in an urban environment. We describe an end-to-end pipeline that covers data collection, model training and evaluation of our application in both online and real-world scenarios. We identify a number of challenges in the site recognition task, which arise from visual similarities among the classified sites as well as from noise introduced by the surrounding built environment. We demonstrate how mobile contextual information, such as user location, orientation and attention patterns, can significantly alleviate these challenges. Moreover, we present an unsupervised learning technique to de-noise crowd-sourced imagery, which further improves classification performance.
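As an illustration of how user location might help disambiguate visually similar sites, the following is a minimal sketch and not the method described in the paper. It assumes the classifier returns a score per site and that each site has known coordinates; the function names, the `site_coords` mapping and the 500 m radius are all hypothetical choices made for this example.

```python
import math


def haversine_m(lat1, lon1, lat2, lon2):
    """Great-circle distance in metres between two (lat, lon) points."""
    earth_radius_m = 6371000.0
    phi1, phi2 = math.radians(lat1), math.radians(lat2)
    dphi = math.radians(lat2 - lat1)
    dlmb = math.radians(lon2 - lon1)
    a = (math.sin(dphi / 2) ** 2
         + math.cos(phi1) * math.cos(phi2) * math.sin(dlmb / 2) ** 2)
    return 2 * earth_radius_m * math.asin(math.sqrt(a))


def rerank_by_location(scores, site_coords, user_lat, user_lon, radius_m=500.0):
    """Keep only sites within radius_m of the user and renormalise scores.

    scores:      dict mapping site name -> classifier score (hypothetical input)
    site_coords: dict mapping site name -> (lat, lon) (hypothetical input)
    radius_m:    assumed cut-off distance, chosen arbitrarily for this sketch
    """
    nearby = {
        site: score
        for site, score in scores.items()
        if haversine_m(user_lat, user_lon, *site_coords[site]) <= radius_m
    }
    total = sum(nearby.values())
    if total == 0:
        return {}  # no plausible site near the user
    return {site: score / total for site, score in nearby.items()}
```

In this sketch, a visually plausible but geographically distant site is simply dropped before the final decision. A softer alternative would weight each score by distance instead of applying a hard cut-off.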
