Diego Gonzalez-Morin

Real Time Egocentric Segmentation for Video-self Avatar in Mixed Reality

Jul 04, 2022

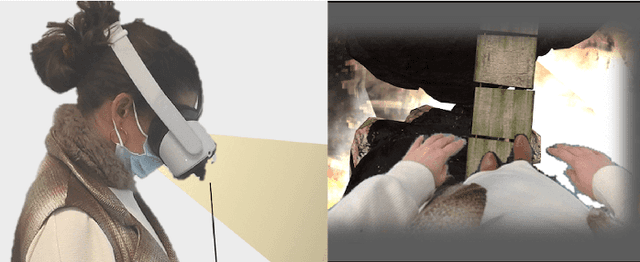

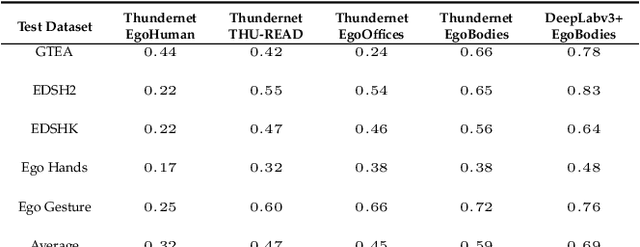

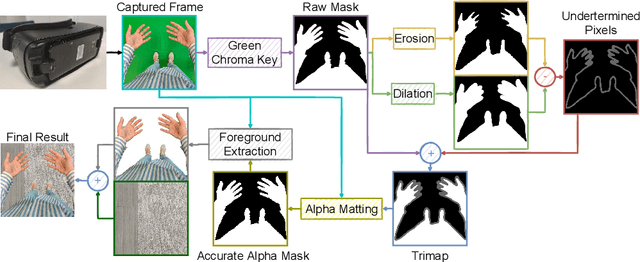

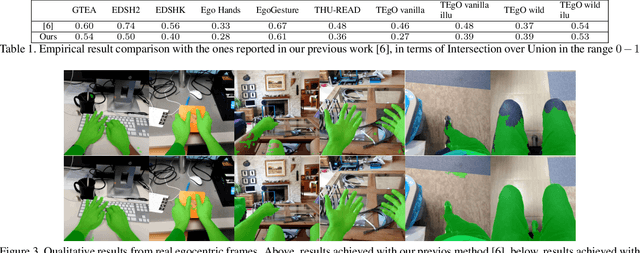

Abstract: In this work we present our real-time egocentric body segmentation algorithm. Our algorithm achieves a frame rate of 66 fps for an input resolution of 640x480, thanks to a shallow network inspired by the ThunderNet architecture. We also put strong emphasis on the variability of the training data. More concretely, we describe the creation process of our Egocentric Bodies (EgoBodies) dataset, composed of almost 10,000 images from three datasets, created using both synthetic methods and real captures. We conduct experiments to understand the contribution of the individual datasets, compare a ThunderNet model trained on EgoBodies against simpler and more complex previous approaches, and discuss their performance in a real-life setup in terms of segmentation quality and inference time. The trained semantic segmentation algorithm is already integrated in an end-to-end system for Mixed Reality (MR), making it possible for users to see their own bodies while immersed in an MR scene.

Egocentric Human Segmentation for Mixed Reality

Jun 08, 2020

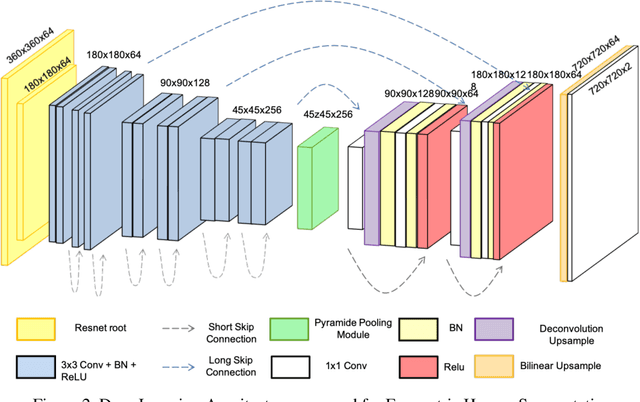

Abstract: The objective of this work is to segment human body parts from egocentric video using semantic segmentation networks. Our contribution is two-fold: i) we create a semi-synthetic dataset composed of more than 15,000 realistic images and associated pixel-wise labels of egocentric human body parts, such as arms or legs, covering different demographic factors; ii) building upon the ThunderNet architecture, we implement a deep learning semantic segmentation algorithm that is able to perform beyond real-time requirements (16 ms for 720 x 720 images). It is believed that this method will enhance the sense of presence in Virtual Environments and will constitute a more realistic alternative to standard virtual avatars.
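The two abstracts report speed in different units (66 fps at 640x480 in the 2022 work, 16 ms per frame at 720 x 720 in the 2020 work). As a reading aid, the short sketch below converts between the two. The helper names `fps_to_ms` and `ms_to_fps` are illustrative and not taken from either paper, and the figures may have been measured on different hardware, so the numbers are not a like-for-like comparison.

```python
def fps_to_ms(fps: float) -> float:
    """Per-frame time in milliseconds for a given frame rate."""
    return 1000.0 / fps


def ms_to_fps(ms: float) -> float:
    """Frame rate implied by a given per-frame time in milliseconds."""
    return 1000.0 / ms


# Figures quoted in the two abstracts above.
print(f"66 fps at 640x480 -> {fps_to_ms(66):.1f} ms per frame")
print(f"16 ms at 720x720  -> {ms_to_fps(16):.1f} fps")
```

Under this conversion, 66 fps corresponds to roughly 15.2 ms per frame, and 16 ms per frame corresponds to 62.5 fps.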
