Diego Bustamente

A Symbolic Language for Interpreting Decision Trees

Oct 18, 2023

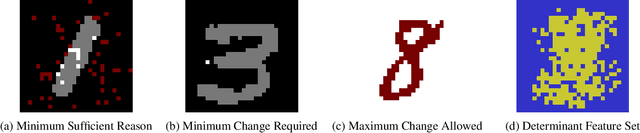

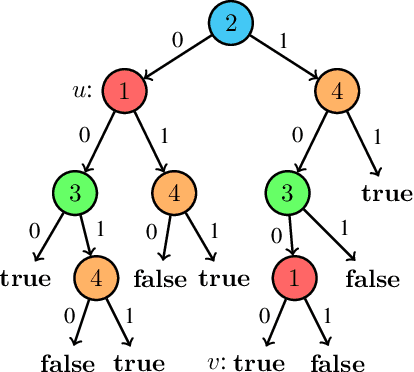

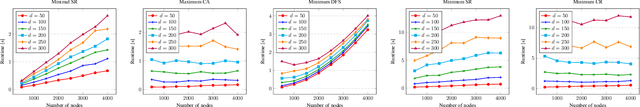

Abstract: The recent development of formal explainable AI has disputed the folklore claim that "decision trees are readily interpretable models", showing different interpretability queries that are computationally hard on decision trees, as well as proposing different methods to deal with them in practice. Nonetheless, no single explainability query or score works as a "silver bullet" that is appropriate for every context and end-user. This naturally suggests the possibility of "interpretability languages" in which a wide variety of queries can be expressed, giving control to the end-user to tailor queries to their particular needs. In this context, our work presents ExplainDT, a symbolic language for interpreting decision trees. ExplainDT is rooted in a carefully constructed fragment of first-order logic that we call StratiFOILed. StratiFOILed balances expressiveness and complexity of evaluation, allowing for the computation of many post-hoc explanations, both local (e.g., abductive and contrastive explanations) and global (e.g., feature relevancy), while remaining in the Boolean Hierarchy over NP. Furthermore, StratiFOILed queries can be written as a Boolean combination of NP-problems, thus allowing us to evaluate them in practice with a constant number of calls to a SAT solver. On the theoretical side, our main contribution is an in-depth analysis of the expressiveness and complexity of StratiFOILed, while on the practical side, we provide an optimized implementation for encoding StratiFOILed queries as propositional formulas, together with an experimental study on its efficiency.
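To make the notion of an abductive explanation (a "sufficient reason") concrete, the following is a minimal Python sketch. It is not ExplainDT syntax and not the authors' SAT-based implementation. The tree encoding, the function names `reachable_labels` and `is_sufficient_reason`, and the toy tree are all assumptions made for illustration. The sketch further assumes Boolean features and that each feature is tested at most once on any root-to-leaf path. A partial instance counts as a sufficient reason for an instance if it agrees with the instance on its defined features and every completion of it receives the same class.

```python
# Hypothetical encoding (an assumption, not from the paper): a tree is either
# a bool leaf (the class label) or a tuple (feature, low_subtree, high_subtree),
# where low_subtree is followed when the feature is False.

def reachable_labels(tree, partial):
    """Return the set of leaf labels reachable by some completion of `partial`.

    `partial` maps feature indices to bools; missing features are undefined.
    Assumes each feature is tested at most once per root-to-leaf path.
    """
    if isinstance(tree, bool):
        return {tree}
    feature, low, high = tree
    value = partial.get(feature)  # None means the feature is undefined
    labels = set()
    if value is None or value is False:
        labels |= reachable_labels(low, partial)
    if value is None or value is True:
        labels |= reachable_labels(high, partial)
    return labels


def is_sufficient_reason(tree, partial, instance):
    """Check whether `partial` is a sufficient reason for the full `instance`."""
    # The partial instance must agree with the instance where it is defined.
    if any(instance[f] != v for f, v in partial.items()):
        return False
    # Every completion of `partial` must get the instance's own class.
    return reachable_labels(tree, partial) == reachable_labels(tree, instance)


# Toy tree: class is True if x0 is True; otherwise the class equals x1.
toy_tree = (0, (1, False, True), True)
toy_instance = {0: True, 1: False}

print(is_sufficient_reason(toy_tree, {0: True}, toy_instance))   # x0 alone fixes the class
print(is_sufficient_reason(toy_tree, {1: False}, toy_instance))  # x1 alone does not
```

On the toy tree, fixing `x0 = True` alone determines the class, so `{0: True}` is a sufficient reason, whereas `{1: False}` is not, because setting `x0 = False` would flip the prediction. This single check runs in time linear in the tree size. The harder queries the abstract refers to are a different matter and are not covered by this sketch.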
