Diane Gromala

Invisible Users: Uncovering End-Users' Requirements for Explainable AI via Explanation Forms and Goals

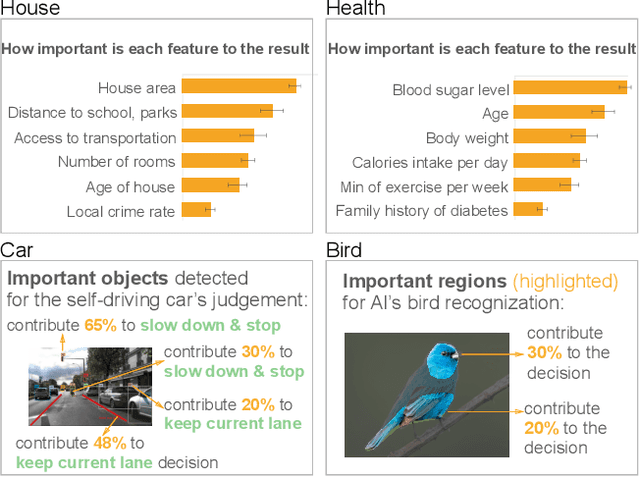

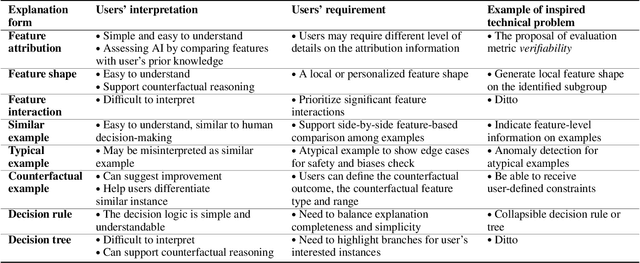

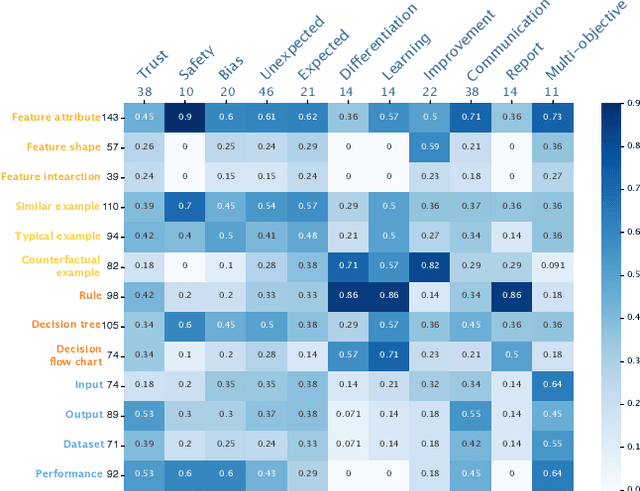

Feb 15, 2023

Abstract: Non-technical end-users are silent and invisible users of the state-of-the-art explainable artificial intelligence (XAI) technologies. Their demands and requirements for AI explainability are not incorporated into the design and evaluation of XAI techniques, which are developed to explain the rationales of AI decisions to end-users and assist their critical decisions. This makes XAI techniques ineffective or even harmful in high-stakes applications, such as healthcare, criminal justice, finance, and autonomous driving systems. To systematically understand end-users' requirements to support the technical development of XAI, we conducted the EUCA user study with 32 layperson participants in four AI-assisted critical tasks. The study identified comprehensive user requirements for feature-, example-, and rule-based XAI techniques (manifested by the end-user-friendly explanation forms) and XAI evaluation objectives (manifested by the explanation goals), which were shown to be helpful to directly inspire the proposal of new XAI algorithms and evaluation metrics. The EUCA study findings, the identified explanation forms and goals for technical specification, and the EUCA study dataset support the design and evaluation of end-user-centered XAI techniques for accessible, safe, and accountable AI.

Transcending XAI Algorithm Boundaries through End-User-Inspired Design

Aug 18, 2022

Abstract: The boundaries of existing explainable artificial intelligence (XAI) algorithms are confined to problems grounded in technical users' demand for explainability. This research paradigm disproportionately ignores the larger group of non-technical end users of XAI, who do not have technical knowledge but need explanations in their AI-assisted critical decisions. Lacking explainability-focused functional support for end users may hinder the safe and responsible use of AI in high-stakes domains, such as healthcare, criminal justice, finance, and autonomous driving systems. In this work, we explore how designing XAI tailored to end users' critical tasks inspires the framing of new technical problems. To elicit users' interpretations and requirements for XAI algorithms, we first identify eight explanation forms as the communication tool between AI researchers and end users, such as explaining using features, examples, or rules. Using the explanation forms, we then conduct a user study with 32 layperson participants in the context of achieving different explanation goals (such as verifying AI decisions and improving users' predicted outcomes) in four critical tasks. Based on the user study findings, we identify and formulate novel XAI technical problems, and propose an evaluation metric, verifiability, based on users' explanation goal of verifying AI decisions. Our work shows that grounding the technical problem in end users' use of XAI can inspire new research questions. Such end-user-inspired research questions have the potential to promote social good by democratizing AI and ensuring the responsible use of AI in critical domains.
