Dennis Kaburu

Exploiting Phonological Similarities between African Languages to achieve Speech to Speech Translation

Oct 30, 2024

Abstract: This paper presents a pilot study on direct speech-to-speech translation (S2ST) that leverages linguistic similarities among selected African languages within the same phylum, particularly in cases where traditional data annotation is expensive or impractical. We propose a segment-based model that maps speech segments both within and across language phyla, effectively eliminating the need for large paired datasets. Using paired segments and guided diffusion, our model enables translation between any two languages in the dataset. We evaluate the model on a proprietary dataset from the Kenya Broadcasting Corporation (KBC), which includes five languages: Swahili, Luo, Kikuyu, Nandi, and English. The model demonstrates competitive performance in segment pairing and translation quality, particularly for languages within the same phylum. Our experiments reveal that segment length significantly influences translation accuracy, with average-length segments yielding the highest pairing quality. Comparative analyses with traditional cascaded ASR-MT techniques show that the proposed model delivers nearly comparable translation performance. This study underscores the potential of exploiting linguistic similarities within language groups to perform efficient S2ST, especially in low-resource language contexts.

Contrastive Environmental Sound Representation Learning

Jul 18, 2022

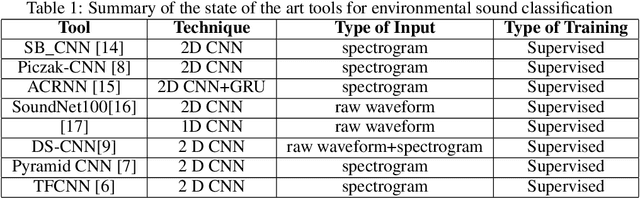

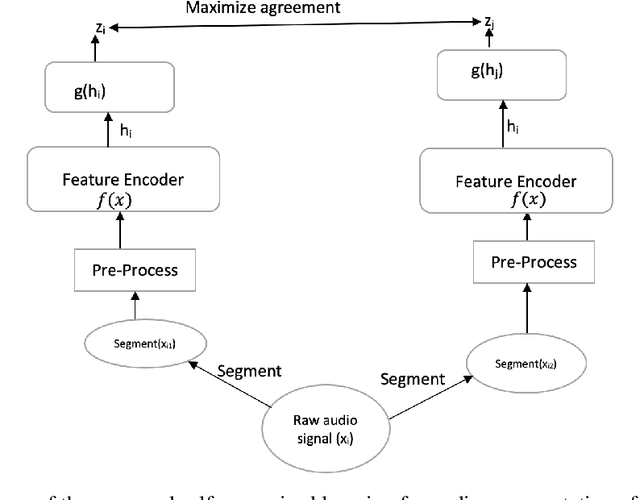

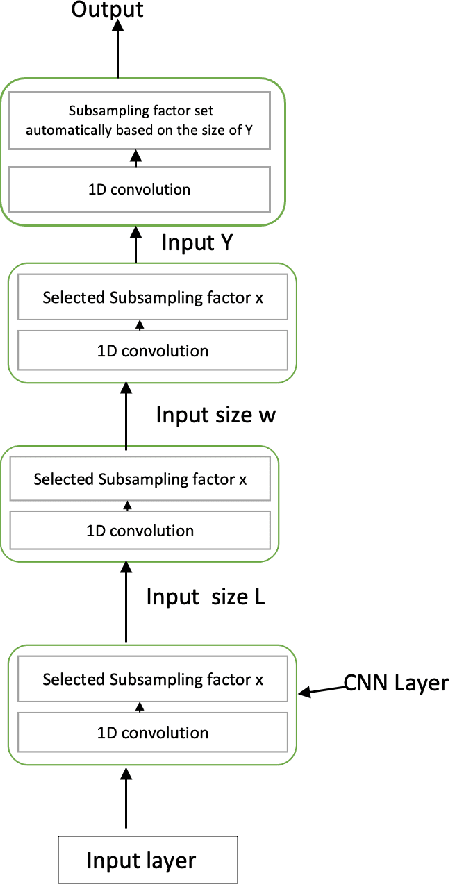

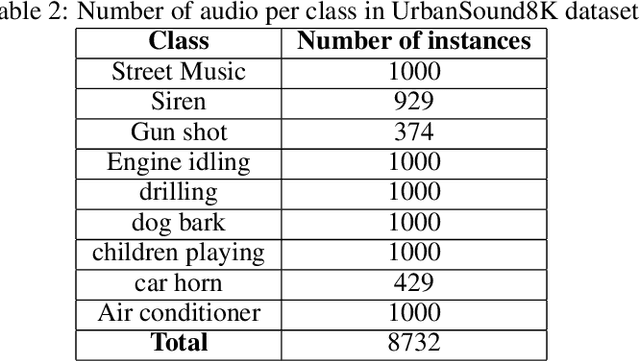

Abstract: Machine hearing of environmental sound is an important problem in the audio recognition domain. It gives a machine the ability to discriminate between different input sounds, which guides its decision making. In this work, we exploit a self-supervised contrastive technique and a shallow 1D CNN to extract distinctive audio features (audio representations) without using any explicit annotations. We generate representations of a given audio clip from both its raw waveform and its spectrogram, and evaluate whether the proposed learner is agnostic to the type of audio input. We further use canonical correlation analysis (CCA) to fuse the representations from the two input types and demonstrate that the fused global feature yields a more robust representation of the audio signal than either individual representation. The proposed technique is evaluated on both ESC-50 and UrbanSound8K. The results show that it is able to extract most features of environmental audio and gives an improvement of 12.8% and 0.9% on the ESC-50 and UrbanSound8K datasets, respectively.
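The abstract does not state which contrastive objective is used. As a rough illustration of how a self-supervised contrastive loss works without labels, the sketch below assumes an NT-Xent loss (the one popularized by SimCLR) over paired embeddings, for example two views of the same clip. The function name `nt_xent_loss`, the temperature value, and the embedding shapes are illustrative assumptions, not details from the paper.

```python
import numpy as np

def nt_xent_loss(z_a, z_b, temperature=0.5):
    """NT-Xent loss for paired embeddings z_a, z_b of shape (N, D).

    Assumption: row i of z_a and row i of z_b come from the same audio clip
    (a positive pair); every other row in the batch acts as a negative.
    """
    n = z_a.shape[0]
    z = np.concatenate([z_a, z_b], axis=0)
    # L2-normalize so the dot product is cosine similarity.
    z = z / np.linalg.norm(z, axis=1, keepdims=True)
    sim = (z @ z.T) / temperature
    # A sample must not be compared with itself.
    np.fill_diagonal(sim, -np.inf)
    # Row i's positive sits at i + n (first half) or i - n (second half).
    pos = np.concatenate([np.arange(n, 2 * n), np.arange(0, n)])
    # Numerically stable row-wise log-softmax.
    row_max = sim.max(axis=1, keepdims=True)
    shifted = sim - row_max
    log_prob = shifted - np.log(np.exp(shifted).sum(axis=1, keepdims=True))
    return float(-log_prob[np.arange(2 * n), pos].mean())

# Usage: matched pairs should give a lower loss than unrelated pairs.
rng = np.random.default_rng(0)
view_a = rng.normal(size=(8, 16))
view_b = view_a + 0.01 * rng.normal(size=(8, 16))  # near-identical second view
unrelated = rng.normal(size=(8, 16))                # no pairing structure
loss_matched = nt_xent_loss(view_a, view_b)
loss_unrelated = nt_xent_loss(view_a, unrelated)
```

Minimizing this loss pulls the two views of each clip together and pushes other clips apart, which is what lets an encoder learn representations without explicit annotations.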
