Dennis G Wilson

Searching Search Spaces: Meta-evolving a Geometric Encoding for Neural Networks

Mar 20, 2024

Abstract: In evolutionary policy search, neural networks are usually represented using a direct mapping: each gene encodes one network weight. Indirect encoding methods, where each gene can encode for multiple weights, shorten the genome to reduce the dimensions of the search space and better exploit permutations and symmetries. The Geometric Encoding for Neural network Evolution (GENE) introduced an indirect encoding where the weight of a connection is computed as the (pseudo-)distance between the two linked neurons, leading to a genome size growing linearly with the number of genes instead of quadratically in direct encoding. However, GENE still relies on hand-crafted distance functions with no prior optimization. Here we show that better-performing distance functions can be found for GENE using Cartesian Genetic Programming (CGP) in a meta-evolution approach, hence optimizing the encoding to create a search space that is easier to exploit. We show that GENE with a learned function can outperform both direct encoding and the hand-crafted distances, generalizing on unseen problems, and we study how the encoding impacts neural network properties.
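The geometric encoding described in this abstract can be illustrated with a minimal sketch. It is not the authors' implementation: the helper names (`l2_distance`, `decode_layer`, `random_coords`), the coordinate dimension and the layer sizes are all assumptions chosen for illustration, and a plain Euclidean distance stands in for the hand-crafted or learned (pseudo-)distance functions the abstract refers to. Each neuron carries a small coordinate vector, and every connection weight is decoded from the coordinates of the two neurons it links.

```python
import math
import random

def l2_distance(a, b):
    # Plain Euclidean distance; a stand-in (assumption) for the
    # (pseudo-)distance functions mentioned in the abstract.
    return math.sqrt(sum((x - y) ** 2 for x, y in zip(a, b)))

def decode_layer(src_coords, dst_coords, distance=l2_distance):
    # Weight matrix W[i][j] = distance(source neuron i, target neuron j).
    return [[distance(s, t) for t in dst_coords] for s in src_coords]

def random_coords(n, dim, rng):
    # One coordinate vector per neuron; these vectors are the genome.
    return [[rng.uniform(-1.0, 1.0) for _ in range(dim)] for _ in range(n)]

rng = random.Random(0)
dim = 3                    # hypothetical coordinate dimension
layer_sizes = [8, 16, 4]   # hypothetical network shape

coords = [random_coords(n, dim, rng) for n in layer_sizes]
weights = [decode_layer(coords[k], coords[k + 1])
           for k in range(len(coords) - 1)]

# Genome size: one coordinate vector per neuron (indirect encoding)
# versus one gene per connection weight (direct encoding).
indirect_genes = sum(layer_sizes) * dim
direct_genes = sum(a * b for a, b in zip(layer_sizes, layer_sizes[1:]))
print(indirect_genes, direct_genes)
```

A true distance such as the one above can only produce non-negative weights, which is one plausible reason an encoding of this kind would use pseudo-distances instead. In this sketch the indirect genome grows with the number of neurons, while the direct genome grows with the number of connections.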

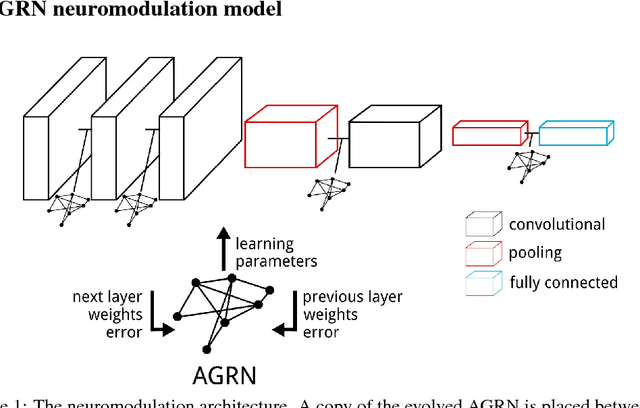

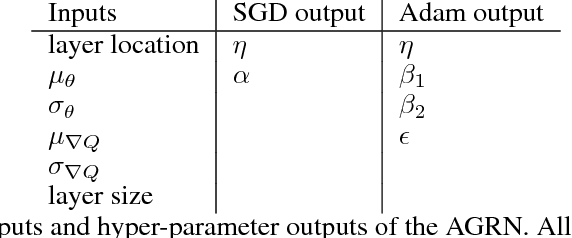

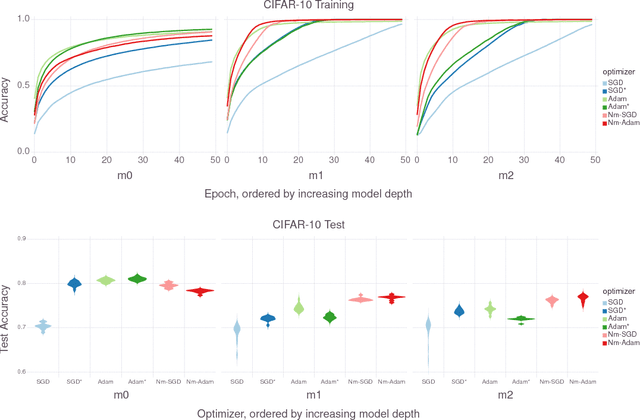

Neuromodulated Learning in Deep Neural Networks

Dec 05, 2018

Abstract: In the brain, learning signals change over time and synaptic location, and are applied based on the learning history at the synapse, in the complex process of neuromodulation. Learning in artificial neural networks, on the other hand, is shaped by hyper-parameters set before learning starts, which remain static throughout learning, and which are uniform for the entire network. In this work, we propose a method of deep artificial neuromodulation which applies the concepts of biological neuromodulation to stochastic gradient descent. Evolved neuromodulatory dynamics modify learning parameters at each layer in a deep neural network over the course of the network's training. We show that the same neuromodulatory dynamics can be applied to different models and can scale to new problems not encountered during evolution. Finally, we examine the evolved neuromodulation, showing that evolution found dynamic, location-specific learning strategies.
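The contrast between static, uniform hyper-parameters and layer-specific, time-varying ones can be sketched as follows. This is only an illustration under stated assumptions: the `modulate` rule is a hand-written placeholder, not the evolved neuromodulatory dynamics from the paper, and the toy quadratic loss and the names `sgd_step` and `loss` are hypothetical.

```python
def modulate(base_lr, layer_idx, step):
    # Hypothetical modulation rule (assumption): the learning rate decays
    # over training, and decays faster in deeper layers.
    return base_lr / (1.0 + 0.01 * (layer_idx + 1) * step)

def sgd_step(layers, grads, base_lr, step):
    # One SGD update in which each layer gets its own learning rate.
    updated = []
    for idx, (w, g) in enumerate(zip(layers, grads)):
        lr = modulate(base_lr, idx, step)
        updated.append([wi - lr * gi for wi, gi in zip(w, g)])
    return updated

def loss(layers):
    # Toy objective: sum of squared parameters.
    return sum(wi * wi for w in layers for wi in w)

layers = [[1.0, -2.0], [0.5]]   # two "layers" of parameters
initial_loss = loss(layers)
for step in range(100):
    grads = [[2.0 * wi for wi in w] for w in layers]  # gradient of the toy loss
    layers = sgd_step(layers, grads, base_lr=0.1, step=step)
final_loss = loss(layers)
print(initial_loss, final_loss)
```

Replacing `modulate` with a constant function recovers ordinary SGD with a single fixed learning rate, which is the static setting the abstract contrasts against.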

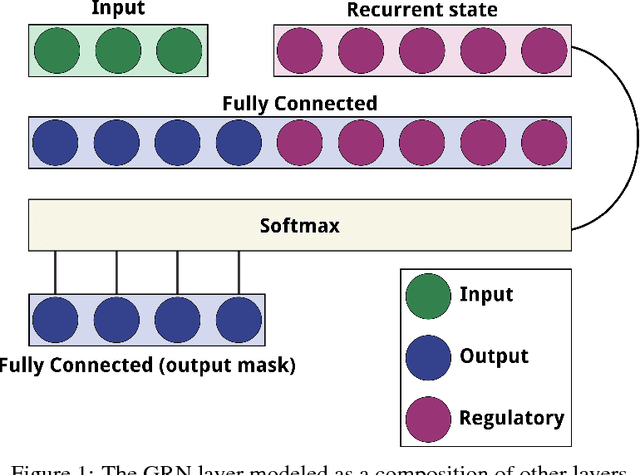

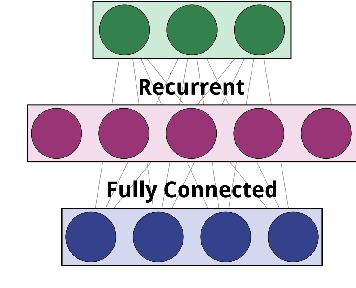

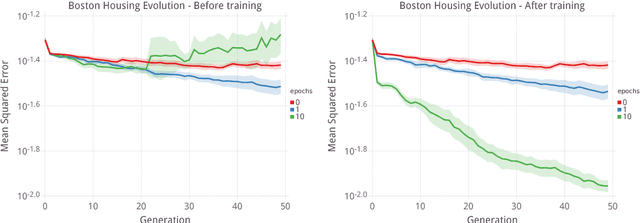

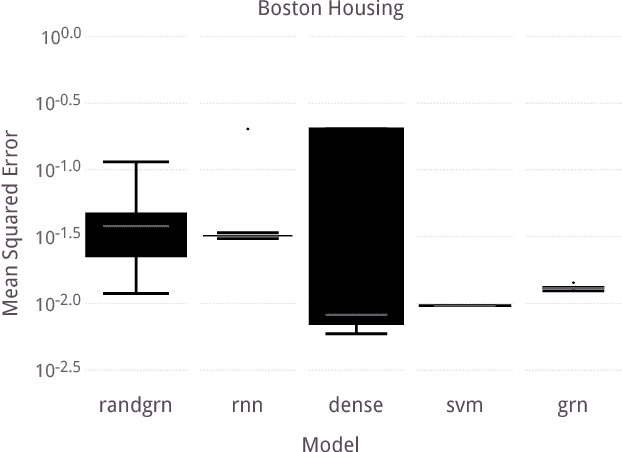

Evolving Differentiable Gene Regulatory Networks

Jul 16, 2018

Abstract: Over the past twenty years, artificial Gene Regulatory Networks (GRNs) have shown their capacity to solve real-world problems in various domains such as agent control, signal processing and artificial life experiments. They have also benefited from new evolutionary approaches and improvements to their dynamics, which have increased their optimization efficiency. In this paper, we present an additional step toward their usability in machine learning applications. We detail a GPU-based implementation of differentiable GRNs, allowing for local optimization of GRN architectures with stochastic gradient descent (SGD). Using a standard machine learning dataset, we evaluate the ways in which evolution and SGD can be combined to further GRN optimization. We compare these approaches with neural network models trained by SGD and with support vector machines.

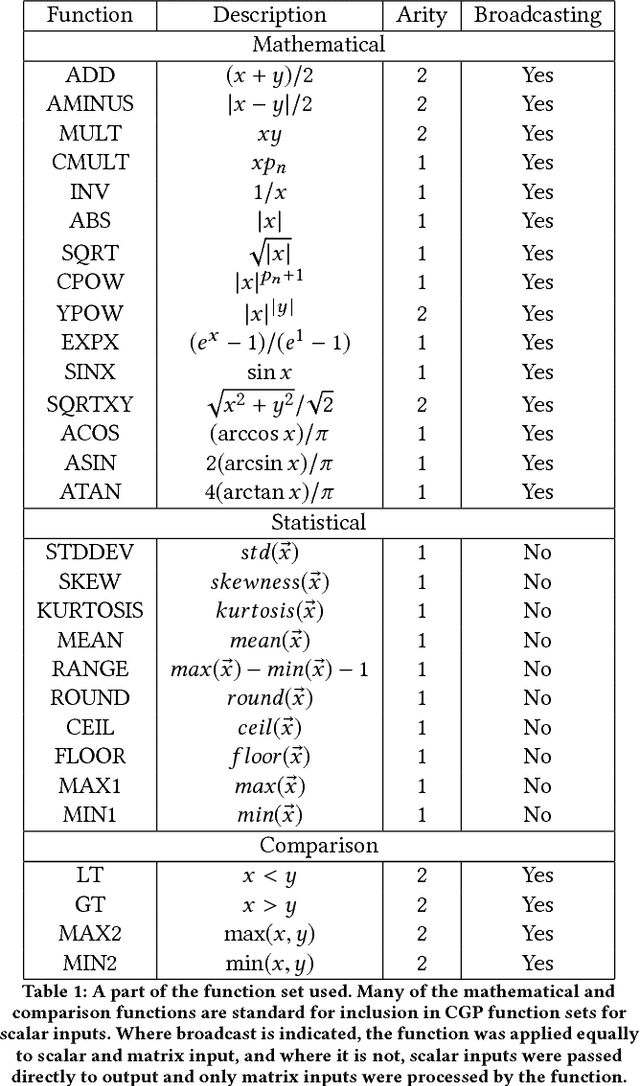

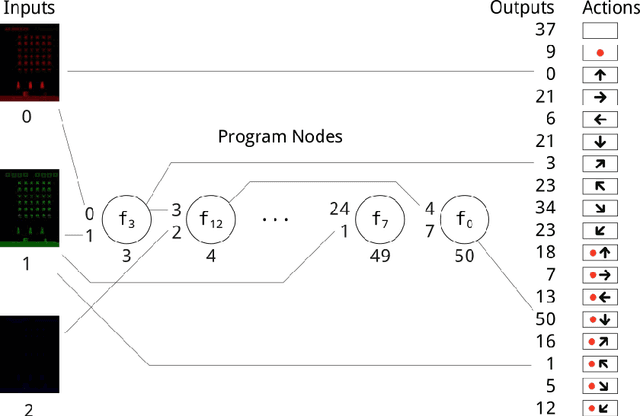

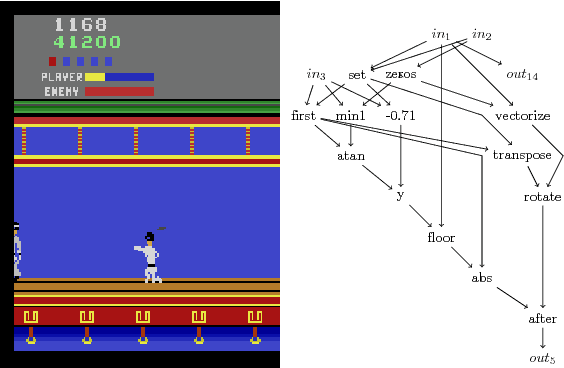

Evolving simple programs for playing Atari games

Jun 14, 2018

Abstract: Cartesian Genetic Programming (CGP) has previously shown capabilities in image processing tasks by evolving programs with a function set specialized for computer vision. A similar approach can be applied to Atari playing. Programs are evolved using mixed-type CGP with a function set suited for matrix operations, including image processing, but allowing for controller behavior to emerge. While the programs are relatively small, many controllers are competitive with state-of-the-art methods for the Atari benchmark set and require less training time. By evaluating the programs of the best evolved individuals, simple but effective strategies can be found.
