Delong Du

What Matters in Explanations: Towards Explainable Fake Review Detection Focusing on Transformers

Jul 24, 2024

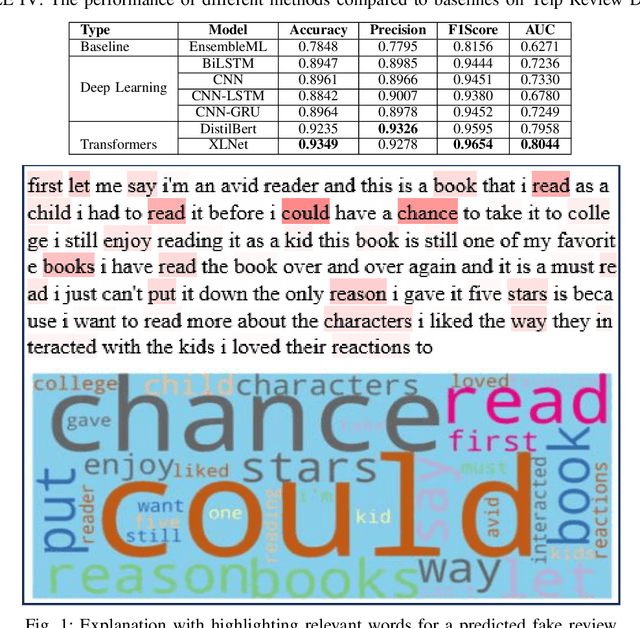

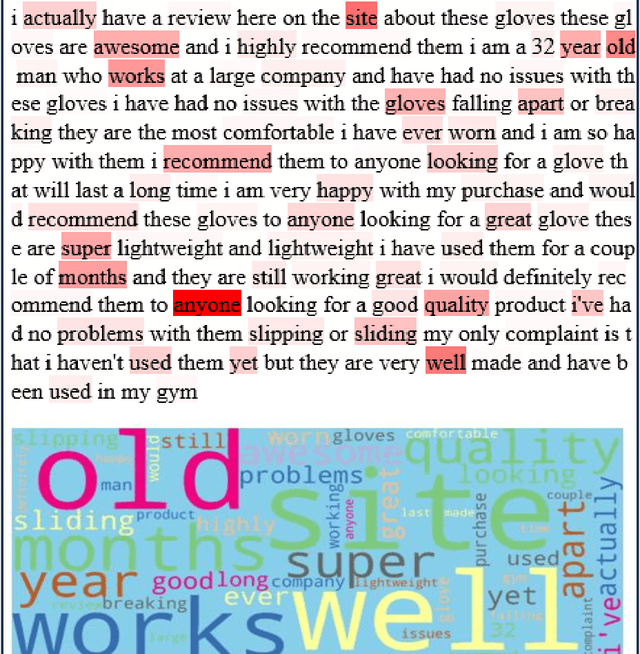

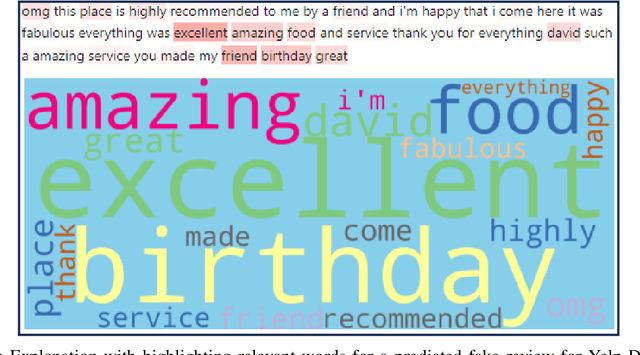

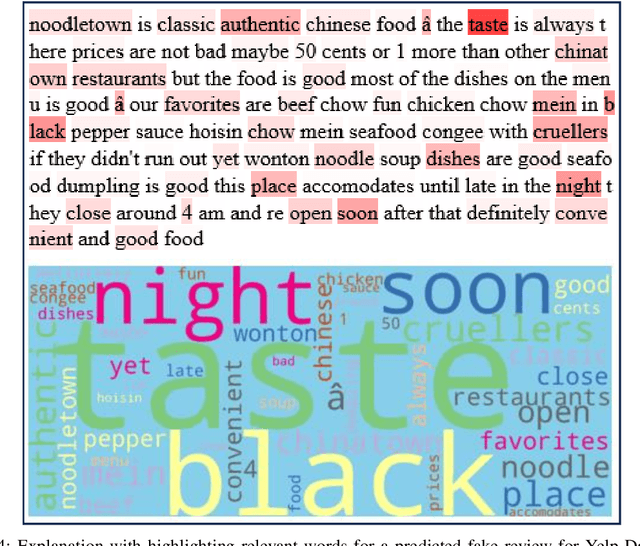

Abstract: Customers' reviews and feedback play a crucial role on electronic commerce (e-commerce) platforms such as Amazon, Zalando, and eBay in influencing other customers' purchasing decisions. However, there is a prevailing concern that sellers often post fake or spam reviews to deceive potential customers and manipulate their opinions about a product. Over the past decade, there has been considerable interest in using machine learning (ML) and deep learning (DL) models to identify such fraudulent reviews. Unfortunately, the decisions made by complex ML and DL models, which often function as black boxes, can be surprising and difficult for general users to comprehend. In this paper, we propose an explainable framework that detects fake reviews with high precision and explains its decisions, and we investigate, through an empirical user evaluation, what information matters most for explaining particular decisions. Initially, we develop fake review detection models using DL and transformer models, including XLNet and DistilBERT. We then introduce the layer-wise relevance propagation (LRP) technique for generating explanations that map the contributions of individual words to the predicted class. The experimental results on two benchmark fake review detection datasets demonstrate that our predictive models achieve state-of-the-art performance and outperform several existing methods. Furthermore, the empirical user evaluation of the generated explanations identifies which information needs to be considered when generating explanations in the context of fake review identification.

Explaining AI Decisions: Towards Achieving Human-Centered Explainability in Smart Home Environments

Apr 23, 2024

Abstract: Smart home systems are gaining popularity as homeowners strive to enhance their living and working environments while minimizing energy consumption. However, the adoption of artificial intelligence (AI)-enabled decision-making models in smart home systems faces challenges due to the complexity and black-box nature of these systems, leading to concerns about explainability, trust, transparency, accountability, and fairness. The emerging field of explainable artificial intelligence (XAI) addresses these issues by providing explanations for the models' decisions and actions. While state-of-the-art XAI methods are beneficial for AI developers and practitioners, they may not be easily understood by general users, particularly household members. This paper advocates for human-centered XAI methods, emphasizing the importance of delivering readily comprehensible explanations to enhance user satisfaction and drive the adoption of smart home systems. We review state-of-the-art XAI methods and prior studies focusing on human-centered explanations for general users in the context of smart home applications. Through experiments on two smart home application scenarios, we demonstrate that explanations generated by prominent XAI techniques might not be effective in helping users understand and make decisions. We thus argue for the necessity of a human-centric approach in representing explanations in smart home systems and highlight relevant human-computer interaction (HCI) methodologies, including user studies, prototyping, technology probes analysis, and heuristic evaluation, that can be employed to generate and present human-centered explanations to users.

Peeking Inside the Schufa Blackbox: Explaining the German Housing Scoring System

Nov 22, 2023

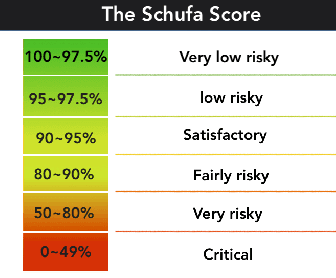

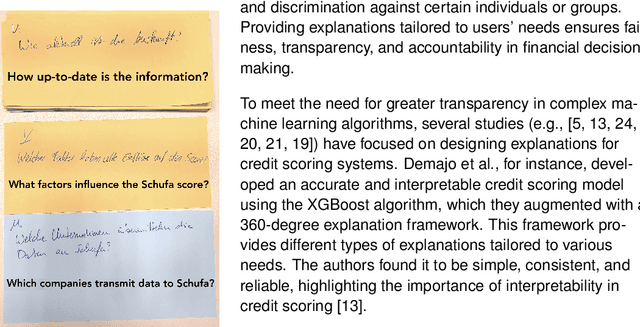

Abstract: Explainable Artificial Intelligence is a concept aimed at making complex algorithms transparent to users through a uniform solution. Researchers have highlighted the importance of integrating domain-specific contexts to develop explanations tailored to end users. In this study, we focus on the Schufa housing scoring system in Germany and investigate how users' information needs and expectations for explanations vary based on their roles. Using the speculative design approach, we asked business information students to imagine user interfaces that provide housing credit score explanations from the perspectives of both tenants and landlords. Our preliminary findings suggest that although there are general needs that apply to all users, there are also conflicting needs that depend on the practical realities of their roles and how credit scores affect them. We contribute to human-centered XAI research by proposing future research directions that examine users' explanatory needs considering their roles and agencies.
