Debjit Pal

Automated Hardware Trojan Insertion in Industrial-Scale Designs

Nov 11, 2025

Abstract:Industrial Systems-on-Chips (SoCs) often comprise hundreds of thousands to millions of nets and millions to tens of millions of connectivity edges, making empirical evaluation of hardware-Trojan (HT) detectors on realistic designs both necessary and difficult. Public benchmarks remain significantly smaller and hand-crafted, while releasing truly malicious RTL raises ethical and operational risks. This work presents an automated and scalable methodology for generating HT-like patterns in industry-scale netlists whose purpose is to stress-test detection tools without altering user-visible functionality. The pipeline (i) parses large gate-level designs into connectivity graphs, (ii) explores rare regions using SCOAP testability metrics, and (iii) applies parameterized, function-preserving graph transformations to synthesize trigger-payload pairs that mimic the statistical footprint of stealthy HTs. When evaluated on the benchmarks generated in this work, representative state-of-the-art graph-learning models fail to detect Trojans. The framework closes the evaluation gap between academic circuits and modern SoCs by providing reproducible challenge instances that advance security research without sharing step-by-step attack instructions.
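The abstract names SCOAP testability metrics as the way rare regions of a netlist are found. As a rough illustration of the metric only (not the authors' pipeline), the sketch below computes the textbook SCOAP combinational controllability values CC0 and CC1 on a tiny, made-up netlist and lists the nets that are hardest to control. The netlist, the net names, the function names, and the threshold are all hypothetical.

```python
from graphlib import TopologicalSorter  # Python 3.9+

# Hypothetical toy netlist: output net -> (gate type, input nets).
NETLIST = {
    "n1": ("AND", ["a", "b"]),
    "n2": ("AND", ["c", "d"]),
    "n3": ("AND", ["n1", "n2"]),
    "n4": ("OR", ["n3", "e"]),
    "n5": ("NOT", ["n4"]),
}
PRIMARY_INPUTS = ["a", "b", "c", "d", "e"]


def scoap_controllability(netlist, primary_inputs):
    """Return {net: (CC0, CC1)} using the standard combinational SCOAP rules."""
    # Primary inputs cost 1 to set to either value.
    cc = {pi: (1, 1) for pi in primary_inputs}
    # Visit each gate only after all of its input nets have been visited.
    deps = {net: set(ins) for net, (_, ins) in netlist.items()}
    for net in TopologicalSorter(deps).static_order():
        if net in cc:
            continue  # primary input, already initialised
        gate, ins = netlist[net]
        cc0s = [cc[i][0] for i in ins]
        cc1s = [cc[i][1] for i in ins]
        if gate == "AND":    # 0 needs any one input at 0; 1 needs all inputs at 1
            cc[net] = (min(cc0s) + 1, sum(cc1s) + 1)
        elif gate == "OR":   # 0 needs all inputs at 0; 1 needs any one input at 1
            cc[net] = (sum(cc0s) + 1, min(cc1s) + 1)
        elif gate == "NOT":  # controllabilities swap through an inverter
            cc[net] = (cc1s[0] + 1, cc0s[0] + 1)
        else:
            raise ValueError(f"unsupported gate type: {gate}")
    return cc


def hard_to_control(cc, threshold):
    """Nets whose worse controllability value reaches the (hypothetical) threshold."""
    return sorted(net for net, (c0, c1) in cc.items() if max(c0, c1) >= threshold)


cc = scoap_controllability(NETLIST, PRIMARY_INPUTS)
rare = hard_to_control(cc, threshold=6)
```

A full SCOAP analysis also covers observability and sequential measures; this sketch keeps only combinational controllability because that is enough to show how a "rare" net can be ranked.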

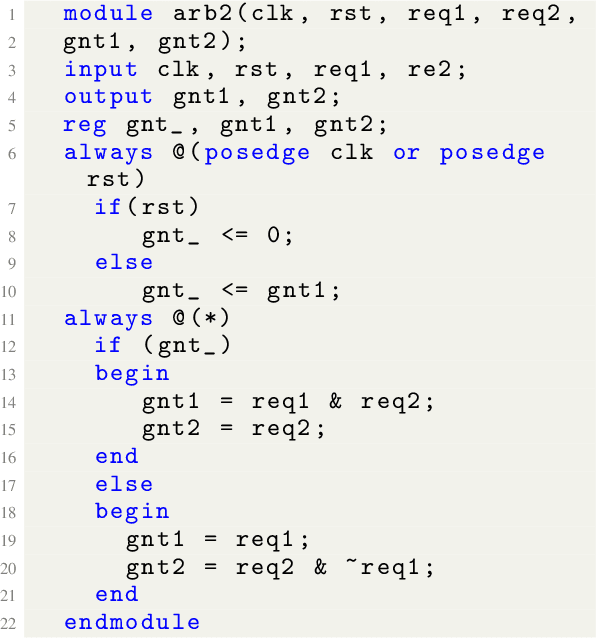

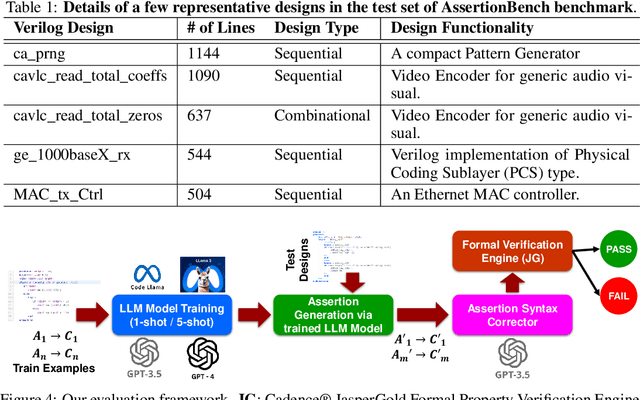

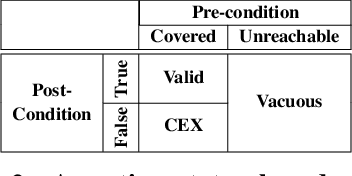

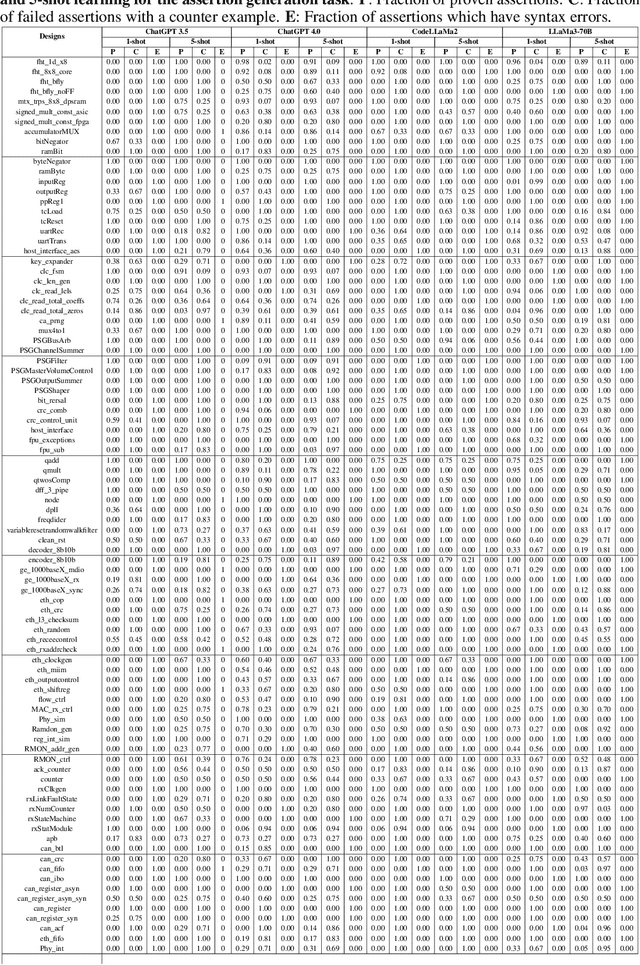

Are LLMs Ready for Practical Adoption for Assertion Generation?

Feb 28, 2025

Abstract:Assertions have been the de facto collateral for simulation-based and formal verification of hardware designs for over a decade. The quality of hardware verification, i.e., detection and diagnosis of corner-case design bugs, is critically dependent on the quality of the assertions. With the onset of generative AI such as Transformers and Large-Language Models (LLMs), there has been a renewed interest in developing novel, effective, and scalable techniques for generating functional and security assertions from design source code. While recent works use commercial-off-the-shelf (COTS) LLMs for assertion generation, there is no comprehensive study quantifying the effectiveness of LLMs in generating syntactically and semantically correct assertions. In this paper, we first discuss AssertionBench from our prior work, a comprehensive set of designs and assertions to quantify the goodness of a broad spectrum of COTS LLMs for the task of assertion generation from hardware design source code. Our key insight was that COTS LLMs are not yet ready for prime-time adoption for assertion generation, as they generate a considerable fraction of syntactically and semantically incorrect assertions. Motivated by this insight, we propose AssertionLLM, a first-of-its-kind LLM specifically fine-tuned for assertion generation. Our initial experimental results show that AssertionLLM considerably improves the semantic and syntactic correctness of the generated assertions over COTS LLMs.

AssertionBench: A Benchmark to Evaluate Large-Language Models for Assertion Generation

Jun 26, 2024

Abstract:Assertions have been the de facto collateral for simulation-based and formal verification of hardware designs for over a decade. The quality of hardware verification, i.e., detection and diagnosis of corner-case design bugs, is critically dependent on the quality of the assertions. There has been a considerable amount of research leveraging a blend of data-driven statistical analysis and static analysis to generate high-quality assertions from hardware design source code and design execution trace data. Despite such concerted effort, all prior research struggles to scale to industrial-scale designs, generates too many low-quality assertions, often fails to capture subtle and non-trivial design functionality, and does not produce easy-to-comprehend explanations of the generated assertions that would help judge their suitability for different downstream validation tasks. Recently, with the advent of Large-Language Models (LLMs), there has been a widespread effort to leverage prompt engineering to generate assertions. However, there is little effort to quantitatively establish the effectiveness and suitability of various LLMs for assertion generation. In this paper, we present AssertionBench, a novel benchmark to evaluate LLMs' effectiveness for assertion generation quantitatively. AssertionBench contains 100 curated Verilog hardware designs from OpenCores and formally verified assertions for each design generated from GoldMine and HARM. We use AssertionBench to compare state-of-the-art LLMs to assess their effectiveness in inferring functionally correct assertions for hardware designs. Our experiments demonstrate how LLMs perform relative to each other, the benefits of using more in-context exemplars in generating a higher fraction of functionally correct assertions, and the significant room for improvement for LLM-based assertion generators.

VeriBug: An Attention-based Framework for Bug-Localization in Hardware Designs

Jan 17, 2024

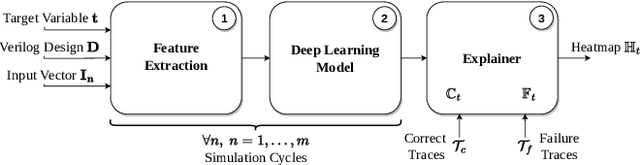

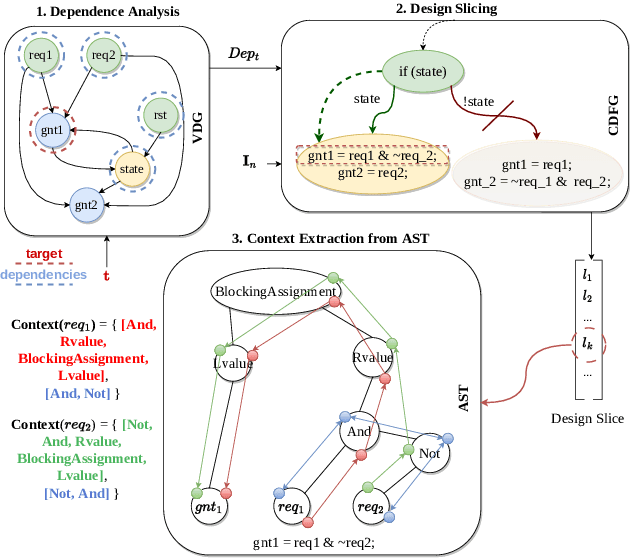

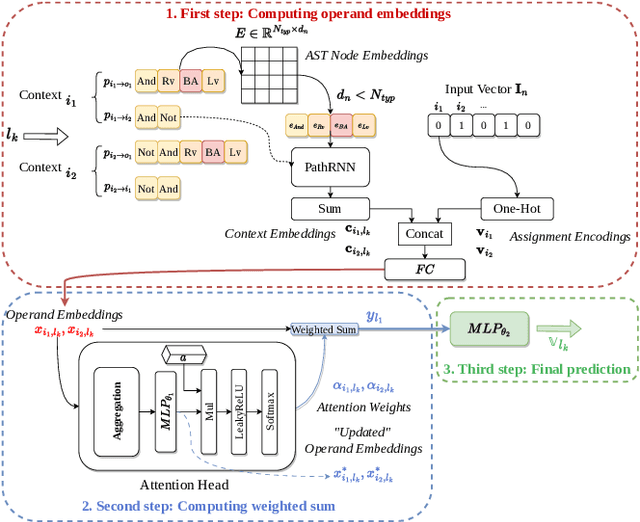

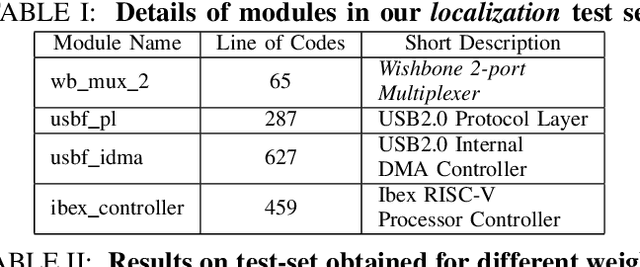

Abstract:In recent years, there has been an exponential growth in the size and complexity of System-on-Chip designs targeting different specialized applications. The cost of an undetected bug in these systems is much higher than in traditional processor systems, as it may imply the loss of property or life. The problem is further exacerbated by the ever-shrinking time-to-market and ever-increasing demand to churn out billions of devices. Despite decades of research in simulation and formal methods for debugging and verification, it is still one of the most time-consuming and resource-intensive processes in the contemporary hardware design cycle. In this work, we propose VeriBug, which leverages recent advances in deep learning to accelerate debugging at the Register-Transfer Level and generates explanations of likely root causes. First, VeriBug uses the control-data flow graph of a hardware design and learns to execute design statements by analyzing the context of operands and their assignments. Then, it assigns an importance score to each operand in a design statement and uses that score for generating explanations for failures. Finally, VeriBug produces a heatmap highlighting potentially buggy source code portions. Our experiments show that VeriBug can achieve an average bug localization coverage of 82.5% on open-source designs and different types of injected bugs.

Feature Engineering for Scalable Application-Level Post-Silicon Debugging

Feb 08, 2021

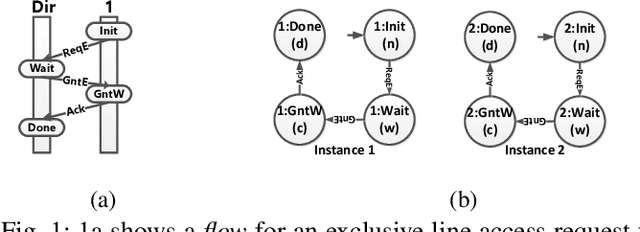

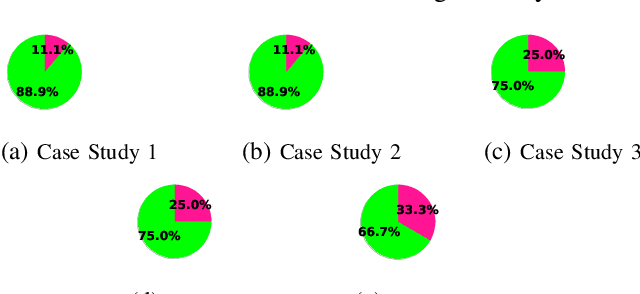

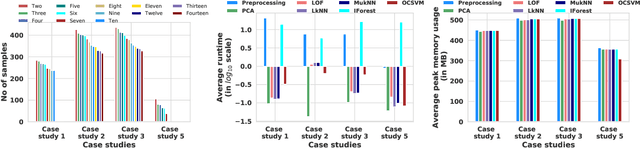

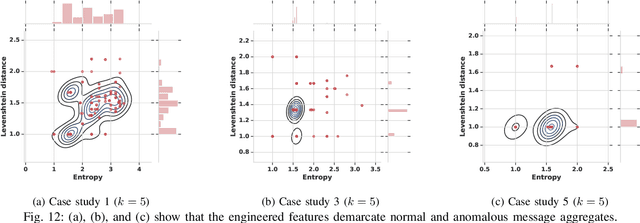

Abstract:We present systematic and efficient solutions for both observability enhancement and root-cause diagnosis in post-silicon System-on-Chip (SoC) validation with diverse usage scenarios. We model the specification of interacting flows in typical applications for message selection. Our method for message selection optimizes flow specification coverage and trace buffer utilization. We define the diagnosis problem as identifying buggy traces as outliers and bug-free traces as inliers/normal behaviors, for which we use unsupervised learning algorithms for outlier detection. Instead of directly applying machine learning algorithms over trace data using the signals as raw features, we use feature engineering to transform raw features into more sophisticated features using domain-specific operations. The engineered features are highly relevant to the diagnosis task and generic enough to be applied across hardware designs. We present debugging and root-cause analysis of subtle post-silicon bugs in the industry-scale OpenSPARC T2 SoC. We achieve a trace buffer utilization of 98.96% with a flow specification coverage of 94.3% (average). Our diagnosis method diagnosed up to 66.7% more bugs and took up to 847× less diagnosis time than manual debugging, with a diagnosis precision of 0.769.
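The diagnosis step above treats buggy traces as outliers among mostly normal traces and reports a diagnosis precision. The abstract does not say which unsupervised algorithms are used, so the sketch below substitutes a simple median-absolute-deviation (MAD) rule as a stand-in, applied to one hypothetical engineered feature per trace. The trace names, feature values, threshold, and function names are all assumptions made for illustration.

```python
from statistics import median


def mad_outliers(feature_by_trace, threshold=3.5):
    """Flag traces whose robust z-score (based on MAD) exceeds the threshold."""
    values = list(feature_by_trace.values())
    med = median(values)
    mad = median(abs(v - med) for v in values)
    if mad == 0:
        return []  # no spread around the median, so nothing can be ranked
    # 0.6745 rescales MAD so the score is comparable to a standard z-score.
    return sorted(
        trace
        for trace, v in feature_by_trace.items()
        if 0.6745 * abs(v - med) / mad > threshold
    )


def precision(flagged, truly_buggy):
    """Fraction of flagged traces that are actually buggy."""
    flagged = set(flagged)
    if not flagged:
        return 0.0
    return len(flagged & set(truly_buggy)) / len(flagged)


# Hypothetical engineered feature (one value per trace), not data from the paper.
FEATURES = {"t0": 10, "t1": 11, "t2": 9, "t3": 10, "t4": 12, "t5": 40, "t6": 10}

flagged = mad_outliers(FEATURES)
```

Here `precision(flagged, ["t5"])` would score the flagged set against a known-buggy list, which is how a figure such as the reported diagnosis precision could be computed once ground truth is available.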
