Davide Pastorello

A weighted quantum ensemble of homogeneous quantum classifiers

Jun 09, 2025

Abstract:Ensemble methods in machine learning aim to improve prediction accuracy by combining multiple models. This is achieved by ensuring diversity among predictors to capture different data aspects. Homogeneous ensembles use identical models, achieving diversity through different data subsets, and weighted-average ensembles assign higher influence to more accurate models through a weight learning procedure. We propose a method to achieve a weighted homogeneous quantum ensemble using quantum classifiers with indexing registers for data encoding. This approach leverages instance-based quantum classifiers, enabling feature and training point subsampling through superposition and controlled unitaries, and allowing for a quantum-parallel execution of diverse internal classifiers with different data compositions in superposition. The method integrates a learning process involving circuit execution and classical weight optimization, so that the trained ensemble is executed with the weights encoded in the circuit at test time. Empirical evaluation demonstrates the effectiveness of the proposed method, offering insights into its performance.
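As a purely classical illustration of the weighted-average idea mentioned in this abstract, the sketch below combines the predictions of several classifiers by weighted vote. It is not the quantum method of the paper: the function names `accuracy_weights` and `weighted_vote` are hypothetical, and normalising validation accuracies is only a stand-in assumption for the weight learning procedure the abstract refers to.

```python
from collections import defaultdict


def accuracy_weights(accuracies):
    """Turn per-classifier accuracies into weights that sum to 1.

    Assumption: this simple normalisation stands in for a learned
    weighting; the paper optimises its weights classically instead.
    """
    total = sum(accuracies)
    if total <= 0:
        raise ValueError("accuracies must have a positive sum")
    return [a / total for a in accuracies]


def weighted_vote(predictions, weights):
    """Return the label whose supporting classifiers carry the most weight."""
    if len(predictions) != len(weights):
        raise ValueError("one weight is required per prediction")
    totals = defaultdict(float)
    for label, weight in zip(predictions, weights):
        totals[label] += weight
    return max(totals, key=totals.get)


# Three hypothetical classifiers: the most accurate one predicts label 0,
# the two weaker ones predict label 1.
weights = accuracy_weights([0.9, 0.3, 0.3])
decision = weighted_vote([0, 1, 1], weights)
```

With these illustrative numbers the single stronger classifier outweighs the two weaker ones, which an unweighted majority vote would not allow.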

Mean-field limit from general mixtures of experts to quantum neural networks

Jan 24, 2025

Abstract:In this work, we study the asymptotic behavior of Mixture of Experts (MoE) trained via gradient flow on supervised learning problems. Our main result establishes the propagation of chaos for a MoE as the number of experts diverges. We demonstrate that the corresponding empirical measure of their parameters is close to a probability measure that solves a nonlinear continuity equation, and we provide an explicit convergence rate that depends solely on the number of experts. We apply our results to a MoE generated by a quantum neural network.

Local Binary and Multiclass SVMs Trained on a Quantum Annealer

Mar 13, 2024

Abstract:Support vector machines (SVMs) are widely used machine learning models (e.g., in remote sensing), with formulations for both classification and regression tasks. In recent years, with the advent of working quantum annealers, hybrid SVM models characterised by quantum training and classical execution have been introduced. These models have demonstrated comparable performance to their classical counterparts. However, they are limited in the training set size due to the restricted connectivity of the current quantum annealers. Hence, a strategy is required to take advantage of large datasets (like those related to Earth observation). In the classical domain, local SVMs, namely, SVMs trained on the data samples selected by a k-nearest neighbors model, have already proven successful. Here, the local application of quantum-trained SVM models is proposed and empirically assessed. In particular, this approach allows overcoming the constraints on the training set size of the quantum-trained models while enhancing their performance. In practice, the FaLK-SVM method, designed for efficient local SVMs, has been combined with quantum-trained SVM models for binary and multiclass classification. In addition, for comparison, FaLK-SVM has been interfaced for the first time with a classical single-step multiclass SVM model (CS SVM). For the empirical evaluation, D-Wave's quantum annealers and real-world datasets taken from the remote sensing domain have been employed. The results have shown the effectiveness and scalability of the proposed approach, as well as its practical applicability in a real-world large-scale scenario.

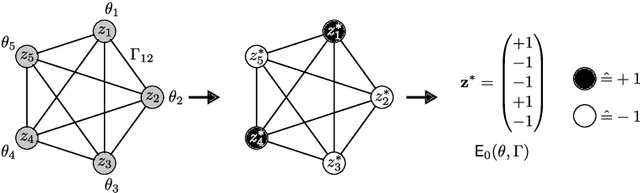

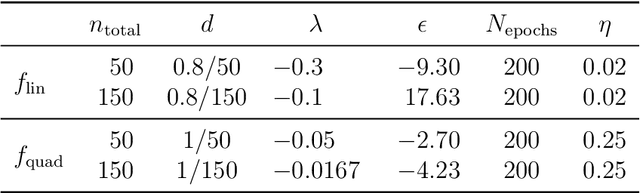

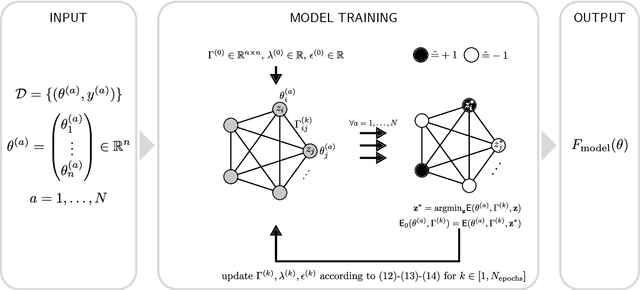

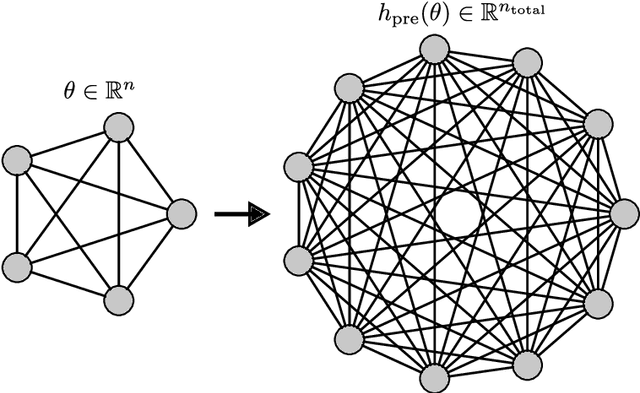

A general learning scheme for classical and quantum Ising machines

Oct 27, 2023

Abstract:An Ising machine is any hardware specifically designed for finding the ground state of the Ising model. Relevant examples are coherent Ising machines and quantum annealers. In this paper, we propose a new machine learning model that is based on the Ising structure and can be efficiently trained using gradient descent. We provide a mathematical characterization of the training process, which is based upon optimizing a loss function whose partial derivatives are not explicitly calculated but estimated by the Ising machine itself. Moreover, we present some experimental results on the training and execution of the proposed learning model. These results point out new possibilities offered by Ising machines for different learning tasks. In particular, in the quantum realm, the quantum resources are used for both the execution and the training of the model, providing a promising perspective in quantum machine learning.
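To make the notion of "finding the ground state of the Ising model" concrete, here is a minimal classical sketch that enumerates all spin configurations of a tiny instance and returns the one with the lowest energy. It assumes the sign convention E(s) = Σ h_i s_i + Σ J_ij s_i s_j with spins in {-1, +1}; the function names and the example coefficients are hypothetical and are not taken from the paper, whose learning model and training procedure are not reproduced here.

```python
from itertools import product


def ising_energy(spins, h, J):
    """Energy of one configuration under the assumed convention
    E(s) = sum_i h[i]*s[i] + sum_{(i,j)} J[(i,j)]*s[i]*s[j]."""
    energy = sum(h[i] * s for i, s in enumerate(spins))
    energy += sum(c * spins[i] * spins[j] for (i, j), c in J.items())
    return energy


def ground_state(h, J):
    """Brute-force search over all 2**n configurations.

    Only feasible for very small n; an Ising machine is hardware
    built to tackle this minimisation at larger sizes.
    """
    n = len(h)
    return min(product((-1, 1), repeat=n),
               key=lambda s: ising_energy(s, h, J))


# Two-spin toy instance (illustrative values): a local field on spin 0
# and a positive coupling that favours opposite spins.
h = [0.5, 0.0]
J = {(0, 1): 1.0}
best = ground_state(h, J)
best_energy = ising_energy(best, h, J)
```

For this toy instance the four energies are 0.5, -1.5, -0.5 and 1.5, so the minimum is attained at spins (-1, +1).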
