David Maberley

Learning from few examples: Classifying sex from retinal images via deep learning

Jul 20, 2022

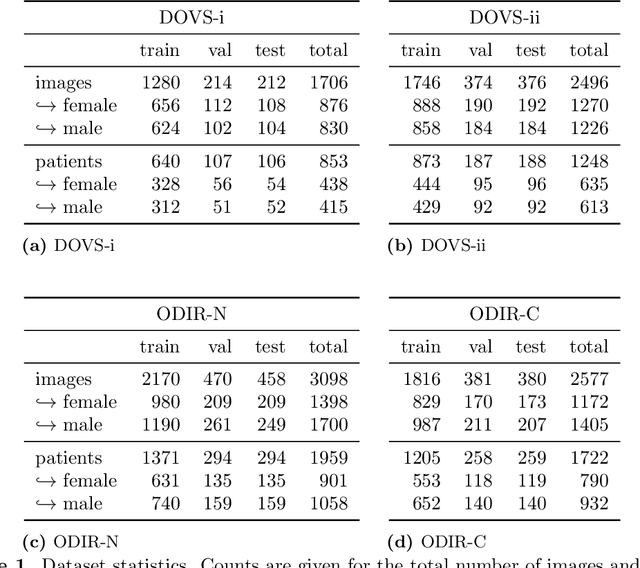

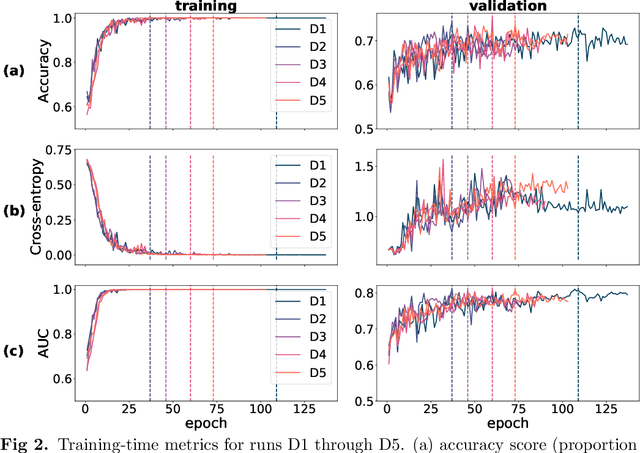

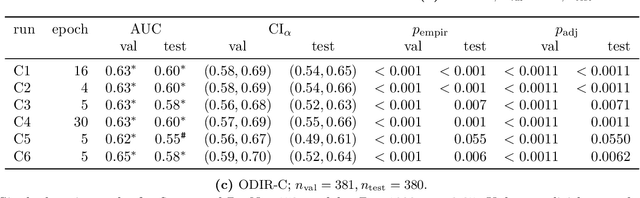

Abstract: Deep learning has seen tremendous interest in medical imaging, particularly in the use of convolutional neural networks (CNNs) for developing automated diagnostic tools. The ease of its non-invasive acquisition makes retinal fundus imaging amenable to such automated approaches. Recent work in analyzing fundus images using CNNs relies on access to massive data for training and validation - hundreds of thousands of images. However, data residency and data privacy restrictions stymie the applicability of this approach in medical settings where patient confidentiality is a mandate. Here, we showcase results for the performance of deep learning (DL) on small datasets to classify patient sex from fundus images - a trait thought not to be present or quantifiable in fundus images until recently. We fine-tune a ResNet-152 model whose last layer has been modified for binary classification. In several experiments, we assess performance in the small dataset context using one private (DOVS) and one public (ODIR) data source. Our models, developed using approximately 2500 fundus images, achieved test AUC scores of up to 0.72 (95% CI: [0.67, 0.77]). This corresponds to a mere 25% decrease in performance despite a nearly 1000-fold decrease in the dataset size compared to prior work in the literature. Even with a hard task like sex categorization from retinal images, we find that classification is possible with very small datasets. Additionally, we perform domain adaptation experiments between DOVS and ODIR; explore the effect of data curation on training and generalizability; and investigate model ensembling to maximize CNN classifier performance in the context of small development datasets.
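The abstract reports a test AUC together with a 95% confidence interval but does not say how the interval was obtained. The sketch below shows one common way to produce such a pair of numbers: a rank-based AUC and a percentile bootstrap over the test set. The bootstrap procedure, the function names `roc_auc` and `bootstrap_auc_ci`, and the toy labels and scores are all assumptions for illustration, not the authors' method or data.

```python
import random


def roc_auc(labels, scores):
    """AUC as the probability that a random positive outscores a random
    negative, with ties counted as one half."""
    pos = [s for y, s in zip(labels, scores) if y == 1]
    neg = [s for y, s in zip(labels, scores) if y == 0]
    if not pos or not neg:
        raise ValueError("need at least one positive and one negative label")
    wins = 0.0
    for p in pos:
        for n in neg:
            if p > n:
                wins += 1.0
            elif p == n:
                wins += 0.5
    return wins / (len(pos) * len(neg))


def bootstrap_auc_ci(labels, scores, n_boot=1000, alpha=0.05, seed=0):
    """Percentile bootstrap interval for the AUC (assumed procedure)."""
    rng = random.Random(seed)
    n = len(labels)
    aucs = []
    while len(aucs) < n_boot:
        idx = [rng.randrange(n) for _ in range(n)]
        ys = [labels[i] for i in idx]
        if len(set(ys)) < 2:
            # A resample with only one class has no defined AUC; redraw.
            continue
        aucs.append(roc_auc(ys, [scores[i] for i in idx]))
    aucs.sort()
    lower = aucs[int((alpha / 2) * n_boot)]
    upper = aucs[min(n_boot - 1, int((1 - alpha / 2) * n_boot))]
    return lower, upper


# Hypothetical toy data: 1 and 0 stand for the two sex labels.
toy_labels = [0, 0, 1, 1]
toy_scores = [0.1, 0.4, 0.35, 0.8]
auc = roc_auc(toy_labels, toy_scores)
ci_low, ci_high = bootstrap_auc_ci(toy_labels, toy_scores, n_boot=200)
```

With a test set of realistic size, the pairwise loop in `roc_auc` would normally be replaced by a sort-based computation, but the quadratic form keeps the definition explicit.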

Deep learning vessel segmentation and quantification of the foveal avascular zone using commercial and prototype OCT-A platforms

Sep 25, 2019

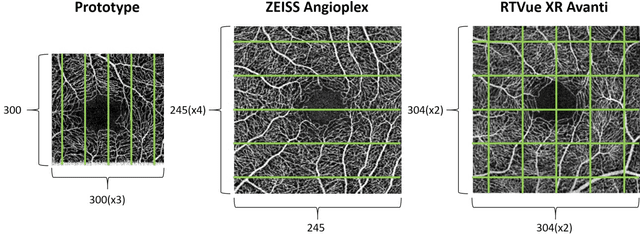

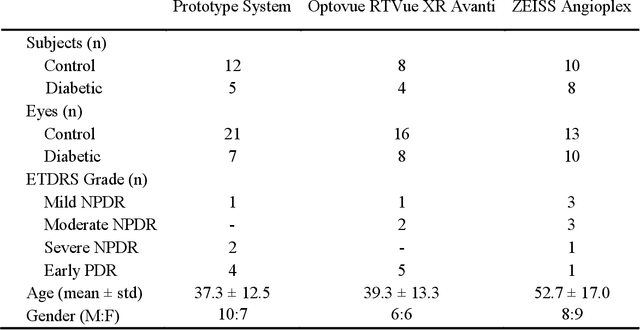

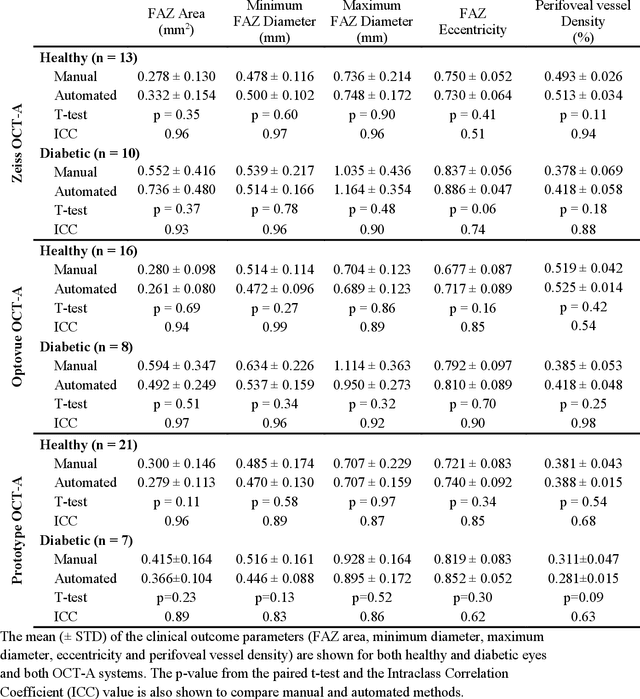

Abstract: Automatic quantification of perifoveal vessel densities in optical coherence tomography angiography (OCT-A) images faces challenges such as variable intra- and inter-image signal-to-noise ratios, projection artefacts from outer vasculature layers, and motion artefacts. This study demonstrates the utility of deep neural networks (DNNs) for automatic quantification of foveal avascular zone (FAZ) parameters and perifoveal vessel density of OCT-A images in healthy and diabetic eyes. OCT-A images of the foveal region were acquired using three OCT-A systems: a 1060 nm Swept Source (SS)-OCT prototype, RTVue XR Avanti (Optovue Inc., Fremont, CA), and the ZEISS Angioplex (Carl Zeiss Meditec, Dublin, CA). Automated segmentation was then performed using a deep neural network. Four FAZ morphometric parameters (area, min/max diameter, and eccentricity) and perifoveal vessel density were used as outcome measures. The accuracy, sensitivity and specificity of the DNN vessel segmentations were comparable across all three device platforms. No significant difference between the means of the measurements from automated and manual segmentations was found for any of the outcome measures on any system. The intraclass correlation coefficient (ICC) was also good (> 0.51) for all measurements. Automated deep learning vessel segmentation of OCT-A may be suitable for both commercial and research purposes for better quantification of the retinal circulation.
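For readers unfamiliar with how accuracy, sensitivity and specificity are scored for a vessel segmentation, the following minimal sketch compares an automated binary mask against a manual one pixel by pixel. It assumes masks are given as nested lists of 0/1 values (1 = vessel); the function name `segmentation_metrics` and the toy masks are hypothetical and are not taken from the study.

```python
def segmentation_metrics(pred_mask, true_mask):
    """Pixel-wise accuracy, sensitivity and specificity of a binary mask
    against a reference mask of the same shape (1 = vessel, 0 = background)."""
    tp = fp = tn = fn = 0
    for pred_row, true_row in zip(pred_mask, true_mask):
        for p, t in zip(pred_row, true_row):
            if p == 1 and t == 1:
                tp += 1
            elif p == 1 and t == 0:
                fp += 1
            elif p == 0 and t == 0:
                tn += 1
            else:
                fn += 1
    total = tp + fp + tn + fn
    return {
        "accuracy": (tp + tn) / total if total else None,
        # Sensitivity: fraction of true vessel pixels that were detected.
        "sensitivity": tp / (tp + fn) if (tp + fn) else None,
        # Specificity: fraction of background pixels correctly left unmarked.
        "specificity": tn / (tn + fp) if (tn + fp) else None,
    }


# Hypothetical 2x2 masks: automated output versus manual reference.
automated = [[1, 0], [1, 1]]
manual = [[1, 0], [0, 1]]
metrics = segmentation_metrics(automated, manual)
```

In this toy case the automated mask finds both vessel pixels but marks one background pixel as vessel, so sensitivity is high while specificity drops.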
