David M. Zoltowski

Unifying and generalizing models of neural dynamics during decision-making

Jan 13, 2020

Abstract: An open question in systems and computational neuroscience is how neural circuits accumulate evidence towards a decision. Fitting models of decision-making theory to neural activity helps answer this question, but current approaches limit the number of these models that we can fit to neural data. Here we propose a unifying framework for modeling neural activity during decision-making tasks. The framework includes the canonical drift-diffusion model and enables extensions such as multi-dimensional accumulators, variable and collapsing boundaries, and discrete jumps. Our framework is based on constraining the parameters of recurrent state-space models, for which we introduce a scalable variational Laplace-EM inference algorithm. We applied the modeling approach to spiking responses recorded from monkey parietal cortex during two decision-making tasks. We found that a two-dimensional accumulator better captured the trial-averaged responses of a set of parietal neurons than a single accumulator model. Next, we identified a variable lower boundary in the responses of an LIP neuron during a random dot motion task.
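For readers unfamiliar with the canonical drift-diffusion model mentioned in the abstract, the following is a minimal simulation sketch of a single one-dimensional accumulator with symmetric absorbing boundaries. It is an illustration only and is not the paper's recurrent state-space model or its inference algorithm. The function name `simulate_ddm` and all parameter names and defaults are assumptions chosen for this example.

```python
import numpy as np


def simulate_ddm(drift, noise_std, bound, dt=0.01, max_steps=1000, seed=0):
    """Simulate one trial of a 1-D drift-diffusion accumulator.

    All names and defaults here are illustrative assumptions.
    Returns (path, choice, decision_time), where choice is +1 (upper
    boundary), -1 (lower boundary), or 0 (no boundary reached).
    """
    rng = np.random.default_rng(seed)
    x = 0.0
    path = [x]
    for step in range(1, max_steps + 1):
        # Euler-Maruyama update: deterministic drift plus Gaussian noise.
        x += drift * dt + noise_std * np.sqrt(dt) * rng.standard_normal()
        if x >= bound:
            path.append(bound)  # absorb at the upper boundary
            return np.array(path), 1, step * dt
        if x <= -bound:
            path.append(-bound)  # absorb at the lower boundary
            return np.array(path), -1, step * dt
        path.append(x)
    return np.array(path), 0, max_steps * dt


if __name__ == "__main__":
    path, choice, rt = simulate_ddm(drift=0.5, noise_std=1.0, bound=1.0)
    print("choice:", choice, "decision time:", rt)
```

The extensions listed in the abstract would change pieces of this loop: a multi-dimensional accumulator replaces the scalar `x` with a vector, and a collapsing boundary makes `bound` a function of `step`.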

Efficient non-conjugate Gaussian process factor models for spike count data using polynomial approximations

Jun 07, 2019

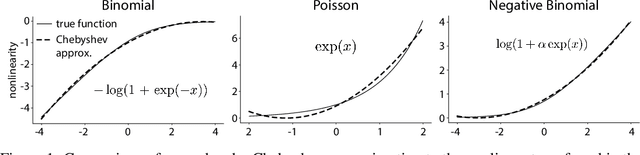

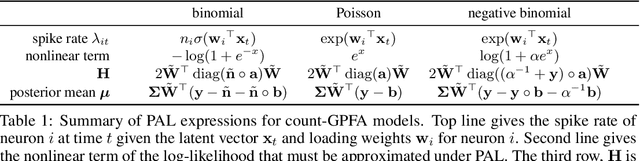

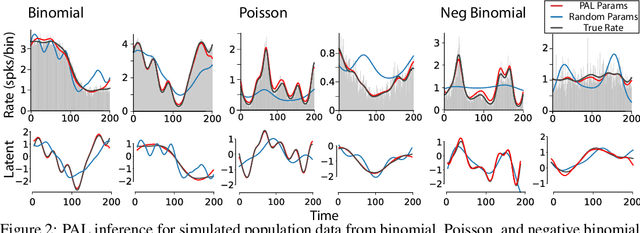

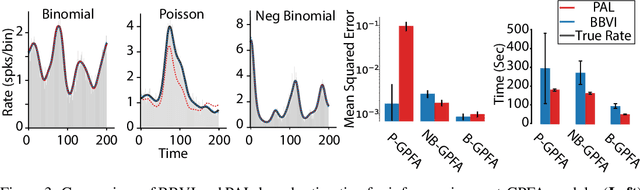

Abstract: Gaussian Process Factor Analysis (GPFA) has been broadly applied to the problem of identifying smooth, low-dimensional temporal structure underlying large-scale neural recordings. However, spike trains are non-Gaussian, which motivates combining GPFA with discrete observation models for binned spike count data. The drawback to this approach is that GPFA priors are not conjugate to count model likelihoods, which makes inference challenging. Here we address this obstacle by introducing a fast, approximate inference method for non-conjugate GPFA models. Our approach uses orthogonal second-order polynomials to approximate the nonlinear terms in the non-conjugate log-likelihood, resulting in a method we refer to as polynomial approximate log-likelihood (PAL) estimators. This approximation allows for accurate closed-form evaluation of marginal likelihood and fast numerical optimization for parameters and hyperparameters. We derive PAL estimators for GPFA models with binomial, Poisson, and negative binomial observations, and additionally show that the parameters obtained can be used to initialize black-box variational inference, which significantly speeds up and stabilizes the inference procedure for these factor analytic models. We apply these methods to data from mouse visual cortex and monkey higher-order visual and parietal cortices, and compare GPFA under three different spike count observation models to traditional GPFA. We demonstrate that PAL estimators achieve fast and accurate extraction of latent structure from multi-neuron spike train data.
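The core idea of replacing a nonlinear log-likelihood term with a second-order polynomial can be sketched for the Poisson case, where the log-likelihood with an exponential link is y·x − exp(x) up to a constant in x. The example below approximates exp(x) with a degree-2 Chebyshev interpolant on a fixed interval, which makes the approximate log-likelihood quadratic in x and therefore compatible with a Gaussian prior. This is a hedged illustration, not the paper's exact construction: the interval, the use of interpolation at Chebyshev points, and the function names are all assumptions.

```python
import numpy as np
from numpy.polynomial import Chebyshev


def quadratic_exp_approx(lo, hi):
    """Degree-2 Chebyshev interpolant of exp on [lo, hi] (assumed interval)."""
    return Chebyshev.interpolate(np.exp, deg=2, domain=[lo, hi])


def poisson_loglik_exact(y, x):
    """Poisson log-likelihood in the log-rate x, dropping the -log(y!) term."""
    return y * x - np.exp(x)


def poisson_loglik_approx(y, x, approx):
    """Same log-likelihood with exp(x) replaced by the quadratic approximation."""
    return y * x - approx(x)


if __name__ == "__main__":
    approx = quadratic_exp_approx(-1.0, 1.0)
    xs = np.linspace(-1.0, 1.0, 201)
    err = np.max(np.abs(approx(xs) - np.exp(xs)))
    print("max abs error of the quadratic on [-1, 1]:", err)
    gap = poisson_loglik_exact(2, xs) - poisson_loglik_approx(2, xs, approx)
    print("max log-likelihood gap for y = 2:", np.max(np.abs(gap)))
```

The approximation quality depends strongly on the chosen interval: a wider range of log-rates gives a larger error for a fixed polynomial degree, so the interval should cover the values the latent log-rate is expected to take.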
