David M. Brandman

BCI decoder performance comparison of an LSTM recurrent neural network and a Kalman filter in retrospective simulation

Dec 24, 2018

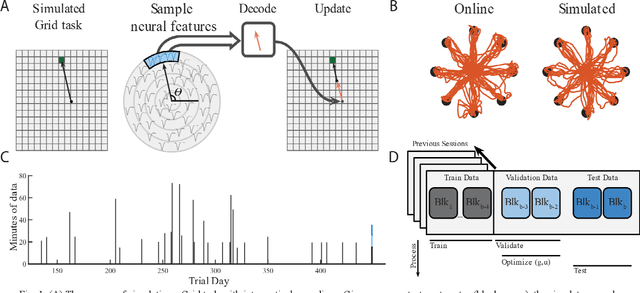

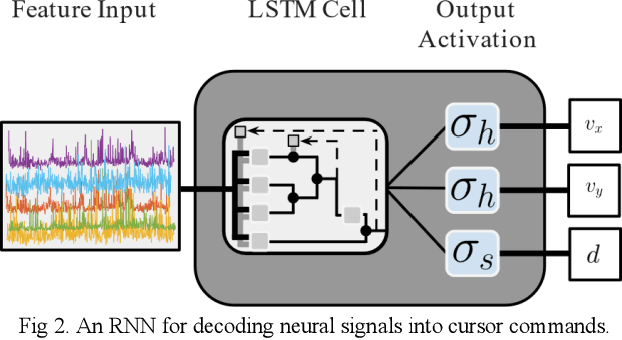

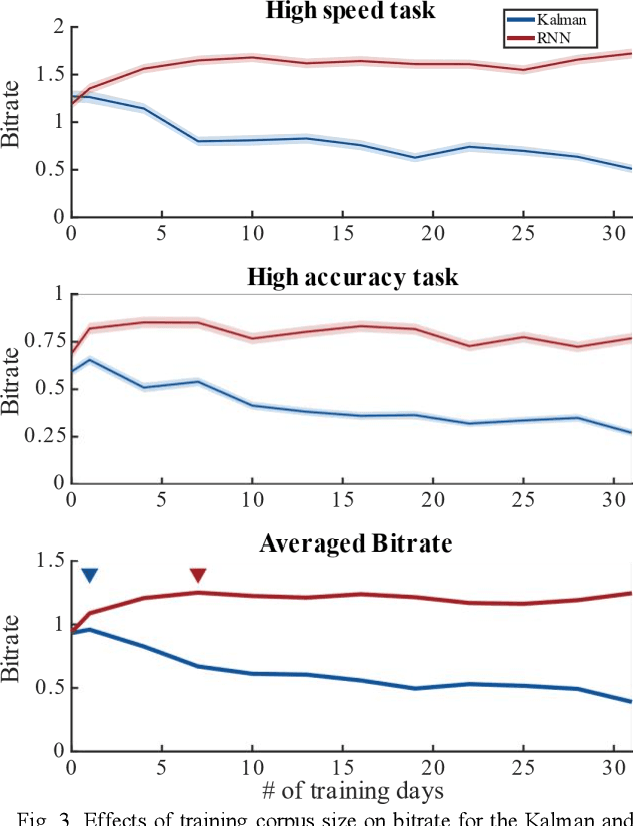

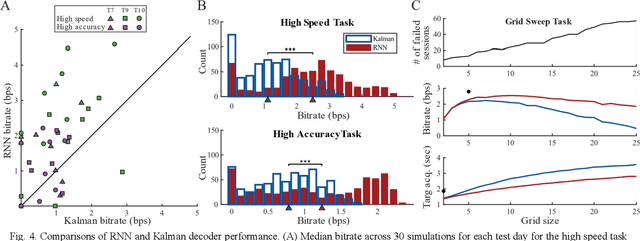

Abstract: Intracortical brain-computer interfaces (iBCIs) using linear Kalman decoders have enabled individuals with paralysis to control a computer cursor for continuous point-and-click typing on a virtual keyboard, browsing the internet, and using familiar tablet apps. However, further advances are needed to deliver iBCI-enabled cursor control approaching able-bodied performance. Motivated by recent evidence that nonlinear recurrent neural networks (RNNs) can provide higher-performance iBCI cursor control in nonhuman primates (NHPs), we evaluated decoding of intended cursor velocity from human motor cortical signals using a long short-term memory (LSTM) RNN trained across multiple days of multi-electrode recordings. Running simulations with previously recorded intracortical signals from three BrainGate iBCI trial participants, we demonstrate an RNN that can substantially increase the bits-per-second metric, compared to a Kalman decoder, in both a high-speed cursor-based target selection task and a challenging small-target, high-accuracy task. These results indicate that RNN decoding applied to human intracortical signals could achieve substantial performance advances in continuous 2-D cursor control, and they motivate a real-time RNN implementation for online evaluation by individuals with tetraplegia.

The discriminative Kalman filter for nonlinear and non-Gaussian sequential Bayesian filtering

Aug 30, 2016

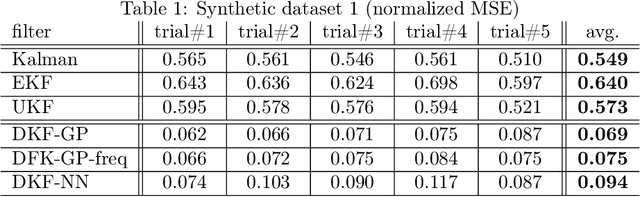

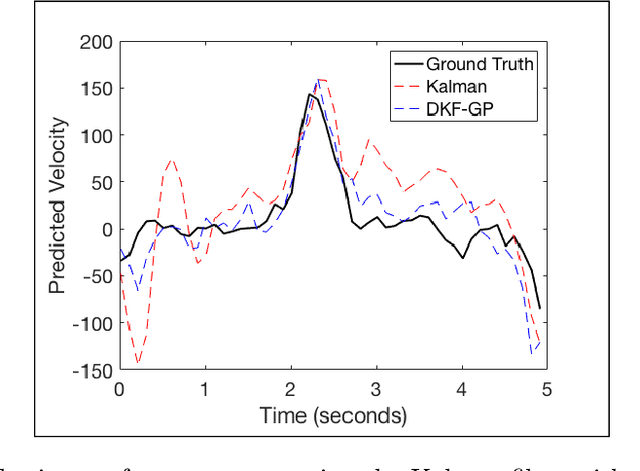

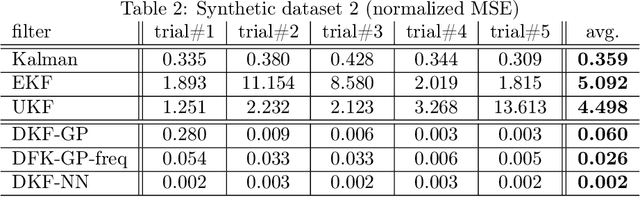

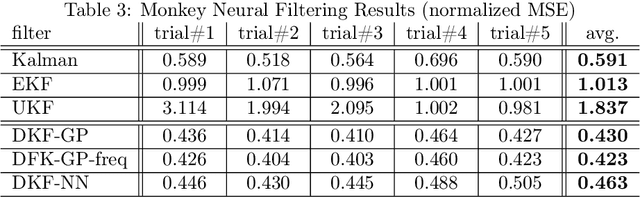

Abstract: The Kalman filter (KF) is used in a variety of applications for computing the posterior distribution of latent states in a state space model. The model requires a linear relationship between states and observations. Extensions to the Kalman filter have been proposed that incorporate linear approximations to nonlinear models, such as the extended Kalman filter (EKF) and the unscented Kalman filter (UKF). However, we argue that in cases where the dimensionality of observed variables greatly exceeds the dimensionality of state variables, a model for $p(\text{state}|\text{observation})$ proves both easier to learn and more accurate for latent space estimation. We derive and validate what we call the discriminative Kalman filter (DKF): a closed-form discriminative version of Bayesian filtering that readily incorporates off-the-shelf discriminative learning techniques. Further, we demonstrate that, given mild assumptions, highly nonlinear models for $p(\text{state}|\text{observation})$ can be specified. We motivate the approach and validate it on synthetic datasets and in neural decoding from non-human primates, showing substantial increases in decoding performance versus the standard Kalman filter.
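For orientation, the standard linear Kalman filter that the abstract uses as its baseline can be sketched as a single predict/update cycle. This is a minimal illustration of the textbook filter only, not the DKF and not the authors' code; the function name `kalman_step` and the parameter names `A`, `Q`, `H`, `R` are assumptions chosen for this sketch.

```python
import numpy as np

def kalman_step(mean, cov, obs, A, Q, H, R):
    """One predict/update cycle of the standard linear Kalman filter.

    Assumed (hypothetical) model for this sketch:
        state_t = A @ state_{t-1} + noise,  noise ~ N(0, Q)
        obs_t   = H @ state_t     + noise,  noise ~ N(0, R)
    """
    # Predict: push the previous posterior through the linear dynamics.
    pred_mean = A @ mean
    pred_cov = A @ cov @ A.T + Q

    # Update: correct the prediction using the new observation.
    innovation = obs - H @ pred_mean
    S = H @ pred_cov @ H.T + R                # innovation covariance
    K = np.linalg.solve(S, H @ pred_cov).T    # Kalman gain, P H^T S^{-1}
    new_mean = pred_mean + K @ innovation
    new_cov = (np.eye(len(mean)) - K @ H) @ pred_cov
    return new_mean, new_cov

# Example: a 2-D latent state observed through 10 noisy channels.
rng = np.random.default_rng(0)
A = np.eye(2)
Q = 0.01 * np.eye(2)
H = rng.standard_normal((10, 2))
R = np.eye(10)
mean, cov = kalman_step(np.zeros(2), np.eye(2), H @ np.array([1.0, -1.0]), A, Q, H, R)
```

The observation model `H` here maps a low-dimensional state to a higher-dimensional observation, which is the regime the abstract highlights; the DKF instead learns $p(\text{state}|\text{observation})$ directly, which this sketch does not attempt.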
