David Helbert

XLIM-ASALI

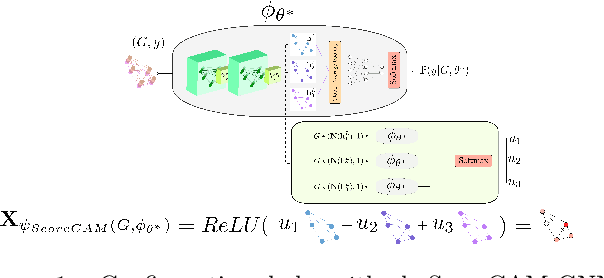

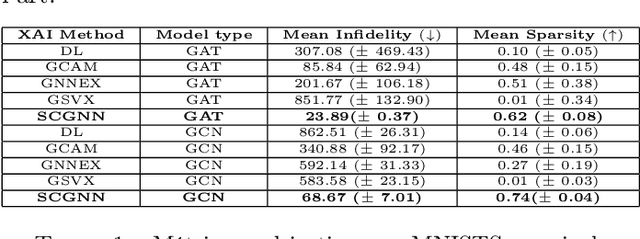

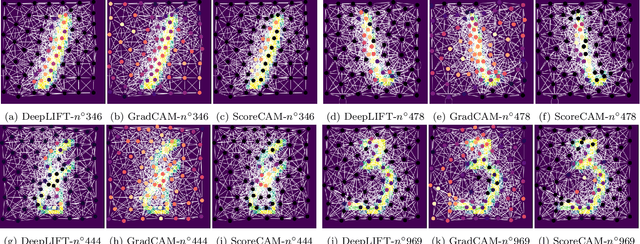

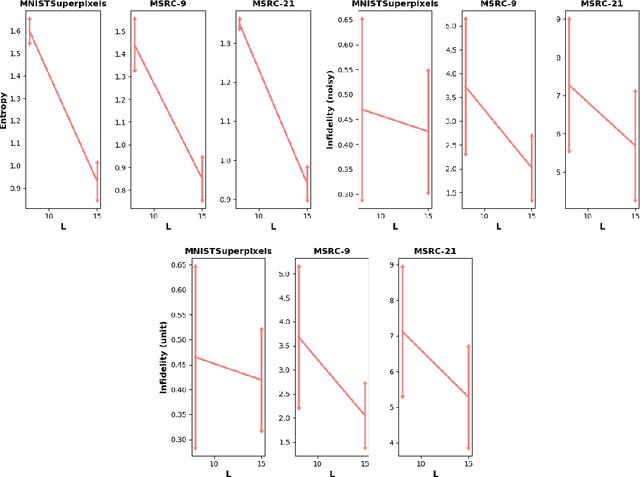

ScoreCAM GNN: une explication optimale des réseaux profonds sur graphes (ScoreCAM GNN: an optimal explanation of deep networks on graphs)

Jul 26, 2022

Abstract: The explainability of deep networks is becoming a central issue in the deep learning community. The same holds for learning on graphs, a data structure present in many real-world problems. In this paper, we propose a method that is more optimal, lighter, and more consistent than state-of-the-art methods, and that better exploits the topology of the evaluated graph.

EiX-GNN : Concept-level eigencentrality explainer for graph neural networks

Jun 07, 2022

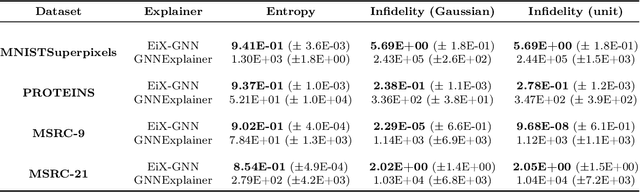

Abstract: Explaining is a human process of transferring knowledge about a phenomenon from an explainer to an explainee. Each word used in an explanation must be carefully chosen by the explainer according to the explainee's current knowledge of the phenomenon and to the phenomenon itself, so that the explainee understands it well. Nowadays, deep models, especially graph neural networks, play a major role in daily life, even in critical applications. In this context, these models need to be highly interpretable by humans, also referred to as being explainable, in order to improve trust in their use in sensitive cases. Explaining is also a human-dependent task, and methods that explain deep model behavior must take these social concerns into account to provide useful, high-quality explanations. Current explanation methods often overlook this social aspect and focus only on the signal aspect of the question. In this contribution, we propose a reliable, social-aware explanation method suited to graph neural networks that includes this social feature as a modular concept generator and leverages both signal and graph domain aspects through an eigencentrality concept ordering approach. Besides taking into account the human-dependent aspect underlying any explanation process, our method also reaches high scores on state-of-the-art objective metrics for assessing explanation methods for graph neural network models.
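The abstract does not say how the eigencentrality ordering is computed. As a purely illustrative sketch, assume the concepts are nodes of an undirected graph with non-negative edge weights (the graph, the function names, and the `A + I` shift are assumptions made here, not details from the paper). Eigenvector centrality can then be approximated by power iteration and used to rank the nodes:

```python
from math import sqrt

def eigencentrality(adj, iters=200, tol=1e-10):
    """Approximate eigenvector centrality by power iteration on (A + I).

    adj is a symmetric, non-negative adjacency matrix given as a list of lists.
    """
    n = len(adj)
    x = [1.0 / sqrt(n)] * n
    for _ in range(iters):
        # Multiply by (A + I); the identity shift keeps the leading eigenvector
        # of A but avoids oscillation on bipartite graphs.
        y = [x[i] + sum(adj[i][j] * x[j] for j in range(n)) for i in range(n)]
        norm = sqrt(sum(v * v for v in y))
        y = [v / norm for v in y]
        if max(abs(a - b) for a, b in zip(x, y)) < tol:
            return y
        x = y
    return x

def order_by_centrality(adj):
    """Return node indices sorted from most to least central."""
    scores = eigencentrality(adj)
    return sorted(range(len(adj)), key=lambda i: scores[i], reverse=True)

# Hypothetical 4-node graph: node 0 is linked to every other node,
# nodes 1 and 2 are also linked to each other, node 3 only to node 0.
adj = [
    [0, 1, 1, 1],
    [1, 0, 1, 0],
    [1, 1, 0, 0],
    [1, 0, 0, 0],
]
ranking = order_by_centrality(adj)
```

In this toy graph the best-connected node comes first and the pendant node last; how the actual method builds its concept graph is not stated in the abstract.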

Color graph based wavelet transform with perceptual information

Oct 19, 2015

Abstract: In this paper, we propose a numerical strategy to define a multiscale analysis for color and multicomponent images based on the representation of data on a graph. Our approach consists in computing the graph of an image using psychovisual information and analysing it with the spectral graph wavelet transform. We suggest introducing the color dimension into the computation of the graph weights and using the geodesic distance as the distance measure. We thus define a wavelet transform based on a graph with perceptual information by using the CIELab color distance. This new representation is illustrated with denoising and inpainting applications. Overall, by introducing psychovisual information into the graph computation for the graph wavelet transform, we obtain very promising results. The image restoration results therefore highlight the value of using color information appropriately.
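To make the idea of color-aware graph weights concrete, here is a minimal sketch under stated assumptions: the image is already given in CIELab coordinates, pixels are connected to their 4 neighbours, and weights come from a Gaussian kernel on the CIE76 color difference (the Euclidean distance in L\*a\*b\*). The kernel, the `sigma` value, and the function names are assumptions for illustration. The abstract mentions a geodesic distance, which this sketch replaces with the direct distance between neighbouring pixels.

```python
from math import exp, sqrt

def delta_e76(c1, c2):
    """CIE76 color difference: Euclidean distance between two (L*, a*, b*) triples."""
    return sqrt(sum((u - v) ** 2 for u, v in zip(c1, c2)))

def lab_grid_graph(lab, sigma=10.0):
    """Weight matrix of the 4-neighbour pixel grid of an H x W Lab image.

    lab[r][c] is an (L*, a*, b*) triple; pixel (r, c) maps to node r * W + c.
    The Gaussian kernel and sigma are illustrative assumptions.
    """
    h, w = len(lab), len(lab[0])
    n = h * w
    weights = [[0.0] * n for _ in range(n)]
    for r in range(h):
        for c in range(w):
            i = r * w + c
            # Visit the right and bottom neighbours only; symmetry fills the rest.
            for dr, dc in ((0, 1), (1, 0)):
                rr, cc = r + dr, c + dc
                if rr < h and cc < w:
                    j = rr * w + cc
                    d = delta_e76(lab[r][c], lab[rr][cc])
                    weights[i][j] = weights[j][i] = exp(-d * d / (2 * sigma ** 2))
    return weights

def laplacian(weights):
    """Combinatorial graph Laplacian L = D - W."""
    n = len(weights)
    return [
        [(sum(weights[i]) if i == j else 0.0) - weights[i][j] for j in range(n)]
        for i in range(n)
    ]

# Hypothetical 2 x 2 Lab image: three identical grey pixels and one saturated pixel.
lab = [
    [(50.0, 0.0, 0.0), (50.0, 0.0, 0.0)],
    [(50.0, 0.0, 0.0), (50.0, 60.0, 40.0)],
]
W = lab_grid_graph(lab)
L = laplacian(W)
```

Edges between perceptually similar pixels get weights close to 1, while edges across a strong color difference get weights close to 0. A spectral graph wavelet transform would then be defined on the spectrum of a Laplacian such as `L`; that step is omitted here.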
