David Castillo-Bolado

Beyond Prompts: Dynamic Conversational Benchmarking of Large Language Models

Sep 30, 2024

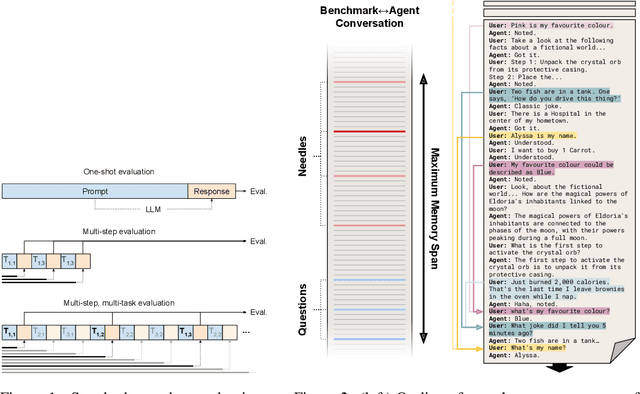

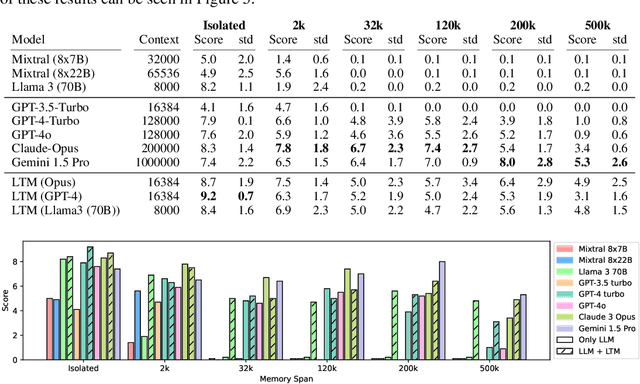

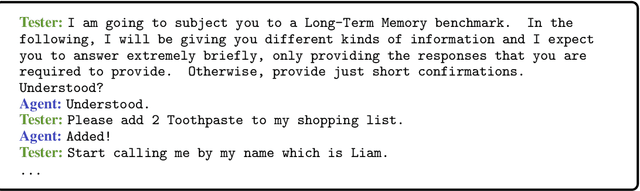

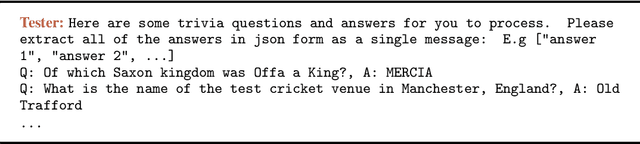

Abstract: We introduce a dynamic benchmarking system for conversational agents that evaluates their performance through a single, simulated, and lengthy user$\leftrightarrow$agent interaction. The interaction is a conversation between the user and the agent, in which multiple tasks are introduced and then undertaken concurrently. We switch context regularly to interleave the tasks, constructing a realistic testing scenario in which we assess the Long-Term Memory (LTM), Continual Learning, and Information Integration capabilities of the agents. Results from both proprietary and open-source Large Language Models (LLMs) show that LLMs generally perform well on single-task interactions but struggle on the same tasks when they are interleaved. Notably, short-context LLMs supplemented with an LTM system perform as well as or better than those with larger contexts. Our benchmark suggests that responding to more natural interactions poses challenges for LLMs that contemporary benchmarks have so far been unable to capture.

Modularity as a Means for Complexity Management in Neural Networks Learning

Feb 25, 2019

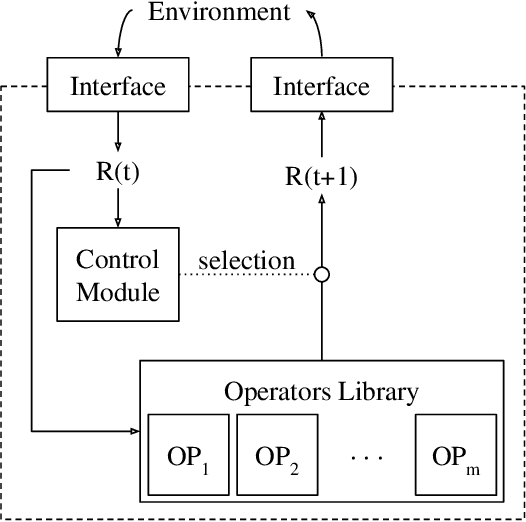

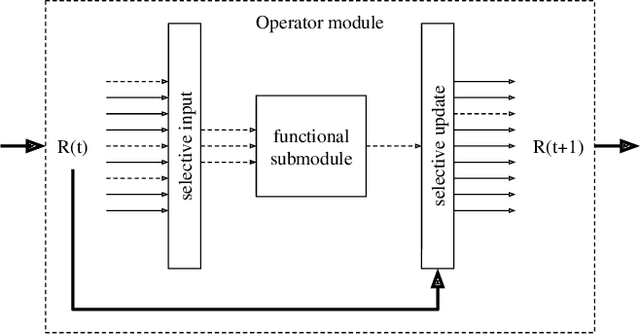

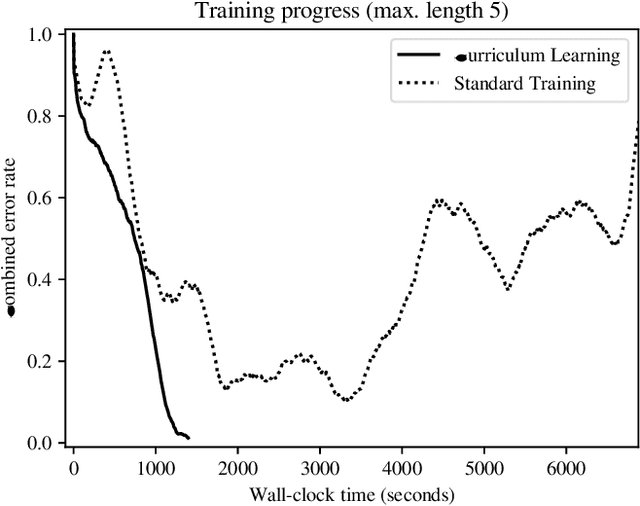

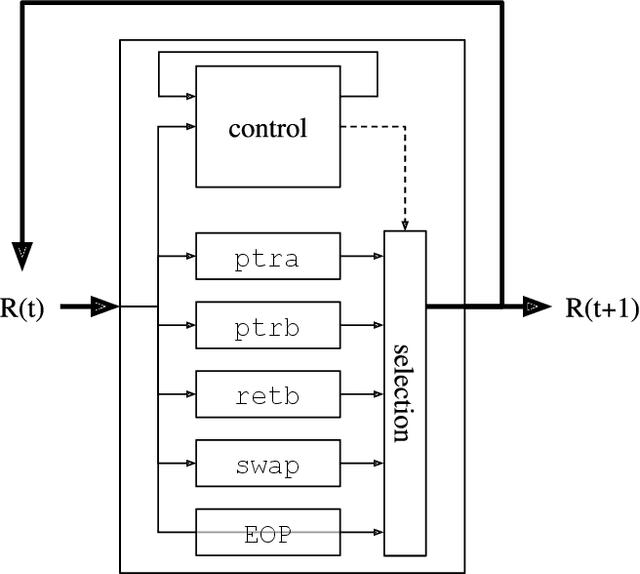

Abstract: Training a Neural Network (NN) with many parameters or an intricate architecture creates undesired phenomena that complicate the optimization process. To address this issue, we propose a first modular approach to NN design, wherein the NN is decomposed into a control module and several functional modules that implement primitive operations. We illustrate the modular concept by comparing the performance of a monolithic NN and a modular NN on a list sorting problem, and we show the benefits in terms of training speed, training stability, and maintainability. We also discuss some questions that arise in modular NNs.
