David Bojanic

Can Human Sex Be Learned Using Only 2D Body Keypoint Estimations?

Nov 05, 2020

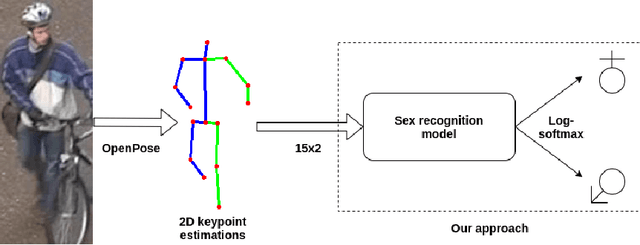

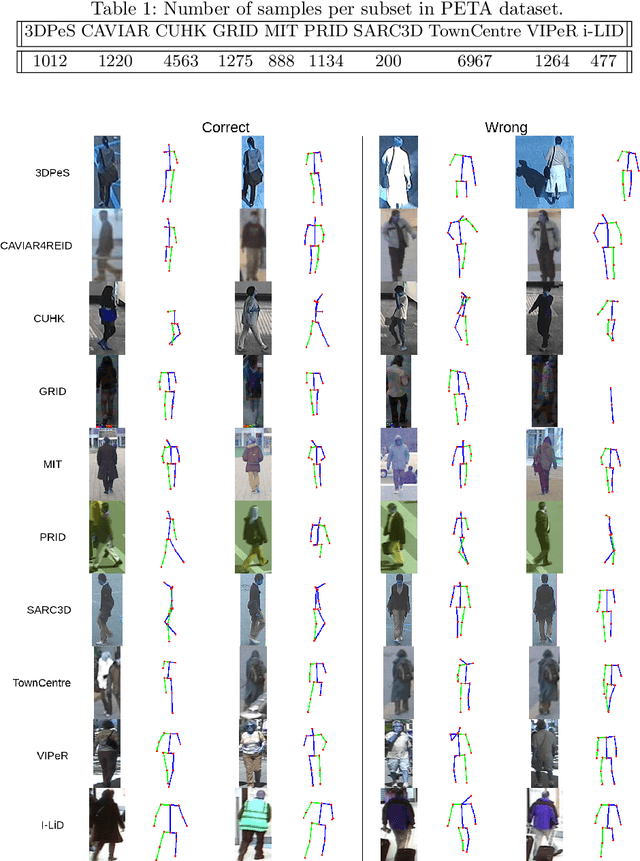

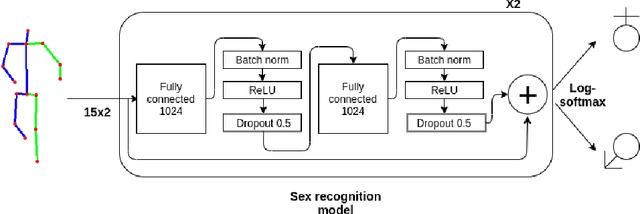

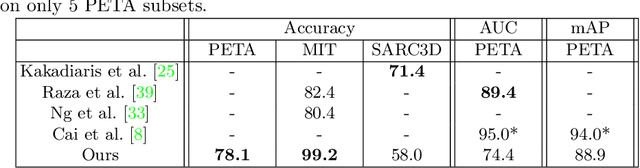

Abstract: In this paper, we analyze the problem of recognizing human sex (male or female) and present a fully automated classification system that uses only 2D keypoints. The keypoints represent human joints. A keypoint set consists of 15 joints, and the keypoint estimates are obtained using the OpenPose 2D keypoint detector. We train a deep learning model to distinguish males from females, using the keypoints as input and binary labels as output. We use two public datasets in the experimental section: 3DPeople and PETA. On the PETA dataset, we report 77% accuracy. We provide model performance details on both PETA and 3DPeople. To measure the effect of noisy 2D keypoint detections on performance, we run separate experiments on 3DPeople ground-truth and noisy keypoint data. Finally, we extract a set of factors that affect the classification accuracy and propose future work. The advantage of the approach is that the input is small and the architecture is simple, which enables us to run many experiments and maintain real-time performance at inference. The source code, with the experiments and data preparation scripts, is available on GitHub (https://github.com/kristijanbartol/human-sex-classifier).
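
To make the input/output shape described in this abstract concrete, here is a minimal sketch of a classifier that maps 15 2D joints (30 numbers) to a single probability. Everything beyond the 15-joint input and the binary output is an assumption made for illustration: the normalization step, the one-hidden-layer network, the hidden size, and the function names `normalize_keypoints`, `init_mlp`, and `predict_proba` are hypothetical and are not taken from the paper or its repository. The weights are random, so the output carries no meaning until the model is trained.

```python
import math
import random

NUM_JOINTS = 15              # keypoint set size stated in the abstract
INPUT_DIM = NUM_JOINTS * 2   # one (x, y) pair per joint


def normalize_keypoints(keypoints):
    """Center keypoints on their mean and divide by the largest extent.

    This preprocessing is an assumption for the sketch, not the paper's method.
    """
    xs = [x for x, _ in keypoints]
    ys = [y for _, y in keypoints]
    cx, cy = sum(xs) / len(xs), sum(ys) / len(ys)
    scale = max(max(xs) - min(xs), max(ys) - min(ys)) or 1.0
    flat = []
    for x, y in keypoints:
        flat.extend([(x - cx) / scale, (y - cy) / scale])
    return flat


def init_mlp(hidden_dim=32, seed=0):
    """Create random weights for a one-hidden-layer network (hypothetical size)."""
    rng = random.Random(seed)
    w1 = [[rng.gauss(0, 0.1) for _ in range(INPUT_DIM)] for _ in range(hidden_dim)]
    b1 = [0.0] * hidden_dim
    w2 = [rng.gauss(0, 0.1) for _ in range(hidden_dim)]
    b2 = 0.0
    return w1, b1, w2, b2


def predict_proba(params, features):
    """Forward pass: ReLU hidden layer, then a sigmoid over a single logit."""
    w1, b1, w2, b2 = params
    hidden = [
        max(0.0, sum(w * f for w, f in zip(row, features)) + b)
        for row, b in zip(w1, b1)
    ]
    logit = sum(w * h for w, h in zip(w2, hidden)) + b2
    return 1.0 / (1.0 + math.exp(-logit))


if __name__ == "__main__":
    # Synthetic keypoints standing in for one detected skeleton.
    skeleton = [(i * 10.0, i * 5.0) for i in range(NUM_JOINTS)]
    features = normalize_keypoints(skeleton)
    print(len(features), predict_proba(init_mlp(), features))
```

Because the input is only 30 numbers per person, even a much larger network than this one stays cheap to evaluate, which is consistent with the abstract's point about running many experiments and keeping inference real-time.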

Smart Time-Multiplexing of Quads Solves the Multicamera Interference Problem

Nov 05, 2020

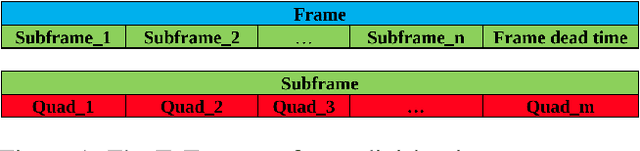

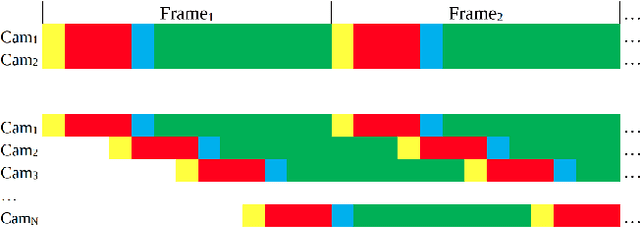

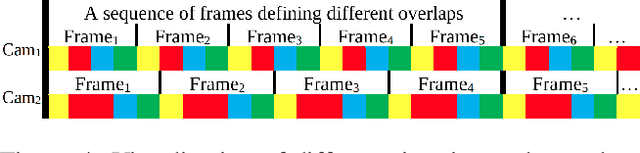

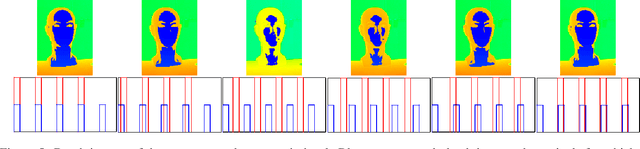

Abstract: Time-of-flight (ToF) cameras are becoming increasingly popular for 3D imaging. Their optimal usage has been studied from several aspects. One open research problem is multicamera interference, which can occur when two or more ToF cameras operate simultaneously. In this work, we present an efficient method to synchronize multiple operating ToF cameras. Our method is based on time-division multiplexing, but unlike traditional time multiplexing, it does not decrease the effective camera frame rate. Additionally, for unsynchronized cameras, we provide a robust method to extract from their video streams the frames that are not subject to multicamera interference. We demonstrate our approach through a series of experiments with different levels of triggering support, ranging from hardware triggering to purely random software triggering.

Towards Keypoint Guided Self-Supervised Depth Estimation

Nov 05, 2020

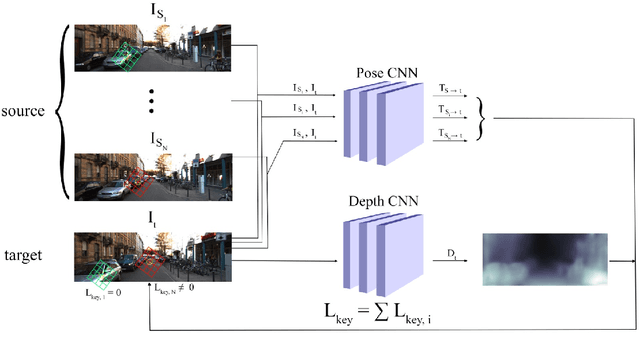

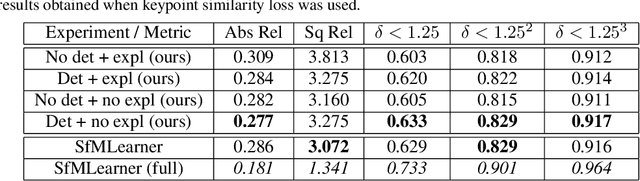

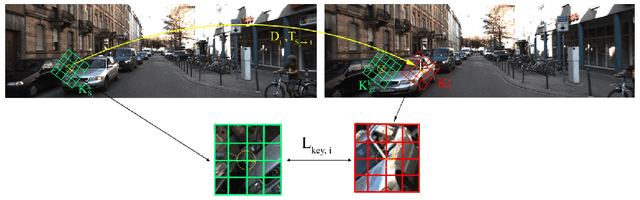

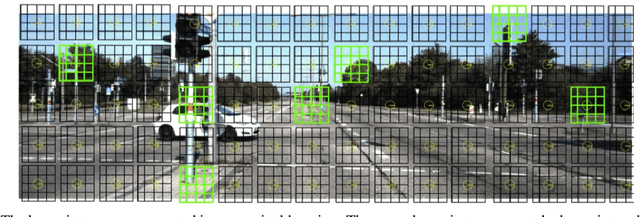

Abstract: This paper proposes using keypoints as a self-supervision cue for learning depth map estimation from a collection of input images. Because ground-truth depth for real images is difficult to obtain, many unsupervised and self-supervised approaches to depth estimation have been proposed. Most of these approaches use depth map and ego-motion estimates to reproject pixels from the current image into an adjacent image from the collection. The depth and ego-motion estimates are then evaluated based on pixel intensity differences between the corresponding original and reprojected pixels. Instead of reprojecting individual pixels, we propose to first select image keypoints in both images and then reproject and compare the corresponding keypoints of the two images. The keypoints should describe the distinctive image features well. By training a deep model with and without the keypoint extraction technique, we show that using keypoints improves depth estimation learning. We also propose some future directions for keypoint-guided learning of structure-from-motion problems.
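
The reprojection step described in this abstract can be sketched with a standard pinhole camera model: lift a keypoint to 3D using its estimated depth, move it with the estimated ego-motion, project it into the adjacent image, and measure the pixel distance to the matched keypoint. This is an illustrative sketch only. The intrinsics tuple `K = (fx, fy, cx, cy)`, the function names, and the mean Euclidean distance used as the error are assumptions; the loss actually used in the paper may differ.

```python
def backproject(pixel, depth, K):
    """Lift pixel (u, v) with a depth value to a 3D point in the camera frame."""
    fx, fy, cx, cy = K
    u, v = pixel
    return ((u - cx) * depth / fx, (v - cy) * depth / fy, depth)


def transform(point, R, t):
    """Apply the rigid motion R * point + t (R is a 3x3 nested list)."""
    return tuple(
        sum(R[i][j] * point[j] for j in range(3)) + t[i] for i in range(3)
    )


def project(point, K):
    """Project a 3D camera-frame point to pixel coordinates."""
    fx, fy, cx, cy = K
    x, y, z = point
    return (fx * x / z + cx, fy * y / z + cy)


def keypoint_reprojection_error(kps_a, depths_a, kps_b, K, R, t):
    """Mean pixel distance between reprojected keypoints of image A
    and their matched keypoints in image B."""
    total = 0.0
    for kp_a, depth, kp_b in zip(kps_a, depths_a, kps_b):
        u, v = project(transform(backproject(kp_a, depth, K), R, t), K)
        total += ((u - kp_b[0]) ** 2 + (v - kp_b[1]) ** 2) ** 0.5
    return total / len(kps_a)


if __name__ == "__main__":
    K = (500.0, 500.0, 320.0, 240.0)           # hypothetical intrinsics
    R = [[1.0, 0.0, 0.0], [0.0, 1.0, 0.0], [0.0, 0.0, 1.0]]
    t = (0.1, 0.0, 0.0)                        # hypothetical ego-motion
    kps_a = [(320.0, 240.0)]
    depths_a = [2.0]
    kps_b = [(345.0, 240.0)]                   # where the point lands in image B
    print(keypoint_reprojection_error(kps_a, depths_a, kps_b, K, R, t))
```

In a learning setup, the depths and the motion `(R, t)` would come from the networks being trained, so a lower error on matched keypoints indicates more consistent depth and ego-motion estimates.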
