Darsen D. Lu

CIMulator: A Comprehensive Simulation Platform for Computing-In-Memory Circuit Macros with Low Bit-Width and Real Memory Materials

Jun 26, 2023

Abstract: This paper presents a simulation platform, CIMulator, for quantifying the efficacy of various synaptic devices in neuromorphic accelerators across different neural network architectures. Nonvolatile memory devices, such as resistive random-access memory (RRAM) and ferroelectric field-effect transistors (FeFETs), as well as volatile static random-access memory (SRAM) devices, can be selected as synaptic devices. A multilayer perceptron and convolutional neural networks (CNNs), such as LeNet-5, VGG-16, and a custom CNN named C4W-1, are simulated to evaluate the effects of these synaptic devices on training and inference outcomes. The datasets used in the simulations are MNIST, CIFAR-10, and a white blood cell dataset. By applying batch normalization and appropriate optimizers in the training phase, neuromorphic systems with very low bit-width or binary weights can achieve high pattern recognition rates that approach software-based CNN accuracy. We also introduce spiking neural networks with RRAM-based synaptic devices for the recognition of MNIST handwritten digits.
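To illustrate what "very low bit-width or binary weights" means for a synaptic array, the sketch below snaps real-valued weights onto a small set of levels, each of which would correspond to one programmable conductance state of a device. It assumes uniform quantization over [-max|w|, +max|w|] and, for 1 bit, sign binarization scaled by the mean weight magnitude. These are common textbook choices, not CIMulator's actual code or API; the function name `quantize_weights` is hypothetical.

```python
import numpy as np

def quantize_weights(w: np.ndarray, bits: int) -> np.ndarray:
    """Hypothetical helper: map weights onto 2**bits evenly spaced levels.

    bits == 1 gives binary weights {-alpha, +alpha}, alpha = mean(|w|)
    (an assumed scaling choice, not taken from the paper).
    """
    if bits < 1:
        raise ValueError("bits must be >= 1")
    if bits == 1:
        # Binary weights: keep only the sign, scaled by the mean magnitude.
        alpha = np.mean(np.abs(w))
        return np.where(w >= 0, alpha, -alpha)
    w_max = np.max(np.abs(w))
    if w_max == 0:
        return np.zeros_like(w)
    intervals = 2 ** bits - 1        # 2**bits levels span this many intervals
    step = 2 * w_max / intervals
    # Snap each weight to the nearest grid point -w_max + k * step, k = 0..intervals.
    k = np.round((w + w_max) / step)
    return -w_max + k * step

w = np.array([-0.9, -0.2, 0.1, 0.7])
w_bin = quantize_weights(w, 1)   # approximately [-0.475, -0.475, 0.475, 0.475]
w_2b = quantize_weights(w, 2)    # approximately [-0.9, -0.3, 0.3, 0.9]
```

In a real accelerator the levels would be set by the device's achievable conductance states rather than by an ideal uniform grid, which is exactly the kind of non-ideality a device-aware simulator is meant to capture.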

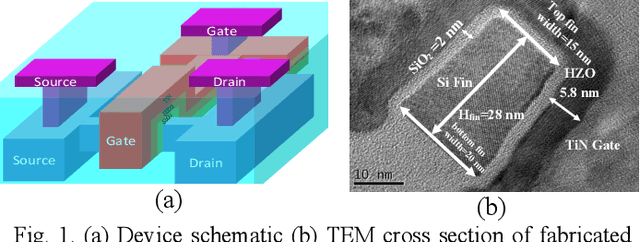

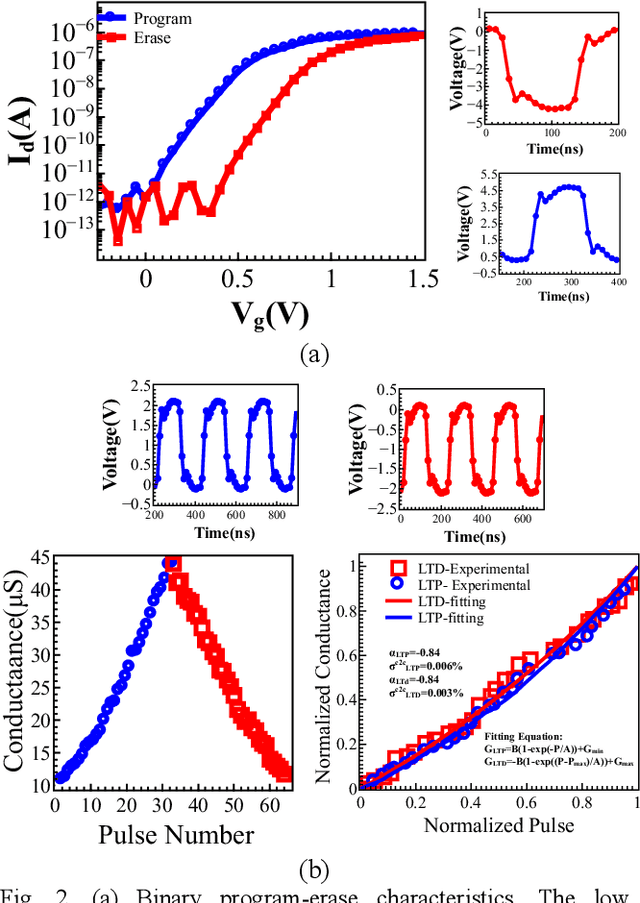

Alleviation of Temperature Variation Induced Accuracy Degradation in Ferroelectric FinFET Based Neural Network

Mar 03, 2021

Abstract: This paper reports the impact of temperature variation on the inference accuracy of pre-trained all-ferroelectric FinFET deep neural networks, along with possible design techniques to mitigate it. We adopted a pre-trained artificial neural network (NN) with 96.4% inference accuracy on the MNIST dataset as the baseline. The temperature-induced conductance drift of a programmed cell was captured by a compact model over a wide range of gate bias. We observe significant inference accuracy degradation in the analog neural network at 233 K for an NN trained at 300 K. Finally, we deployed binary neural networks with "read voltage" optimization to make the NN immune to accuracy degradation under temperature variation, maintaining an inference accuracy of 96.1%.
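The intuition for why binary networks tolerate temperature drift better than analog ones can be sketched with a toy model. Here conductance is assumed to scale linearly with temperature through a hypothetical coefficient `tc`; this is NOT the compact model used in the paper, and the conductance values and sense threshold are illustrative only. An analog weight inherits the full relative drift, while a binary cell read against a threshold keeps its state as long as the drift does not push it across that threshold.

```python
import numpy as np

T_TRAIN = 300.0  # K, training temperature stated in the abstract

def drifted_conductance(g: np.ndarray, temp: float, tc: float) -> np.ndarray:
    """Hypothetical linear drift: g(T) = g * (1 + tc * (T - T_TRAIN)).

    tc (per kelvin) is an assumed coefficient, not the paper's compact model.
    """
    return g * (1.0 + tc * (temp - T_TRAIN))

def read_binary(g: np.ndarray, g_threshold: float) -> np.ndarray:
    """Binary readout: 1 if the cell conductance exceeds the sense threshold, else 0."""
    return (g > g_threshold).astype(int)

# Hypothetical two-state cells (siemens): high- and low-conductance states.
g_low, g_high = 1e-6, 1e-5
cells = np.array([g_high, g_low, g_high, g_low])
threshold = 0.5 * (g_low + g_high)  # sense threshold midway between the two states

# Cool from 300 K to 233 K with an assumed tc of 2e-3 per kelvin.
cold = drifted_conductance(cells, temp=233.0, tc=2e-3)

# Relative error an analog weight would see (about 13% with these assumptions).
analog_shift = np.max(np.abs(cold - cells) / cells)

# Binary states survive because the drift stays well inside the sensing margin.
bits_unchanged = np.array_equal(read_binary(cells, threshold),
                                read_binary(cold, threshold))
```

The sketch only captures the margin argument; the abstract's "read voltage" optimization, which presumably selects a bias point where the drift is less harmful, is not modeled here.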
