Md. Aftab Baig

CIMulator: A Comprehensive Simulation Platform for Computing-In-Memory Circuit Macros with Low Bit-Width and Real Memory Materials

Jun 26, 2023

Abstract: This paper presents a simulation platform, namely CIMulator, for quantifying the efficacy of various synaptic devices in neuromorphic accelerators for different neural network architectures. Nonvolatile memory devices, such as resistive random-access memory (RRAM) and ferroelectric field-effect transistors, as well as volatile static random-access memory devices, can be selected as synaptic devices. A multilayer perceptron and convolutional neural networks (CNNs), such as LeNet-5, VGG-16, and a custom CNN named C4W-1, are simulated to evaluate the effects of these synaptic devices on the training and inference outcomes. The datasets used in the simulations are MNIST, CIFAR-10, and a white blood cell dataset. By applying batch normalization and appropriate optimizers in the training phase, neuromorphic systems with very low-bit-width or binary weights can achieve high pattern recognition rates that approach software-based CNN accuracy. We also introduce spiking neural networks with RRAM-based synaptic devices for the recognition of MNIST handwritten digits.

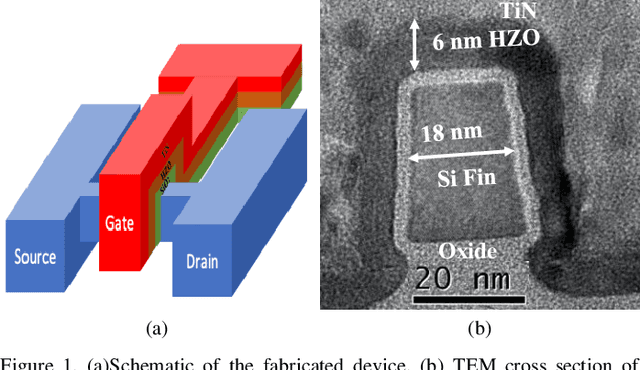

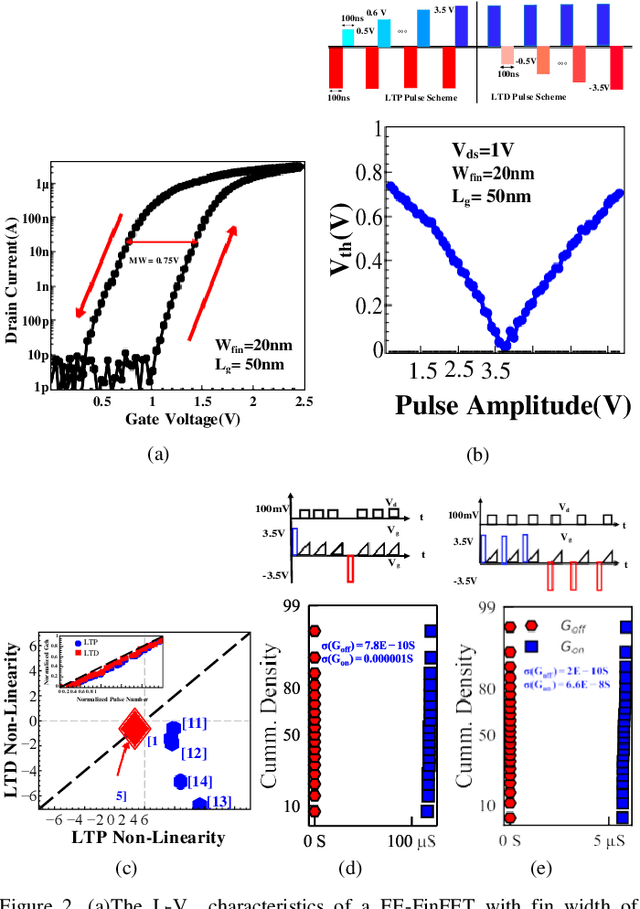

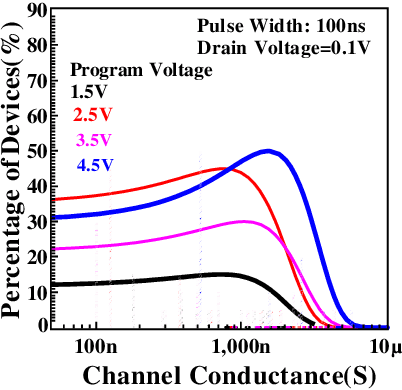

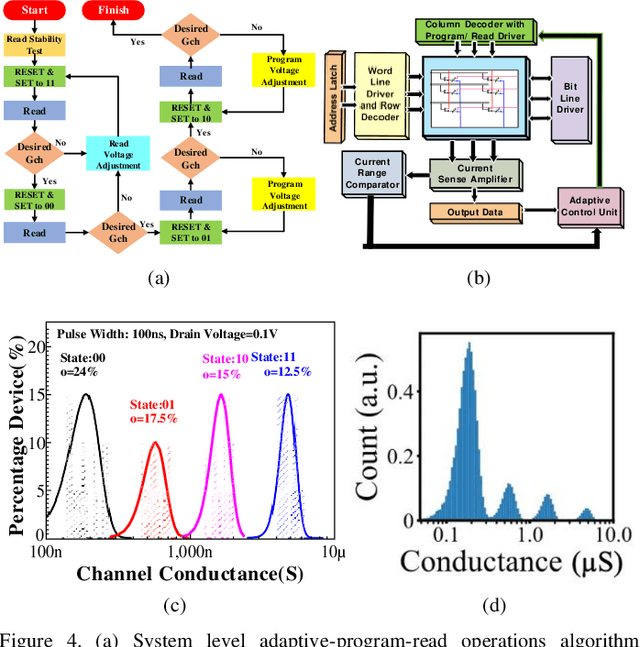

Neuromorphic Computing with Deeply Scaled Ferroelectric FinFET in Presence of Process Variation, Device Aging and Flicker Noise

Mar 05, 2021

Abstract: This paper reports a comprehensive study on the applicability of ultra-scaled ferroelectric FinFETs with a 6 nm thick hafnium zirconium oxide layer for neuromorphic computing in the presence of process variation, flicker noise, and device aging. A detailed study has been conducted on the impact of such variations on the inference accuracy of pre-trained neural networks consisting of analog, quaternary (2-bit/cell), and binary synapses. A pre-trained neural network with 97.5% inference accuracy on the MNIST dataset has been adopted as the baseline. Process variation, flicker noise, and device aging have been characterized, and a statistical model has been developed to capture all these effects during neural network simulation. Extrapolated retention above 10 years has been achieved for the binary read-out procedure. We have demonstrated that the impact of (1) retention degradation due to oxide thickness scaling, (2) process variation, and (3) flicker noise can be abated in ferroelectric FinFET based binary neural networks, which exhibit superior performance over quaternary and analog neural networks amidst all variations. The performance of a neural network is the combined result of the performance of the device, architecture, and algorithm. This research corroborates the applicability of deeply scaled ferroelectric FinFETs for non-von Neumann computing with a proper combination of architecture and algorithm.
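
To give some intuition for why binary synapses may tolerate device variation better than quaternary ones, the following is a minimal sketch and not the statistical model from the paper. It assumes weights normalized to [-1, 1], evenly spaced conductance states, and a single Gaussian noise term standing in for process variation, flicker noise, and aging combined. The function names and the `sigma` value are hypothetical.

```python
import random

def quantize(weights, levels):
    """Snap each weight (clamped to [-1, 1]) to the nearest of `levels` evenly spaced states."""
    step = 2.0 / (levels - 1)
    out = []
    for w in weights:
        w = max(-1.0, min(1.0, w))
        idx = round((w + 1.0) / step)
        out.append(-1.0 + idx * step)
    return out

def read_with_variation(stored, levels, sigma, rng):
    """Assumed read-out: add Gaussian noise to the stored state, then re-quantize."""
    noisy = [w + rng.gauss(0.0, sigma) for w in stored]
    return quantize(noisy, levels)

def state_error_rate(levels, sigma, n=20000, seed=0):
    """Fraction of synapses whose read-out state differs from the stored state."""
    rng = random.Random(seed)
    weights = [rng.uniform(-1.0, 1.0) for _ in range(n)]
    stored = quantize(weights, levels)
    read = read_with_variation(stored, levels, sigma, rng)
    errors = sum(1 for s, r in zip(stored, read) if abs(s - r) > 1e-9)
    return errors / n

if __name__ == "__main__":
    sigma = 0.2  # hypothetical noise level, in units of the normalized weight range
    print("binary (2 states):    ", state_error_rate(2, sigma))
    print("quaternary (4 states):", state_error_rate(4, sigma))
```

With two states the decision margin is half the full weight range, while with four states it shrinks to one sixth of it, so the same noise level flips far more quaternary states than binary ones in this toy model.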
