Darja Fišer

The FRENK Datasets of Socially Unacceptable Discourse in Slovene and English

Jun 13, 2019

Abstract: In this paper we present datasets of Facebook comment threads on mainstream media posts in Slovene and English, developed within the Slovene national project FRENK. The datasets cover two topics, migrants and LGBT, and are manually annotated for different types of socially unacceptable discourse (SUD). Their main advantages over existing datasets are identical sampling procedures, which produce comparable data across languages, and an annotation schema that distinguishes six types of SUD and five targets at which SUD is directed. We describe the sampling and annotation procedures and analyze the annotation distributions and inter-annotator agreement. We consider these datasets an important milestone in understanding and combating SUD in both languages.

KAS-term: Extracting Slovene Terms from Doctoral Theses via Supervised Machine Learning

Jun 05, 2019

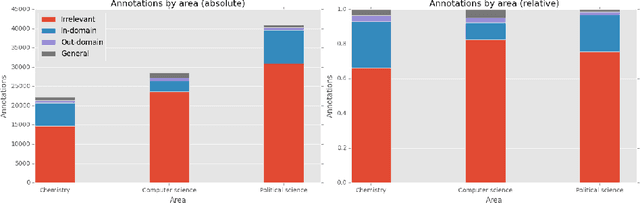

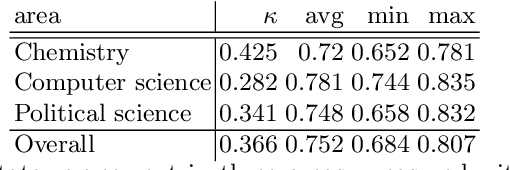

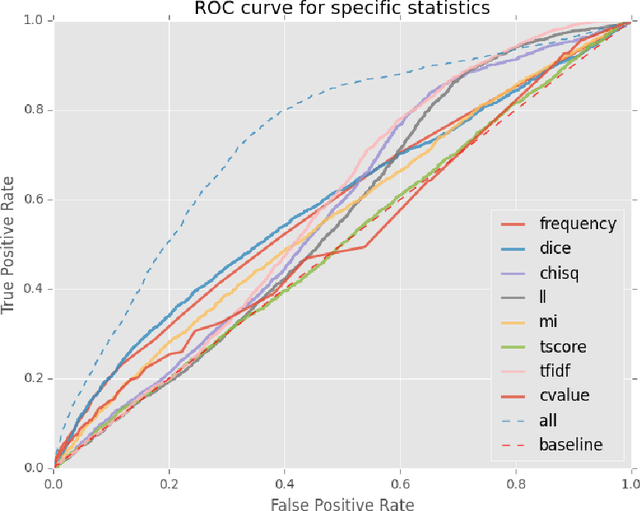

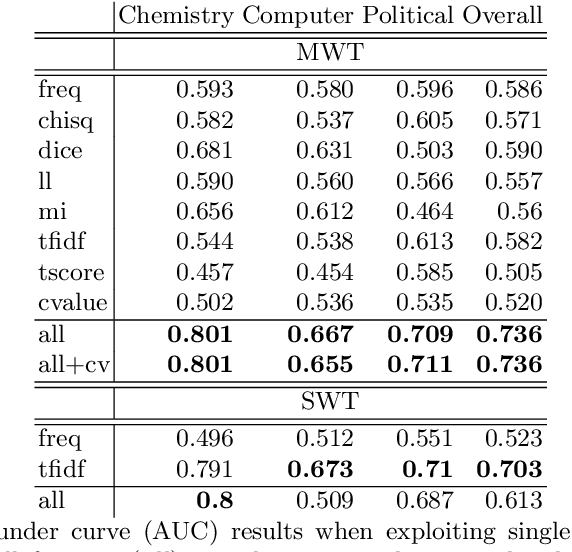

Abstract: This paper presents a dataset and supervised learning experiments for term extraction from Slovene academic texts. Term candidates in the dataset were extracted via morphosyntactic patterns and annotated for their termness by four annotators. Experiments on the dataset show that most co-occurrence statistics, applied after morphosyntactic patterns and a frequency threshold, perform close to random, and that the results can be significantly improved by using supervised machine learning to combine all seven statistical measures included in the dataset. On multi-word terms, the model using all statistics obtains an AUC of 0.736, while the best single statistic reaches an AUC of only 0.590. Among many additional candidate features, only multi-word morphosyntactic pattern information and the length of single-word term candidates yield further improvements.
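For readers unfamiliar with the AUC figures quoted above, the following is a minimal sketch of how a single scoring statistic can be evaluated as a ranker of term candidates. It is not the authors' evaluation code: the function name `auc`, the toy scores, and the toy labels are all hypothetical and chosen only for illustration. The sketch uses the pairwise definition of AUC, namely the probability that a randomly chosen true term receives a higher score than a randomly chosen non-term, so a value near 0.5 corresponds to the "close to random" behaviour the abstract describes.

```python
def auc(scores, labels):
    """Pairwise AUC: fraction of (positive, negative) pairs in which the
    positive item has the higher score; ties count as half a win."""
    pos = [s for s, y in zip(scores, labels) if y == 1]
    neg = [s for s, y in zip(scores, labels) if y == 0]
    if not pos or not neg:
        raise ValueError("need at least one positive and one negative example")
    wins = 0.0
    for p in pos:
        for n in neg:
            if p > n:
                wins += 1.0
            elif p == n:
                wins += 0.5
    return wins / (len(pos) * len(neg))


if __name__ == "__main__":
    # Hypothetical data: 1 = annotated as a term, 0 = not a term.
    labels = [1, 0, 1, 0, 1, 0]
    # Hypothetical scores from one co-occurrence statistic.
    single_statistic = [0.6, 0.5, 0.4, 0.7, 0.3, 0.2]
    print(f"AUC of the single statistic: {auc(single_statistic, labels):.3f}")
```

The quadratic loop is fine for a toy example; on a dataset of realistic size a rank-based computation or a library routine would be the more practical choice.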

Predicting Concreteness and Imageability of Words Within and Across Languages via Word Embeddings

Jul 09, 2018

Abstract: The notions of concreteness and imageability, traditionally important in psycholinguistics, are gaining significance in semantics-oriented natural language processing tasks. In this paper we investigate the predictability of these two concepts via supervised learning, using word embeddings as explanatory variables. We perform predictions both within and across languages by exploiting collections of cross-lingual embeddings aligned to a single vector space. We show that concreteness and imageability are highly predictable both within and across languages, with a moderate loss of up to 20% in correlation when predicting across languages. We further show that cross-lingual transfer via word embeddings is more efficient than simple transfer via bilingual dictionaries.