Darian Hoagland

Integrating Virtual Reality and Large Language Models for Team-Based Non-Technical Skills Training and Evaluation in the Operating Room

Jan 19, 2026

Abstract: Although effective teamwork and communication are critical to surgical safety, structured training for non-technical skills (NTS) remains limited compared with technical simulation. The ACS/APDS Phase III Team-Based Skills Curriculum calls for scalable tools that both teach and objectively assess these competencies during laparoscopic emergencies. We introduce the Virtual Operating Room Team Experience (VORTeX), a multi-user virtual reality (VR) platform that integrates immersive team simulation with large language model (LLM) analytics to train and evaluate communication, decision-making, teamwork, and leadership. Team dialogue is analyzed using structured prompts derived from the Non-Technical Skills for Surgeons (NOTSS) framework, enabling automated classification of behaviors and generation of directed interaction graphs that quantify communication structure and hierarchy. Two laparoscopic emergency scenarios, pneumothorax and intra-abdominal bleeding, were implemented to elicit realistic stress and collaboration. Twelve surgical professionals completed pilot sessions at the 2024 SAGES conference, rating VORTeX as intuitive, immersive, and valuable for developing teamwork and communication. The LLM consistently produced interpretable communication networks reflecting expected operative hierarchies, with surgeons as central integrators, nurses as initiators, and anesthesiologists as balanced intermediaries. By integrating immersive VR with LLM-driven behavioral analytics, VORTeX provides a scalable, privacy-compliant framework for objective assessment and automated, data-informed debriefing across distributed training environments.
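The directed interaction graphs mentioned in the abstract can be pictured with a small sketch. It is not the VORTeX pipeline: it assumes the dialogue has already been reduced to (speaker, addressee) pairs, and the function names and sample turns are invented for illustration only.

```python
from collections import Counter


def build_interaction_graph(turns):
    """Count directed speaker -> addressee edges from a list of turns.

    `turns` is a hypothetical input format: (speaker, addressee) tuples.
    """
    edges = Counter()
    for speaker, addressee in turns:
        if speaker != addressee:  # ignore self-directed remarks
            edges[(speaker, addressee)] += 1
    return edges


def degree_summary(edges):
    """Return weighted out-degree and in-degree for every role in the graph."""
    out_deg = Counter()
    in_deg = Counter()
    for (src, dst), weight in edges.items():
        out_deg[src] += weight
        in_deg[dst] += weight
    roles = sorted(set(out_deg) | set(in_deg))
    return {role: {"out": out_deg[role], "in": in_deg[role]} for role in roles}


# Invented sample turns, not data from the study.
sample_turns = [
    ("nurse", "surgeon"),
    ("surgeon", "anesthesiologist"),
    ("anesthesiologist", "surgeon"),
    ("nurse", "surgeon"),
    ("surgeon", "nurse"),
]

if __name__ == "__main__":
    graph = build_interaction_graph(sample_turns)
    for role, degrees in degree_summary(graph).items():
        print(role, degrees)
```

In this toy reading, a role with high in-degree receives most of the communication, and a role with high out-degree relative to in-degree mostly initiates it. Which graph measures the authors actually use is not stated in the abstract.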

Evaluating Model Performance with Hard-Swish Activation Function Adjustments

Oct 09, 2024

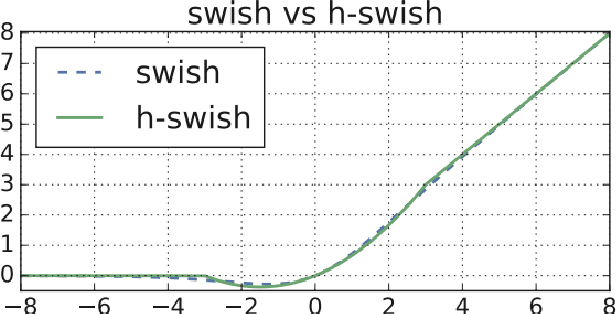

Abstract: In the field of pattern recognition, achieving high accuracy is essential. When training a model to recognize complex images, it is vital to fine-tune the model to achieve the highest accuracy possible. One strategy for fine-tuning a model involves changing its activation function. Most pre-trained models use ReLU as their default activation function, but switching to a different activation function such as Hard-Swish could be beneficial. This study evaluates the performance of models using the ReLU, Swish, and Hard-Swish activation functions across diverse image datasets. Our results show a 2.06% increase in accuracy for models on the CIFAR-10 dataset and a 0.30% increase in accuracy for models on the ATLAS dataset. Modifying the activation functions in the architecture of pre-trained models led to improved overall accuracy.
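For reference, the three activation functions named in the abstract can be written out in plain Python. The sketch uses the standard definitions, with Hard-Swish as x * ReLU6(x + 3) / 6. The abstract does not describe how the functions were swapped into the pre-trained models, so this shows only the functions themselves.

```python
import math


def relu(x: float) -> float:
    """ReLU: max(0, x)."""
    return max(0.0, x)


def swish(x: float) -> float:
    """Swish: x * sigmoid(x), computed in a numerically stable way."""
    if x >= 0:
        return x / (1.0 + math.exp(-x))
    e = math.exp(x)  # safe for negative x, avoids overflow in exp(-x)
    return x * e / (1.0 + e)


def hard_swish(x: float) -> float:
    """Hard-Swish: x * ReLU6(x + 3) / 6, a piecewise-linear stand-in for Swish."""
    relu6 = min(max(x + 3.0, 0.0), 6.0)
    return x * relu6 / 6.0


if __name__ == "__main__":
    for x in (-4.0, -1.0, 0.0, 1.0, 4.0):
        print(f"x={x:+.1f}  relu={relu(x):.4f}  "
              f"swish={swish(x):.4f}  hard_swish={hard_swish(x):.4f}")
```

Hard-Swish is exactly zero for x <= -3 and equals x for x >= 3. Between those points it approximates Swish without evaluating an exponential.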

* 2 pages
