Daniele Romanini

Practical Defences Against Model Inversion Attacks for Split Neural Networks

Apr 21, 2021

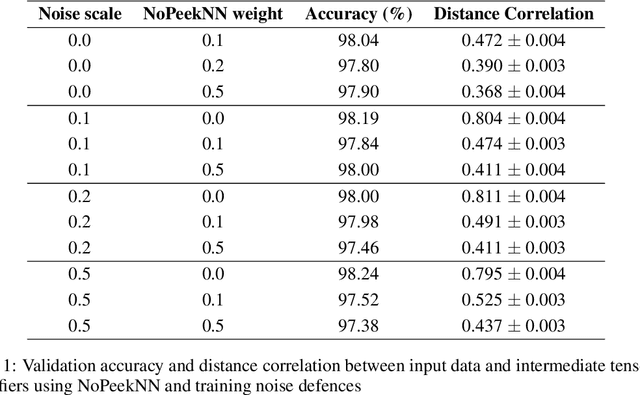

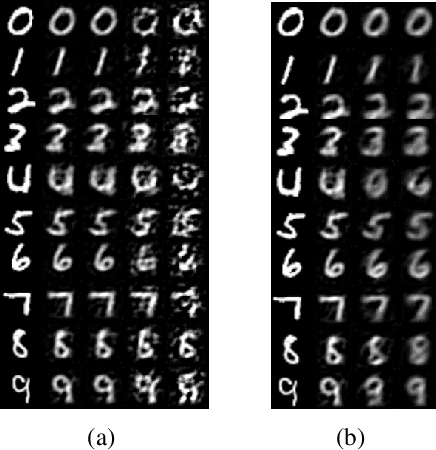

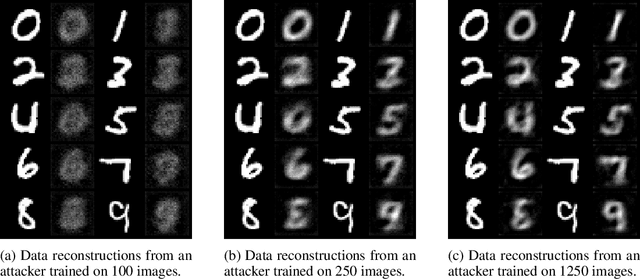

Abstract:We describe a threat model under which a split network-based federated learning system is susceptible to a model inversion attack by a malicious computational server. We demonstrate that the attack can be successfully performed with limited knowledge of the data distribution by the attacker. We propose a simple additive noise method to defend against model inversion, finding that the method can significantly reduce attack efficacy at an acceptable accuracy trade-off on MNIST. Furthermore, we show that NoPeekNN, an existing defensive method, protects different information from exposure, suggesting that a combined defence is necessary to fully protect private user data.

PyVertical: A Vertical Federated Learning Framework for Multi-headed SplitNN

Apr 14, 2021

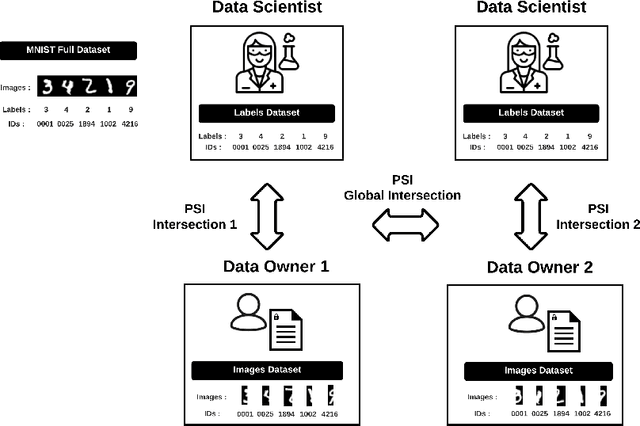

Abstract:We introduce PyVertical, a framework supporting vertical federated learning using split neural networks. The proposed framework allows a data scientist to train neural networks on data features vertically partitioned across multiple owners while keeping raw data on an owner's device. To link entities shared across different datasets' partitions, we use Private Set Intersection on IDs associated with data points. To demonstrate the validity of the proposed framework, we present the training of a simple dual-headed split neural network for a MNIST classification task, with data samples vertically distributed across two data owners and a data scientist.

Add to Chrome

Add to Chrome Add to Firefox

Add to Firefox Add to Edge

Add to Edge