Daniel Teitelman

Adversarial Attack Against Image-Based Localization Neural Networks

Oct 11, 2022

Abstract: In this paper, we present a proof of concept for adversarially attacking the image-based localization module of an autonomous vehicle. The attack aims to cause the vehicle to make wrong navigational decisions and prevent it from reaching a predefined destination in a simulated urban environment. A database of rendered images allowed us to train a deep neural network that performs the localization task, and to develop, implement, and assess the adversarial pattern. Our tests show that this adversarial attack can prevent the vehicle from turning at a given intersection. It does so by manipulating the vehicle's navigational module into falsely estimating its current position, so that the module fails to initiate the turning procedure until the vehicle has missed the last opportunity to turn safely at that intersection.

Stealing Black-Box Functionality Using The Deep Neural Tree Architecture

Feb 23, 2020

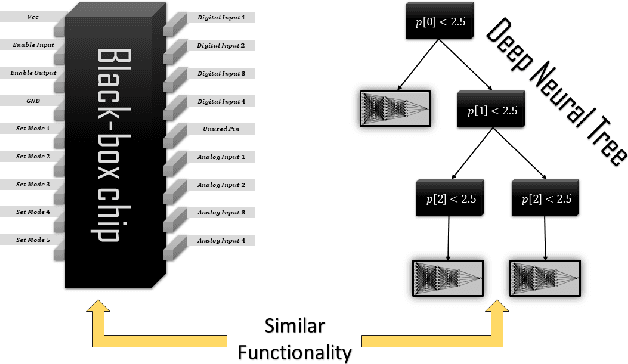

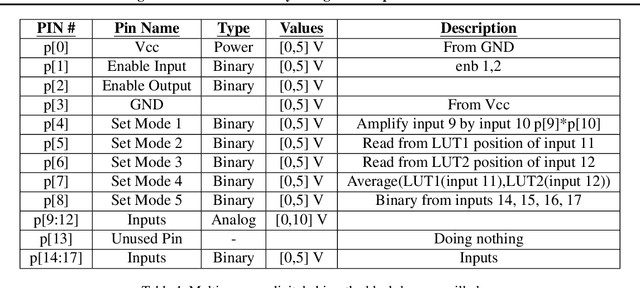

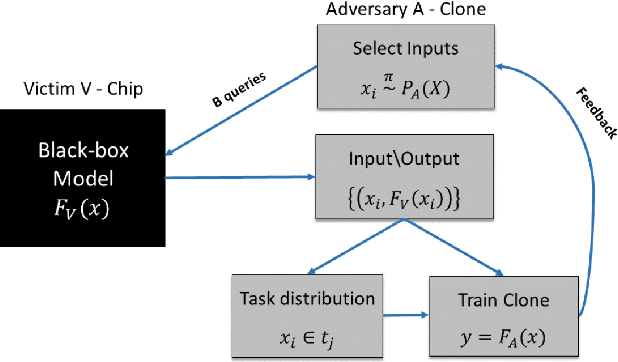

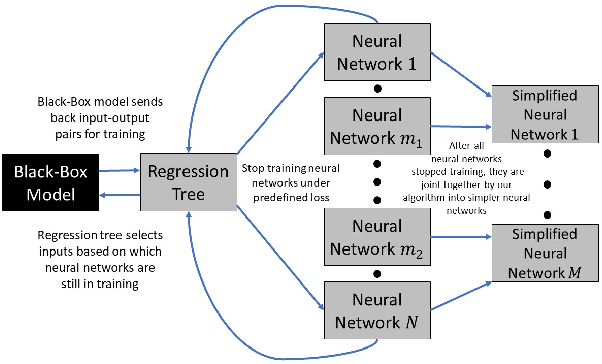

Abstract: This paper makes a substantial step towards cloning the functionality of black-box models by introducing a machine learning (ML) architecture named Deep Neural Trees (DNTs). This new architecture can learn to separate the different tasks of the black-box model and clone its task-specific behavior. We propose training the DNT with an active learning algorithm to obtain faster and more sample-efficient training. In contrast to prior work, we study a complex "victim" black-box model based solely on input-output interactions, while the attacker and the victim model may have completely different internal architectures. The attacker is an ML-based algorithm, whereas the victim is a generally unknown module, such as a multi-purpose digital chip, a complex analog circuit, a mechanical system, software logic, or a hybrid of these. The trained DNT module can not only function as the attacked module but also provides some level of explainability for the cloned model, owing to the tree-like nature of the proposed architecture.
