Daniel J. Wu

Image-based Data Representations of Time Series: A Comparative Analysis in EEG Artifact Detection

Dec 21, 2023

Abstract:Alternative data representations are powerful tools that augment the performance of downstream models. However, there is an abundance of such representations within the machine learning toolbox, and the field lacks a comparative understanding of the suitability of each representation method. In this paper, we propose artifact detection and classification within EEG data as a testbed for profiling image-based data representations of time series data. We then evaluate eleven popular deep learning architectures on each of six commonly-used representation methods. We find that, while the choice of representation entails a choice within the tradeoff between bias and variance, certain representations are practically more effective in highlighting features which increase the signal-to-noise ratio of the data. We present our results on EEG data, and open-source our testing framework to enable future comparative analyses in this vein.
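To make "image-based representation of a time series" concrete, here is a minimal sketch of one widely used encoding, the Gramian Angular Summation Field. The abstract does not name the six representations it compares, so the choice of this encoding is an assumption made for illustration, and the function name and the synthetic sine-wave input are hypothetical rather than taken from the paper's framework.

```python
import numpy as np

def gramian_angular_summation_field(series):
    """Encode a 1-D series as a 2-D image (illustrative, not the paper's code)."""
    x = np.asarray(series, dtype=float)
    lo, hi = x.min(), x.max()
    if hi == lo:
        raise ValueError("series must not be constant")
    # Rescale to [-1, 1] so that every value is a valid cosine.
    scaled = np.clip(2.0 * (x - lo) / (hi - lo) - 1.0, -1.0, 1.0)
    # Treat each value as cos(phi) and recover the angle phi.
    phi = np.arccos(scaled)
    # Pixel (i, j) is cos(phi_i + phi_j), giving an n-by-n image.
    return np.cos(phi[:, None] + phi[None, :])

# Synthetic stand-in for a single signal channel (not real EEG data).
t = np.linspace(0.0, 1.0, 128)
signal = np.sin(2.0 * np.pi * 10.0 * t)
image = gramian_angular_summation_field(signal)
print(image.shape)
```

The resulting square array can be fed to an ordinary image classifier, which is the general setup the abstract describes.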

Practical Deepfake Detection: Vulnerabilities in Global Contexts

Jun 20, 2022

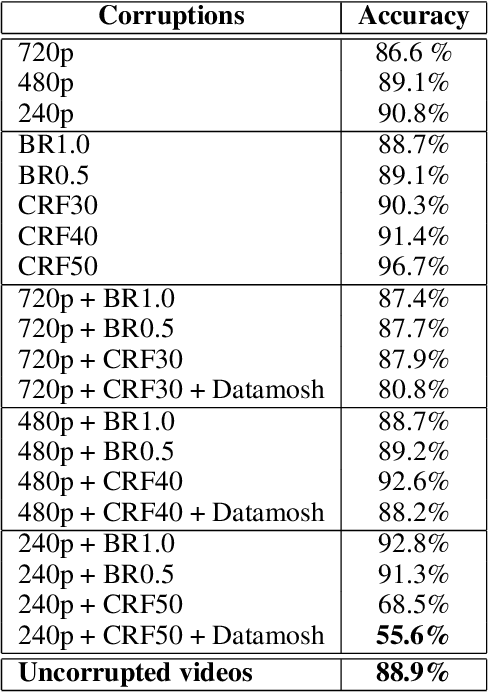

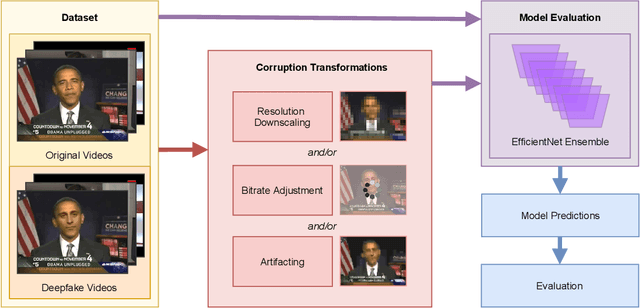

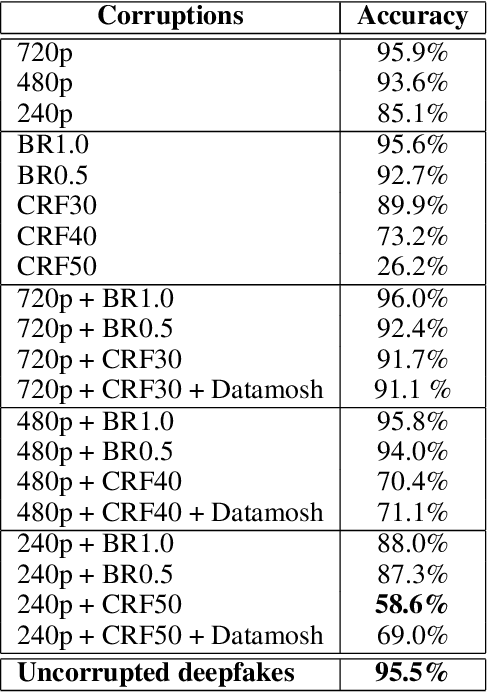

Abstract:Recent advances in deep learning have enabled realistic digital alterations to videos, known as deepfakes. This technology raises important societal concerns regarding disinformation and authenticity, galvanizing the development of numerous deepfake detection algorithms. At the same time, there are significant differences between training data and in-the-wild video data, which may undermine the practical efficacy of these algorithms. We simulate data corruption techniques and examine the performance of a state-of-the-art deepfake detection algorithm on corrupted variants of the FaceForensics++ dataset. While deepfake detection models are robust against video corruptions that align with training-time augmentations, we find that they remain vulnerable to video corruptions that simulate decreases in video quality. Indeed, in the controversial case of the video of Gabonese President Bongo's new year address, the algorithm, which confidently authenticates the original video, judges highly corrupted variants of the video to be fake. Our work opens up both technical and ethical avenues of exploration into practical deepfake detection in global contexts.

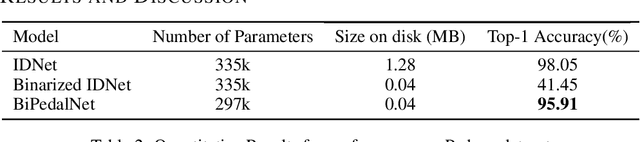

Binarized Neural Networks for Resource-Constrained On-Device Gait Identification

Mar 30, 2021

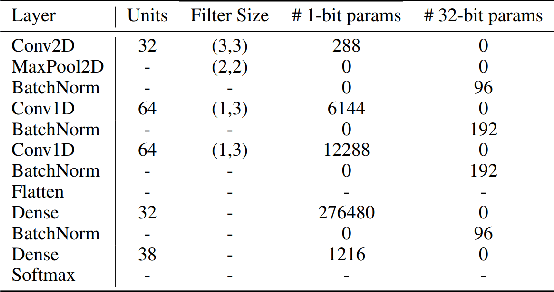

Abstract:User authentication through gait analysis is a promising application of discriminative neural networks -- particularly due to the ubiquity of the primary sources of gait accelerometry, in-pocket cellphones. However, conventional machine learning models are often too large and computationally expensive to enable inference on low-resource mobile devices. We propose that binarized neural networks can act as robust discriminators, maintaining an acceptable level of accuracy while dramatically decreasing memory requirements, thereby enabling on-device inference. To this end, we propose BiPedalNet, a compact CNN that nearly matches the state-of-the-art on the Padova gait dataset, with only 1/32 of the memory overhead.
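The 1/32 figure is consistent with replacing each 32-bit floating-point weight by a single bit. The sketch below illustrates that arithmetic with NumPy under the assumption of plain sign binarization; the helper names are hypothetical, and this is not BiPedalNet's actual implementation, which the abstract does not describe.

```python
import numpy as np

def binarize_and_pack(weights):
    """Keep only the sign of each weight and store it as one bit."""
    signs = weights >= 0  # True stands for +1, False for -1
    return np.packbits(signs.ravel()), weights.shape

def unpack(packed, shape):
    """Recover the {-1, +1} weight tensor from its packed bits."""
    n = int(np.prod(shape))
    bits = np.unpackbits(packed)[:n]
    return (bits.astype(np.float32) * 2.0 - 1.0).reshape(shape)

# Hypothetical layer of float32 weights, generated at random for the demo.
rng = np.random.default_rng(0)
w = rng.standard_normal((64, 128)).astype(np.float32)

packed, shape = binarize_and_pack(w)
restored = unpack(packed, shape)

# 32 bits per weight before, 1 bit per weight after.
print(w.nbytes // packed.nbytes)
```

Only the storage cost is shown here; training a binarized network so that accuracy survives the quantization is a separate problem that this sketch does not address.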

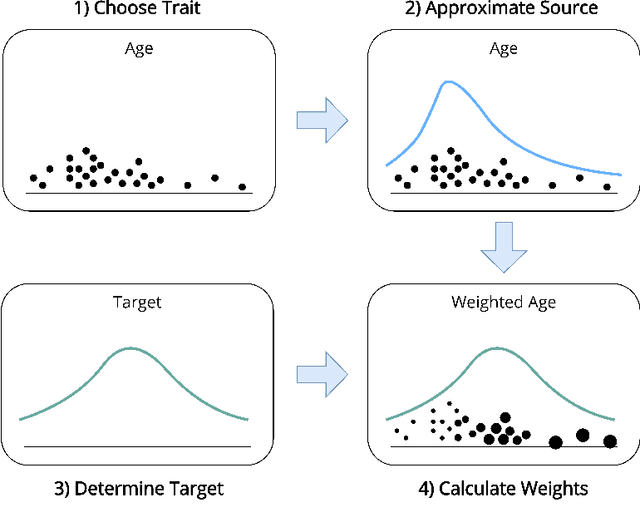

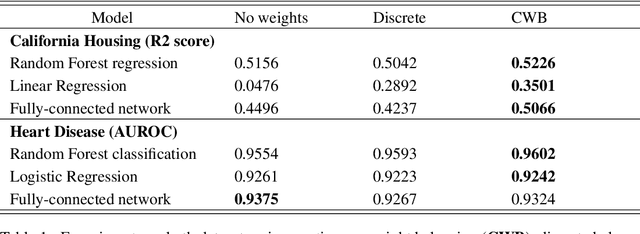

Continuous Weight Balancing

Mar 30, 2021

Abstract:We propose a simple method by which to choose sample weights for problems with highly imbalanced or skewed traits. Rather than naively discretizing regression labels to find binned weights, we take a more principled approach -- we derive sample weights from the transfer function between an estimated source and specified target distributions. Our method outperforms both unweighted and discretely-weighted models on both regression and classification tasks. We also open-source our implementation of this method (https://github.com/Daniel-Wu/Continuous-Weight-Balancing) to the scientific community.
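One way to read the idea in this abstract is as density-ratio weighting: estimate the density of the observed labels, then weight each sample by the target density divided by that estimate. The sketch below follows that reading using NumPy only. The function names, the Gaussian kernel estimator, the bandwidth rule, and the uniform target are all assumptions made for illustration; the authors' implementation is the one in the linked repository and may differ.

```python
import numpy as np

def gaussian_kde_pdf(samples, query, bandwidth):
    """Evaluate a Gaussian kernel density estimate of `samples` at `query`."""
    diffs = (query[:, None] - samples[None, :]) / bandwidth
    kernel = np.exp(-0.5 * diffs**2) / np.sqrt(2.0 * np.pi)
    return kernel.mean(axis=1) / bandwidth

def continuous_weights(labels, target_pdf, bandwidth=None, eps=1e-12):
    """Weight each sample by target density over estimated source density."""
    labels = np.asarray(labels, dtype=float)
    if bandwidth is None:
        # Normal-reference rule of thumb (an assumption, not from the paper).
        bandwidth = 1.06 * labels.std() * len(labels) ** (-1.0 / 5.0)
    source = gaussian_kde_pdf(labels, labels, bandwidth)
    weights = target_pdf(labels) / np.maximum(source, eps)
    # Normalize so the average weight is 1.
    return weights / weights.mean()

# Hypothetical skewed regression labels with a uniform target distribution.
rng = np.random.default_rng(0)
labels = rng.exponential(scale=1.0, size=2000)
lo, hi = labels.min(), labels.max()
weights = continuous_weights(labels, lambda y: np.full_like(y, 1.0 / (hi - lo)))

# Rare (large) labels should receive larger weights than common (small) ones.
print(weights[labels > 3].mean() > weights[labels < 0.5].mean())
```

The weights can then be passed to any loss or estimator that accepts per-sample weights, with no binning of the labels required.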
